Launched this week

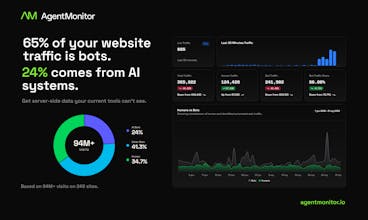

Agent Monitor captures and classifies AI & bot traffic using server-side data. Across 94M+ visits on 249 sites, 65% of traffic was bots - 24% AI bots like ChatGPT, Gemini, and Claude. None of this appears in GA4. We use transparent server-side signals to classify every visit. Get bot profiles, per-bot rankings, AI assistant traffic, and global benchmarks. Built by an SEO agency that needed real data.

Agent Monitor

PicWish

@marcin_kaminski Does it handle Manus or similar agents?

Agent Monitor

@mohsinproduct Yes. Manus, Operator and similar are detected and labeled as AI Agents. Check out the attached screenshot.

@marcin_kaminski Last time I audited a client site, GA4 showed a 15% traffic dip but conversions were flat. Took two days digging through raw Nginx logs to realize AI crawlers were the gap. Agent Monitor doing deterministic classification server-side is the right call... makes it auditable when you need to explain the numbers to someone who doesn't trust ML labels.
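For readers who want to try the kind of log audit described above, here is a minimal sketch of deterministic, rule-based classification over Nginx access logs. It assumes the default `combined` log format. The rule table and category names (`ai_assistant`, `ai_crawler`, `search_crawler`) are made up for illustration and are not Agent Monitor's actual rules. Matching on the user agent alone also misses bots that imitate browsers.

```python
import re
from collections import Counter

# Nginx "combined" format: ip, -, user, [time], "request", status, bytes, "referer", "user agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

# Hypothetical rule table (assumption): user-agent substring -> category, first match wins.
RULES = [
    ("ChatGPT-User", "ai_assistant"),
    ("GPTBot", "ai_crawler"),
    ("ClaudeBot", "ai_crawler"),
    ("Googlebot", "search_crawler"),
    ("bingbot", "search_crawler"),
]


def classify(user_agent: str) -> str:
    """Return the category of the first rule whose token appears in the user agent."""
    ua = user_agent.lower()
    for token, category in RULES:
        if token.lower() in ua:
            return category
    return "unclassified"  # could be a human or an unknown bot


def summarize(lines) -> Counter:
    """Count visits per category; lines that do not match the format are 'unparsed'."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            counts["unparsed"] += 1
            continue
        counts[classify(match.group("user_agent"))] += 1
    return counts


# Illustrative log lines (made-up data, documentation IP ranges).
SAMPLE = [
    '203.0.113.5 - - [10/Dec/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; ChatGPT-User/1.0"',
    '203.0.113.6 - - [10/Dec/2025:10:00:05 +0000] "GET /blog HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; GPTBot/1.2"',
    '198.51.100.7 - - [10/Dec/2025:10:00:09 +0000] "GET / HTTP/1.1" 200 4096 '
    '"https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/133.0"',
    'this line is not in combined format',
]

if __name__ == "__main__":
    for category, count in summarize(SAMPLE).items():
        print(category, count)
```

Because every label traces back to one explicit rule, the numbers can be explained line by line, which is the auditability point made in the comment above.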

Agent Monitor

@tereza_hurtova Thank you so much. Great question!

Let me explain our approach to this. Bots for competitive research or price monitoring aren't really "valuable traffic" for us as site owners. 99% of the time it's just regular bots. We monitor them (because we literally monitor all traffic on the site) but we don't classify them.

However, if someone is using Operator from OpenAI or Manus, that traffic is definitely detected and interpreted as an AI Agent.

Hope that clarifies it :) The whole topic of AI traffic, monitoring and classifying it is really fresh and still challenging. We're doing our best to classify everything we can. Right now this is the only proper way to analyze how people are using AI assistants like ChatGPT.

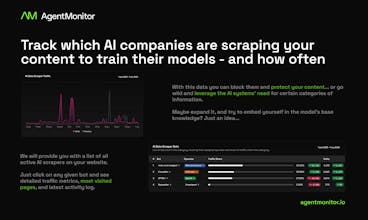

The biggest value, right now and in the near future, is in bots like ChatGPT-User: AI assistants browsing the web on behalf of us humans. This was very visible just before Christmas, when traffic from these assistants was huge in e-commerce stores. It means this traffic is already very important... but still unnoticed by most people :)

@marcin_kaminski Thanks a lot for the detailed explanation! I really appreciate the transparency. Your approach makes a lot of sense.

The pace at which this is all evolving is wild. I’ve been in marketing + tech for 10+ years and I don’t think I’ve ever experienced a shift this fast! The last year alone has been a complete whirlwind. But at least we’re not bored. 😅

Wishing you strong momentum with this!

Agent Monitor

Hey @tereza_hurtova!

Some AI operators don't play nice and send out bots that look and behave exactly like humans. We wouldn't be surprised if many of them appeared in GA4 and muddied the stats.

To expand on what our CEO mentioned about Manus AI — it's actually a great example of the "mimicking" problem you asked about.

Manus doesn't use any unique signatures, making it surprisingly hard to identify with standard tools. However, we have some cool technical tricks and algorithms up our sleeves and so far, we’ve had great success in pinpointing those pesky bots! :)

This is exactly why we went the server-side signals route. Since we analyze traffic at the infrastructure level rather than relying on client-side JS (like GA4), we have the hard data needed to figure out who each bot really belongs to.

@xwirkijowski That’s fascinating! And slightly terrifying at the same time. 😅 The mimicking problem is exactly what makes this whole space so tricky. If agents start behaving indistinguishably from humans at the client side, relying purely on JS-based analytics feels increasingly fragile.

The infrastructure-level angle makes a lot of sense in that context. Out of curiosity - Do you think we’ll eventually need some kind of standardized "AI agent disclosure" layer on the web? Or will this remain an ongoing cat-and-mouse game?

Really appreciate you sharing the technical nuance here!

Agent Monitor

Happy to nerd out about this @tereza_hurtova!

There are actually some great emerging practices in the space. We’ve noticed that OpenAI recently started following the lead of Microsoft and Google by setting a "From" header in most cases. OpenAI was also one of the first operators we saw adopting Signature-Agent headers, which build on an actual standard (RFC 9421, HTTP Message Signatures) to cryptographically verify bot identities!
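As a rough illustration of what those disclosure signals look like on the server side, the sketch below only checks which self-identification headers a request carries. The function names and example values are hypothetical. It does not verify anything cryptographically: a real check would validate the `Signature` against the operator's published key, as RFC 9421 describes.

```python
# Headers a well-behaved bot may send to identify itself (compared case-insensitively).
DISCLOSURE_HEADERS = ("from", "signature-agent", "signature-input", "signature")
SIGNATURE_HEADERS = {"signature-agent", "signature-input", "signature"}


def disclosure_signals(headers: dict) -> dict:
    """Return the disclosure headers present in the request, keyed by lowercase name."""
    normalized = {name.lower(): value for name, value in headers.items()}
    return {name: normalized[name] for name in DISCLOSURE_HEADERS if name in normalized}


def claims_identity(headers: dict) -> bool:
    """True if the request carries a From header or a complete set of signature headers.

    This only detects a *claim*; it is not proof. Verification is a separate step.
    """
    signals = disclosure_signals(headers)
    return "from" in signals or SIGNATURE_HEADERS <= signals.keys()


# Hypothetical request headers, for illustration only.
example = {
    "User-Agent": "ExampleBot/1.0",
    "Signature-Agent": '"https://bot.example.com"',
    "Signature-Input": 'sig1=("@authority" "signature-agent");keyid="example-key"',
    "Signature": "sig1=:ZXhhbXBsZQ==:",
}

if __name__ == "__main__":
    print(disclosure_signals(example))
    print(claims_identity(example))
```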

I do believe more companies will implement this standard, effectively creating that "agent disclosure" layer you mentioned. However, there’s a flip side: some operators have a strong incentive to stay anonymous to avoid being blocked.

On a side note, you touched on the issue of bots impersonating humans, but our product can also verify whether, for example, that Googlebot visit you just had was actually from Google, or whether it was someone else scraping your content while pretending to be them. 😅
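One common way to run this kind of check yourself is the reverse-then-forward DNS lookup that Google documents for Googlebot. The sketch below assumes that method and is not a description of how Agent Monitor does it. The resolver functions are injectable so the logic can be tested without network access, and `forward_lookup` as written only handles IPv4.

```python
import socket

# Hostname suffixes Google documents for its crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def reverse_lookup(ip: str) -> str:
    """Resolve an IP address to its primary hostname (PTR record)."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> list:
    """Resolve a hostname to its list of IPv4 addresses."""
    return socket.gethostbyname_ex(hostname)[2]


def is_genuine_googlebot(ip: str, reverse=reverse_lookup, forward=forward_lookup) -> bool:
    """Check that the IP reverse-resolves to a Google host that resolves back to the same IP."""
    try:
        hostname = reverse(ip)
    except OSError:  # covers socket.herror and socket.gaierror
        return False
    if not hostname.lower().rstrip(".").endswith(GOOGLE_SUFFIXES):
        return False
    try:
        return ip in forward(hostname)
    except OSError:
        return False
```

The forward lookup matters because anyone who controls the reverse DNS for their own IP range can make it return a `googlebot.com` name, but they cannot make Google's DNS point that name back at their IP.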

So, in my opinion, it’ll be a mix. A standardized layer for the "good citizens" of the new AI world, and an ongoing cat-and-mouse game for everyone else.

But hey, that's what makes our job interesting! :D

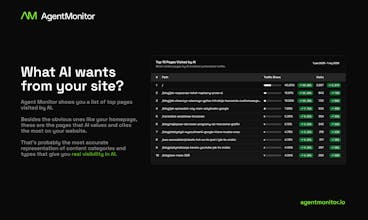

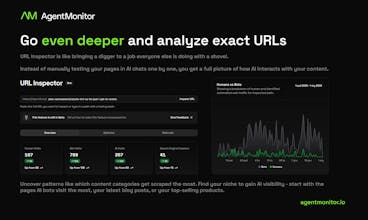

What I like most is the feature that lets you check specific URLs and see how often they’re visited by AI agents. Thanks to this, I can quickly verify whether, after publishing new content on our clients’ websites, those subpages are actually being visited by AI agents. This gives me a clear signal of whether we have a chance to appear in LLM-generated answers.

The option that shows the time of the first bot visit is also very valuable - it tells me how long it takes for bots to discover and reach a new URL.

@grzegorz_slo Thanks! URL Inspector is actually still in beta, but it's already one of the features used the most day-to-day. Checking how fast AI bots pick up new content gives a pretty clear signal of whether those pages have a shot at appearing in AI-generated answers.

We have a lot of ideas for where to take it next, so stay tuned :)

@grzegorz_slo @adam_przybylowicz Microsoft Clarity shipped a Bot Activity dashboard using CDN server logs, but it only gives aggregate operator breakdowns. Agent Monitor doing per-URL first-visit timing is a sharper signal for whether content has a shot at LLM citation. Knowing ChatGPT-User hit a page 6 hours after publish vs. 3 days later is the kind of granularity Clarity doesn't touch.
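To make the first-visit idea concrete, here is a small sketch of how per-URL discovery delay could be computed from already-classified visits. The data shapes, names, and sample values are assumptions for illustration, not URL Inspector's implementation.

```python
from datetime import datetime, timezone


def discovery_delays(visits, published_at):
    """Return {(path, bot): delay between publish time and that bot's first later visit}.

    visits: iterable of (timestamp, path, bot) tuples.
    published_at: {path: publish timestamp}. Paths without a publish time and
    visits that happened before publication are ignored.
    """
    delays = {}
    for timestamp, path, bot in visits:
        published = published_at.get(path)
        if published is None or timestamp < published:
            continue
        delay = timestamp - published
        key = (path, bot)
        if key not in delays or delay < delays[key]:
            delays[key] = delay
    return delays


# Made-up example data.
PUBLISHED = {"/new-collection": datetime(2025, 12, 1, 8, 0, tzinfo=timezone.utc)}
VISITS = [
    (datetime(2025, 12, 4, 8, 0, tzinfo=timezone.utc), "/new-collection", "GPTBot"),
    (datetime(2025, 12, 1, 14, 0, tzinfo=timezone.utc), "/new-collection", "ChatGPT-User"),
    (datetime(2025, 12, 2, 9, 30, tzinfo=timezone.utc), "/new-collection", "ChatGPT-User"),
    (datetime(2025, 12, 1, 9, 0, tzinfo=timezone.utc), "/untracked", "GPTBot"),
]

if __name__ == "__main__":
    for (path, bot), delay in discovery_delays(VISITS, PUBLISHED).items():
        print(path, bot, delay)
```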

Agent Monitor

@grzegorz_slo Great to hear! Thanks for testing it out.

This feature was built exactly for this purpose - so everyone can check if and how often the content we create is being visited by AI assistants like ChatGPT.

Really happy it's already helpful for you.

So this is an SEO tool for bot traffic? Very cool. I know GTM needs heavy setup, but is Agent Monitor's more lightweight approach a good trade-off?

@peterz_shu Thanks! It's not strictly an SEO tool, we built it as a bot traffic overview that any webdev, SEO specialist or site owner can use to see what's actually hitting their server. The SEO focus comes from the fact that we built it for our own SEO agency, so that's what's been most useful so far :)

As for GTM - it's flexible but requires serious setup and custom logic to even start classifying AI bots. Agent Monitor just works out of the box, essentially plug-and-play, and shows you the full picture: humans, AI bots, other bots, which pages they hit, how often.

Regarding the trade-off: across ~200 users so far, we've had almost no feedback about missing features. A few people asked for more detailed reporting, which we've already addressed. So the lightweight approach seems to cover what people actually need.

We're still early and shaping the product based on real user needs, so feedback is super welcome!

Agent Monitor

@peterz_shu Thanks! Great question.

Setup is really simple - just install a plugin for WordPress or PrestaShop, or follow a few easy steps to integrate via Cloudflare.

As for data, the app collects everything GTM would collect, essentially all server logs. For analyzing AI traffic that's more than enough. What we focus on is building a massive funnel, filtering out noise, categorizing, naming, and helping with interpretation. That's it, but that's also a lot. With GTM everyone would have to do all of this manually.

We also built something called URL Inspector - a simple tool for analyzing specific URLs. You can see if and how often a particular page was visited by AI bots, and which ones exactly. GTM probably won't give you tools like that.

Thanks again for the question. Did I answer it thoroughly? Any other questions? :)

WebWave

From Poland with love ;-)

Agent Monitor

Made in Poland, monitoring the whole world! Thanks for your support @janusz_mirowski :D

Agent Monitor

@janusz_mirowski Love it! Pierogi-powered development all the way 😄

Thanks for having this in a free two-week trial, @marcin_kaminski. Congrats on the launch!

Agent Monitor

@neilverma Thank you! Hope you enjoy testing it out.

Product Hunt

Agent Monitor

@curiouskitty Thanks. Great question!

Agent Monitor is an analytics tool focused mainly on monitoring AI traffic, classifying it precisely and helping interpret it.

Our app delivers more accurate and better-classified data than Cloudflare. CF is good for quick integration with our app, and the free version is enough. The two tools do different jobs and can't really be compared one to one. I recommend using both: CF (security, caching, speed, DNS...) and Agent Monitor (analyzing AI traffic).

GA4 focuses on people, our app focuses on bots with special attention to AI traffic. So in your tech stack you can place our app next to GA4. GA4 for humans, Agent Monitor for bots. GA4 answers the question: how many people visited my site. Agent Monitor answers the question: how many times did ChatGPT visit our site on behalf of people.

We also built a URL Inspector feature that helps interpret and analyze AI traffic on specific pages. This way you can find out if your content is being used by AI to answer people's questions, for deep research, etc.

To sum up, this is a different kind of app than the ones we've known so far. It's something new, something that didn't exist before - and it's hard to describe something completely new using old concepts.

So if you want to check if and how well your content is visible to AI, which pages are visited most often, or whether the new product collection you added to your store is being searched by people - but through ChatGPT and other assistants - our app makes that possible. This way you can optimize your content and your business and marketing decisions not just for people, but also for people using AI assistants.