doQment

Turn websites into ephemeral MCP servers! From docs to code

66 followers

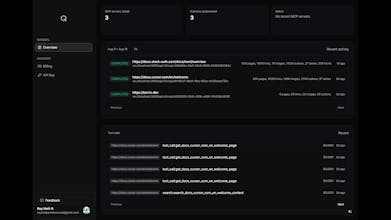

Transform any website into a comprehensive MCP-like information retrieval system for AI coding agents.

- Create an account
- Crawl (via REST or manually)
- Connect, and you're done

Free credits are available for new accounts as an experiment.

doQment

Magiclight

Congratulations on the launch!

Can you connect multiple websites into one MCP-like system simultaneously?

doQment

@jason123 You can have up to 3 live MCP servers and connect all of them to your agent (Cursor, Codex, etc.). Since crawls gather a lot of information, a single crawl of 200 pages and thousands of components is enough for the models to work with.

Merging more than one crawled domain into a single MCP server with the same number of tools seems like it would make retrieval harder and more token-hungry, and the last thing I want is to burn those precious thinking tokens. But the better the models get, the better the chance to expand on it.

So for now it's up to 3 MCPs rather than multi-crawl MCPs, but I could add that based on feedback, bigger context windows, and testing.

doQment

More interesting info and launch tweet: https://x.com/reyneill_/status/1963885371546095692

"...1- It spins up a crawl of the desired website, and not an ordinary one: I'm talking pages, sub-pages, subdomains, links, components, images, etc. At max settings, a crawl can run for hours.

2- Creates an MCP server with 10 tools that give your connected agent full access to this data

3- Receive crawl updates in real time, connect to @Cursor and @Codex by OpenAI with a single click, or use the mcp_connection_info to attach any other agent, like @Claude Code

PS: You can currently use it for free, as I'm offering free credits to new accounts to test the waters on @Vercel

Billing is pay-per-use, which seemed the best way to offer it, and the cost per operation is very low.

You can crawl a 200-page site for $0.001 per page.

Let me know about any issues or improvements via comments, email, or my X DMs; I'll keep a tight feedback cycle."
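
The crawl step in the quote can be pictured as a breadth-first walk over links. Below is a minimal Python sketch under stated assumptions, not doQment's actual crawler: the function names (`crawl`, `same_site`, `estimate_cost`), the injected `fetch` callable, and the rule that "same site" means the start host plus its subdomains are all hypothetical. The only figures taken from the quote are the 200-page scale and the $0.001-per-page rate, which put a full 200-page crawl at about $0.20.

```python
from collections import deque
from decimal import Decimal
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse

# Rate quoted in the launch tweet; everything else here is illustrative.
PRICE_PER_PAGE = Decimal("0.001")


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag fed to it."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def same_site(url: str, root_host: str) -> bool:
    """True for the root host itself and any of its subdomains (assumed rule)."""
    host = urlparse(url).hostname or ""
    return host == root_host or host.endswith("." + root_host)


def crawl(
    start_url: str,
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 200,
) -> Dict[str, str]:
    """Breadth-first crawl of start_url's host and subdomains, capped at max_pages.

    `fetch` is a hypothetical hook returning a page's HTML, or None on failure;
    injecting it keeps the sketch free of network code.
    """
    root_host = urlparse(start_url).hostname or ""
    seen = {start_url}
    queue = deque([start_url])
    pages: Dict[str, str] = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # unreachable or non-HTML page: skip it
            continue
        pages[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        extractor.close()
        for href in extractor.links:
            # Resolve relative links and drop #fragments so /docs#a == /docs.
            link, _fragment = urldefrag(urljoin(url, href))
            if link in seen or urlparse(link).scheme not in ("http", "https"):
                continue
            if same_site(link, root_host):
                seen.add(link)
                queue.append(link)
    return pages


def estimate_cost(pages: Dict[str, str]) -> Decimal:
    """Pay-per-use estimate: pages crawled times the per-page rate."""
    return len(pages) * PRICE_PER_PAGE


if __name__ == "__main__":
    # Tiny in-memory "website" standing in for real HTTP fetches.
    site = {
        "https://example.com/": '<a href="/docs">Docs</a> '
        '<a href="https://api.example.com/ref">API</a> '
        '<a href="https://other.org/">Elsewhere</a>',
        "https://example.com/docs": '<a href="/">Home</a> <a href="#setup">Setup</a>',
        "https://api.example.com/ref": "<p>Reference</p>",
    }
    pages = crawl("https://example.com/", site.get)
    print(sorted(pages), estimate_cost(pages))
```

Injecting `fetch` keeps the page cap and the same-site rule easy to check against an in-memory site, as the `__main__` demo does; a real crawler would also need politeness delays, robots.txt handling, and retries.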

Agnes AI

This is interesting! Spawning your own MCP server on demand instead of endlessly copy-pasting docs is such a game-changer. I literally struggled with this on my last project. Super clever solution, team @reyneill

What's the difference from a web search MCP?

doQment

@tibelf Web search chains the top X search results into XML or a similar format for LLMs, and sometimes pulls content from the pages that come up (at least the good ones).

That's quite different from getting relevant, tailored information out of a documentation site (or any other site) the way a human would when researching for the LLM to apply, especially knowledge outside its training data. That's what doQment aims for.

doQment

@tmtabor The crawl does the RAG for you. RAG will never go away; it will just be enhanced. The point of the product is to build the best RAG possible so you no longer have to do the RAG for the LLM yourself.
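
doQment doesn't describe how it ranks crawled content, so this is only an illustrative sketch of the retrieval half of "the crawl does the RAG for you": crawled pages (URL to text) are indexed once, and a search function returns the most relevant URLs for an agent's query, roughly the kind of job an MCP tool could wrap. `build_index`, `search_pages`, and the smoothed TF-IDF scoring are hypothetical choices made for brevity; a real system might use embeddings or hybrid ranking instead.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

TOKEN_RE = re.compile(r"[a-z0-9_]+")


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; underscores kept so code identifiers survive."""
    return TOKEN_RE.findall(text.lower())


def build_index(pages: Dict[str, str]) -> Tuple[Dict[str, Counter], Counter]:
    """Per-page term counts plus the number of pages containing each term."""
    term_counts = {url: Counter(tokenize(text)) for url, text in pages.items()}
    doc_freq: Counter = Counter()
    for counts in term_counts.values():
        doc_freq.update(counts.keys())  # +1 per page, not per occurrence
    return term_counts, doc_freq


def search_pages(
    query: str,
    term_counts: Dict[str, Counter],
    doc_freq: Counter,
    k: int = 3,
) -> List[str]:
    """Return the top-k page URLs ranked by a smoothed TF-IDF score."""
    n_docs = len(term_counts)
    query_terms = set(tokenize(query))
    scores: Dict[str, float] = {}
    for url, counts in term_counts.items():
        score = 0.0
        for term in query_terms:
            tf = counts[term]  # Counter returns 0 for missing terms
            if tf:
                # Smoothed IDF: terms that are rarer across the crawl weigh more.
                idf = math.log((1 + n_docs) / (1 + doc_freq[term])) + 1
                score += tf * idf
        if score > 0:
            scores[url] = score
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda url: (-scores[url], url))[:k]


if __name__ == "__main__":
    crawled = {
        "https://example.com/auth": "Authentication uses an API key. Pass the API key in the header.",
        "https://example.com/billing": "Billing is pay per use.",
    }
    index = build_index(crawled)
    print(search_pages("api key", *index))
```

Keeping the index separate from the query function means the expensive step runs once per crawl, while each agent query only does dictionary lookups and a sort.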

Turning any site into a self-serve MCP for agents is such a smart workaround; it feels like a cheat code for agent context problems. I'm already thinking of 3 domains I want to test this on.