Ollama

The easiest way to run large language models locally

5.0•25 reviews•1.1K followers

Run Llama 2 and other models on macOS, with Windows and Linux coming soon. Customize and create your own.

This is the 3rd launch from Ollama.

Ollama Desktop App

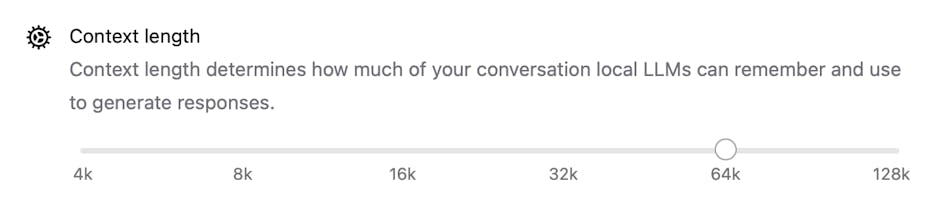

Ollama's new official desktop app for macOS and Windows makes it easy to run open-source models locally. Chat with LLMs, use multimodal models with images, or reason about files, all from a simple, private interface.

Free

Launch Team

AnythingLLM

AltPage.ai

No way—running top-tier vision models like Llama 4 locally is a total game-changer! My laptop always struggled before, so I’m seriously impressed by the new memory management.

Just tried Llama 4 locally with the new update: zero crashes, super responsive, and the new memory handling is 🔥. Huge leap from the CLI-only days.

Very interesting launch, @zaczuo and @jmorgan. Maybe I have got the thesis wrong, but I wonder what the utility of this one is for non-coders like me. I love Claude Code, but is this one better?

Great product. This is huge for non-technical users! Any plans for model discovery/browsing within the app?

Ahey

I really love this app! It would be great if it included web search functionality.

Awesome launch!!! Very useful! Thank you very much for this ❤️

Anything that allows users to run LLMs locally is just awesome in my book. I think everyone should have access to LLMs without the costs associated with the cloud. Most people have basic needs, such as help with their day-to-day tasks, and don't have the requirements of a machine learning researcher. I also think local LLMs integrated into the operating system will make us more productive than cloud-based ones in the future.

Good to see the progress on this!