clawsec

clawsec verifies AI agent skills before you install them

1 follower

clawsec verifies AI agent skills before you install them

1 follower

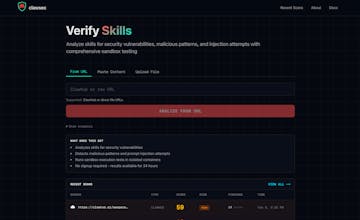

clawsec verifies AI agent skills before you install them. Our multi-stage pipeline - YARA pattern detection, LLM semantic analysis, and false positive filtering - catches prompt injections, credential harvesting, and hidden malicious code. Paste a skill, get a trust score. No signup needed.

Free

Launch Team / Built With

Anima - OnBrand Vibe Coding — Design-aware AI for modern product teams.

Design-aware AI for modern product teams.

Promoted

Maker

📌Report