Papercuts

Deploy AI agents to use your production app like a real user

92 followers

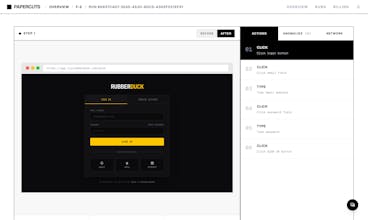

Deploy AI agents that flow through your production app like a real user. Just provide a URL and get notified when something breaks.

Macky

Curatora

@sayuj_suresh DOM-based tests miss real user pain all the time. Testing with agents that actually see and navigate the UI feels like the natural next step for modern apps.

I am seeing the same shift while building Curatora. Systems that observe real outcomes, not just internal states, catch issues much earlier. Curious how teams adopt this in production.

Macky

@imtiyazmohammed Totally agree, that’s been my experience as well. DOM-based tests are great for verifying assumptions, but they often miss how the product actually feels to a user.

We’re seeing teams adopt this gradually, starting with a few critical flows in production and using agents as an extra signal alongside existing monitoring.

@sayuj_suresh Testing from the user’s point of view is where most hidden state and regressions finally surface.

We’ve seen similar blind spots appear once agents interact with real UIs and workflows while building GTWY.

Product Hunt

Macky

@curiouskitty Great question. I don’t see Papercuts as replacing Playwright/Cypress or APM/RUM; they solve different problems.

Scripted tests verify expected behavior in controlled environments, and APM/RUM tell you when something is already broken for real users. Papercuts sits in between: agents continuously exercise real production flows and catch UX and logic regressions before they show up in dashboards or support tickets.

The usual trigger is when teams realise that tests are green and metrics look fine, but users still hit papercuts: broken edge cases, conditional flows, or subtle UI regressions that no one explicitly tested for. That’s where adding agents starts paying off fast.

Love the vision-based approach here! Traditional DOM selectors are indeed brittle and miss the actual user experience. The fact that your agents can handle dynamic forms and conditional logic without hardcoded selectors is exactly what production testing needs.

@sayuj_suresh Your point about tests being "blind" resonates strongly. We've seen this pattern where CI is green but users hit real issues in production. Vision-based agents that can adapt to UI changes are the future of testing.

One question: How do you handle authentication flows and state management across test runs? For complex SaaS apps with multi-tenant architectures, maintaining proper test isolation while simulating real user sessions can be tricky.

Testing with real user flows is where hidden state and edge cases finally show up.

We’ve seen how valuable that signal becomes once agents interact with production systems while building GTWY.