NexaSDK for Mobile

Easiest solution to deploy multimodal AI to mobile

654 followers

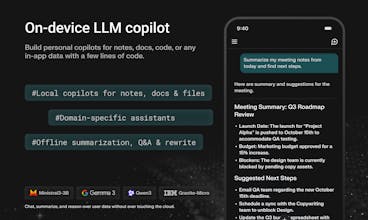

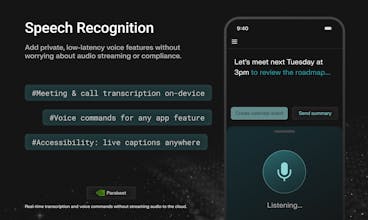

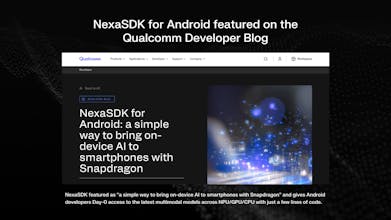

NexaSDK for Mobile lets developers run the latest multimodal AI models fully on-device in iOS & Android apps, with Apple Neural Engine and Snapdragon NPU acceleration. In just 3 lines of code, build chat, multimodal, search, and audio features with no cloud cost, complete privacy, 2× faster inference, and 9× better energy efficiency.

NexaSDK for Mobile

Hey Product Hunt — I’m Zack Li, CTO and co-founder of Nexa AI 👋

We built NexaSDK for Mobile after watching too many mobile app development teams hit the same wall: the best AI experiences want to use your users’ real context (notes, photos, docs, in-app data)… but pushing that to the cloud is slow, expensive, and uncomfortable from a privacy standpoint. Going fully on-device is the obvious answer — until you try to ship it across iOS + Android with modern multimodal models.

NexaSDK for Mobile is our “make on-device AI shippable” kit. It lets you run state-of-the-art models locally across text + vision + audio with a single SDK, and it’s designed to use the phone’s NPU (the dedicated AI engine), so you get ~2× faster inference and ~9× better energy efficiency. That matters on a phone, where battery life is a hard constraint.

What you can build quickly:

On-device LLM copilots over user data (messages/notes/files) — private by default

Multimodal understanding (what’s on screen / in camera frames) fully offline

Speech recognition for low-latency transcription & voice commands

Plus: no cloud API cost, day-0 model support, and one SDK across iOS/Android

Try it today at https://sdk.nexa.ai/mobile. I’d love your real feedback:

What’s the first on-device feature you’d ship if it were easy?

What’s your biggest blocker today — model support, UX patterns, or performance/battery?

NexaSDK for Mobile

@zack_learner We look forward to hearing everyone's feedback! Feel free to ask us any questions.

@zack_learner How does NexaSDK handle different NPUs across devices? Is performance consistent on older phones too?

NexaSDK for Mobile

@zack_learner @masump Great question. NexaSDK uses our NexaML runtime as an abstraction layer: at runtime we detect the device’s available accelerators (Apple Neural Engine / Snapdragon NPU / GPU / CPU) and route each model/operator to the best backend for that device. Same app code — the SDK handles the hardware differences.

On older phones, performance won’t be identical (hardware is the bottleneck), but it’s predictable: we automatically fall back to GPU/CPU when an NPU isn’t available.
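The per-operator routing and fallback described above can be pictured with a minimal sketch. This is not NexaML’s implementation: the backend names, the per-device operator support sets, and the `route_ops` helper are all hypothetical, chosen only to illustrate “prefer NPU, then GPU, then CPU” for each operator.

```python
from typing import Dict, List, Set

# Hypothetical preference order: most efficient accelerator first, CPU last.
PREFERENCE: List[str] = ["npu", "gpu", "cpu"]


def route_ops(ops: List[str], supported: Dict[str, Set[str]]) -> Dict[str, str]:
    """Assign each operator to the first preferred backend that can run it.

    `supported` maps a backend name to the operator types it supports on this
    device; a backend missing from the dict is treated as absent. The CPU is
    assumed to run every operator, so it is the final fallback.
    """
    plan: Dict[str, str] = {}
    for op in ops:
        for backend in PREFERENCE:
            if backend == "cpu" or op in supported.get(backend, set()):
                plan[op] = backend
                break
    return plan


# A newer phone whose NPU lacks one operator vs. an older phone with no NPU.
ops = ["matmul", "softmax", "custom_rope"]
new_phone = {"npu": {"matmul", "softmax"}, "gpu": {"matmul", "softmax", "custom_rope"}}
old_phone = {"gpu": {"matmul"}}

print(route_ops(ops, new_phone))  # {'matmul': 'npu', 'softmax': 'npu', 'custom_rope': 'gpu'}
print(route_ops(ops, old_phone))  # {'matmul': 'gpu', 'softmax': 'cpu', 'custom_rope': 'cpu'}
```

The same app-level call produces a different plan per device, which is the sense in which “same app code” can run everywhere while speed still depends on the hardware.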

NexaSDK for Mobile

@masump We support Android with the Snapdragon Gen4 NPU (Samsung S25), and iOS & macOS (iPhone 12+). Performance is consistent across all compatible devices. On devices without NPU support, you can use the GPU & CPU version of the SDK.

Gainy

@zack_learner Congrats! Much anticipated. I’ve been waiting for an SDK like this with dynamic LLM loading. Please add text/image generation examples for Qwen to the docs. They’ll be very popular.

NexaSDK for Mobile

@anton_gubarenko Yes, we support Qwen models; see: https://docs.nexa.ai/nexa-sdk-android/overview#supported-models

Qwen3-4B is supported on PC and mobile, and Qwen3VL is supported on PC.

Jinna.ai

Local AI models are definitely the future! I’m wondering how you price this product. Is it free? Pricing something that runs locally is quite tricky.

NexaSDK for Mobile

@nikitaeverywhere Yes, NexaSDK is free; we only charge large enterprises that adopt it for NPU inference.

NexaSDK for Mobile

@nikitaeverywhere Yes this product is free for you to use! We believe local AI will be in every device in the future. Please feel free to let me know your feedback.

DeepTagger

Very impressive! So, you repackage models to make them compatible with different devices? What is `NEXA_TOKEN` needed for? Maybe you could quickly explain how it works and which models are available?

NexaSDK for Mobile

@avloss We use an internal conversion pipeline and quantization algorithm to make models compatible with different devices. For NPU inference on PC, `NEXA_TOKEN` is needed the first time to validate the device, since NPU inference is free only for individual developers.
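Nexa’s conversion pipeline and quantization algorithm are internal and not described in this thread. As a generic illustration of what weight quantization does, here is a sketch of plain symmetric per-tensor int8 quantization, a common textbook scheme that is not necessarily what Nexa uses; `quantize_int8` and `dequantize` are hypothetical helper names.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8: scale = max|w| / 127, q = round(w / scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero when every weight is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
print(q, scale)
# Rounding error per weight is bounded by about half a quantization step.
print(max(abs(a - b) for a, b in zip(weights, approx)))
```

Storing 8-bit codes plus one scale instead of 32-bit floats cuts weight memory roughly 4×, which is one reason quantized models fit and run well on phone NPUs.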

Nexa SDK

On-device is clearly the right answer for anything touching real user context — notes, messages, photos, screen content. Curious to see how teams use this for:

screen-aware copilots

offline multimodal assistants

privacy-sensitive workflows

...

NexaSDK for Mobile

@llnx Exactly! On-device AI will be powering every app by 2030!

NexaSDK for Mobile

@llnx I couldn’t agree more, thanks!

FuseBase

Nice work, team 👏 How deeply do you integrate with Apple’s NPU: is this Core ML–based or a custom runtime?

NexaSDK for Mobile

@kate_ramakaieva We use a custom pipeline and built our inference engine ourselves. We only leverage some low-level Core ML APIs.

Fish Audio

NexaSDK for Mobile

@hehe6z Thank you, and we look forward to more feedback!

GNGM

What types of models are supported today (e.g., language, vision, speech), and how easy is it to bring your own model?

NexaSDK for Mobile

@polman_trudo We support language (LLMs), vision (VLMs, CV models), and speech (ASR models). For enterprise customers, our SDK includes a converter for bringing your own model.

NexaSDK for Mobile

@polman_trudo We support almost all model types and tasks: vision, language, ASR, and embedding models. NexaSDK is the only SDK that supports the latest state-of-the-art models on NPU, GPU, and CPU. Bringing your own model will be easy, as we will release an easy-to-use converter tool soon.