NeuroGrid

Turn idle GPUs into cash. Get affordable AI for everyone.

40 followers

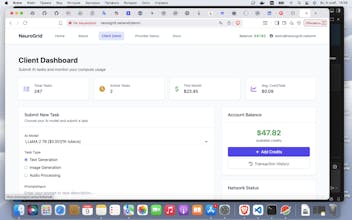

NeuroGrid: Get GPU-powered AI inference at a fraction of the cost. Tired of crazy cloud bills? We’ve built a decentralized network that taps into idle GPUs worldwide. Run popular open-source models like LLaMA and Stable Diffusion via a simple API. It's fast, secure, and up to 80% cheaper than hyperscalers. 🚀 Special for PH: Get 1,000 free inference tasks + a lifetime 15% discount.

Govar – English speaking app

Congrats on the launch! Awesome product, exactly what we were looking for!

@dzianis_yatsenka Thank you!

Swytchcode

Yes, people really do get crazy cloud bills. Would love to try a decentralized network.

Is it as fast and reliable as traditional services?

Would love to connect and learn more.

@chilarai Speed will depend on how many companies contribute their idle resources to the network.

Swytchcode

@mikalai Nice, would love to connect with you if you're open to mutual marketing partnerships :)

@chilarai We can connect.

Swytchcode

@mikalai If you send me a LinkedIn message or connection request, I'll be happy to discuss.

Founder here - AMA! + Launch Offers Inside

Hey Hunters! I'm Mikalai, founder of NeuroGrid. We're super excited to share what we've been building.

The Problem We're Solving:

GPU costs are insane, and they're blocking innovation. Meanwhile, millions of powerful GPUs sit idle. It's a broken system.

Our Solution:

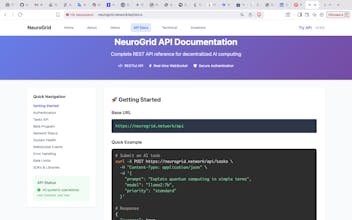

NeuroGrid is a decentralized network that connects these idle GPUs to developers who need affordable AI inference. Think Airbnb, but for AI compute power.

Exclusive Product Hunt Launch Offers:

* For Developers: Get 1,000 FREE inference tasks to start + a lifetime 15% discount.

* For GPU Owners: Earn double rewards for your first month + a special "Early Adopter" badge.

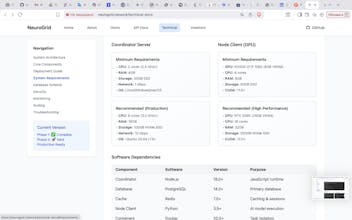

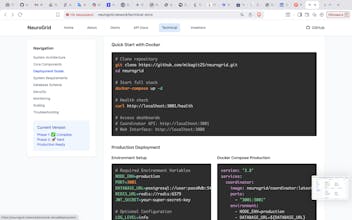

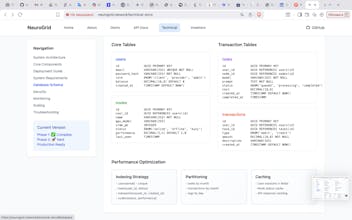

Our Tech Stack:

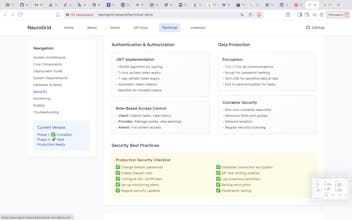

Node.js, Python, Docker, PostgreSQL. Models run in secure, isolated containers.

We'd love your feedback:

* What's the biggest pain point for you with AI inference costs?

* What models would you most want to see supported next?

* Any features you'd love us to build?

We're here all day - Ask Me Anything!