Allen Institute of Artificial Intelligence

AI for the Common Good.

163 followers

Objaverse is a massive dataset of 800k+ annotated 3D objects. It improves on present-day 3D repositories in scale, number of categories, and the visual diversity of instances within a category.

This is the 7th launch from Allen Institute of Artificial Intelligence.

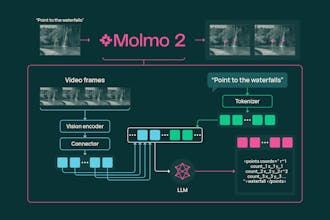

Molmo 2

Molmo 2, a new suite of state-of-the-art vision-language models with open weights, training data, and training code, can analyze videos and multiple images at once.

Free

Launch Team

Flowtica Scribe

Hi everyone!

Ai2 is back with a massive upgrade. If you liked the original Molmo for images, you are going to love this. Molmo 2 brings that same "pointing" capability to video.

The coolest part is how it handles space and time together. You don't just get a text summary; you get exact timestamps and coordinates. Ask it "how many times did the ball hit the ground?" and it points to every single instance.

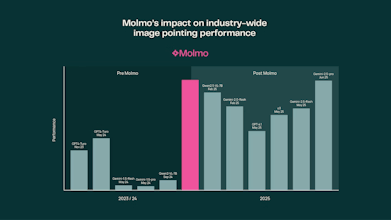

It reportedly outperforms Gemini 3 Pro in video tracking🤯, all while being trained on less than 1/8th of the data Meta used for PerceptionLM. That is some serious efficiency.

Congrats on the launch! Objaverse is an impressive contribution to the 3D and AI ecosystem. The scale and visual diversity of 800k+ annotated objects really stand out compared to existing repositories. This feels especially valuable for advancing embodied AI, robotics, and 3D generation research. Curious how you see the community contributing back or extending the dataset over time.

GraphBit

What’s impressive here isn’t just the benchmark gains, but the form of the output. Grounding language in precise spatial and temporal coordinates is what turns video understanding from analysis into something actionable. Open weights plus this level of temporal and spatial fidelity feels like a real step toward usable perception systems, not just better demos.