Theneo is an AI tool that generates Stripe-like API docs. Our ML models take care of tedious manual work in generating and publishing API docs, making them much easier to produce—less technically demanding for writers and less writing-intensive for developers.

This is the 3rd launch from Theneo.

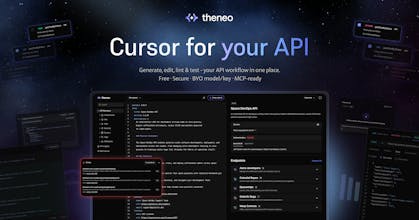

Cursor for your API

Go from idea to tested API fast. Generate or import OpenAPI, edit with AI, lint, preview docs, and run calls in one place. Insights highlight Design/DX/Security and AI-readiness. Privacy-first and secure with your own model/key. One-click MCP export.

Free


Theneo

Scrumball

@ana_robakidze The browser-based approach with local processing is smart for security-sensitive API work. Most developers are paranoid about uploading internal specs to external services.

How does the AI handle complex nested schemas or circular references? Those are usually where automated OpenAPI generation breaks down and produces specs that validate but don't actually represent the real API behavior.

Also curious about the MCP export functionality - can it generate agent-compatible schemas for APIs that weren't originally designed with LLM consumption in mind, or does it mainly work for straightforward REST endpoints?

Theneo

@alex_chu821 Great questions. We handle complex nested schemas and circular references through cycle detection and fallback strategies. When the editor encounters a circular dependency, it falls back to generic types to prevent infinite loops while keeping the schema valid.
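To make the cycle-detection idea concrete, here is a minimal sketch of one way to do it, not Theneo's actual implementation. It inlines `$ref` pointers and, when a reference is already being expanded on the current path, substitutes a generic `object`. The `Schema` shape and the `inlineRefs` helper are hypothetical and cover only a tiny subset of OpenAPI.

```typescript
// Hypothetical, trimmed-down schema shape; real OpenAPI schemas have many more keywords.
type Schema = {
  $ref?: string;
  type?: string;
  properties?: Record<string, Schema>;
  items?: Schema;
};

type Components = Record<string, Schema>;

// Inline every $ref. If a ref is already being expanded on the current path
// (a cycle), return a generic object instead of recursing forever.
function inlineRefs(schema: Schema, components: Components, path: string[] = []): Schema {
  if (schema.$ref !== undefined) {
    const refName = schema.$ref.replace("#/components/schemas/", "");
    if (path.indexOf(refName) !== -1) {
      return { type: "object" }; // generic fallback breaks the cycle
    }
    const target = components[refName];
    if (target === undefined) {
      return { type: "object" }; // unknown ref: same conservative fallback
    }
    return inlineRefs(target, components, path.concat(refName));
  }

  const out: Schema = {};
  if (schema.type !== undefined) {
    out.type = schema.type;
  }
  if (schema.properties !== undefined) {
    const props: Record<string, Schema> = {};
    for (const key of Object.keys(schema.properties)) {
      const child = schema.properties[key];
      if (child !== undefined) {
        props[key] = inlineRefs(child, components, path);
      }
    }
    out.properties = props;
  }
  if (schema.items !== undefined) {
    out.items = inlineRefs(schema.items, components, path);
  }
  return out;
}

// A self-referencing tree node: Node.children is an array of Node.
const components: Components = {
  Node: {
    type: "object",
    properties: {
      value: { type: "string" },
      children: { type: "array", items: { $ref: "#/components/schemas/Node" } },
    },
  },
};

const resolved = inlineRefs({ $ref: "#/components/schemas/Node" }, components);
console.log(JSON.stringify(resolved, null, 2));
```

In this sketch the nested `children.items` entry comes out as a plain `object`, while the outer `Node` keeps its real properties. A production tool would more likely keep the `$ref` in place where the output format allows it, so the model stays meaningful.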

To avoid specs that validate but misrepresent the real API, the system uses multiple layers: AI prompts specifically designed to generate realistic, production-ready specs (not just valid ones), Spectral linting rules that enforce real-world best practices, and comprehensive testing against actual API scenarios.

As for the MCP export, it is designed to work with any REST API, regardless of whether it was originally built for LLM consumption. It converts complex OpenAPI schemas to agent-compatible formats, handles various authentication schemes, and includes filtering options to exclude problematic endpoints. It's particularly good at taking legacy or inconsistent APIs and making them work with modern AI agents.
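As a rough illustration of what such a conversion involves, here is a minimal sketch that maps OpenAPI operations to MCP-style tool definitions (a name, a description, and a JSON Schema for the input) and filters out unwanted endpoints. It is an assumption-laden sketch, not the editor's export pipeline: the `Endpoint` shape, the `toTool` and `exportTools` helpers, and the default "skip deprecated" filter are all hypothetical.

```typescript
// Hypothetical, trimmed-down OpenAPI shapes used only for this sketch.
type Parameter = { name: string; required?: boolean; schema: { type: string } };
type Operation = {
  operationId?: string;
  summary?: string;
  deprecated?: boolean;
  parameters?: Parameter[];
};
type Endpoint = { method: string; path: string; operation: Operation };

// An MCP tool is described by a name, a description, and a JSON Schema for its input.
type McpTool = {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string }>;
    required: string[];
  };
};

function toTool(endpoint: Endpoint): McpTool {
  const { method, path, operation } = endpoint;
  // Older or auto-generated specs often lack operationId, so derive a
  // stable name from method + path as a fallback.
  const derived = `${method}_${path}`
    .replace(/[^A-Za-z0-9]+/g, "_")
    .replace(/^_+|_+$/g, "");
  const properties: Record<string, { type: string }> = {};
  const required: string[] = [];
  for (const param of operation.parameters ?? []) {
    properties[param.name] = { type: param.schema.type };
    if (param.required === true) {
      required.push(param.name);
    }
  }
  return {
    name: operation.operationId ?? derived,
    description: operation.summary ?? `${method.toUpperCase()} ${path}`,
    inputSchema: { type: "object", properties, required },
  };
}

// The exclude predicate stands in for the "filtering options" mentioned above.
function exportTools(
  endpoints: Endpoint[],
  exclude: (op: Operation) => boolean = (op) => op.deprecated === true,
): McpTool[] {
  return endpoints.filter((e) => !exclude(e.operation)).map(toTool);
}

const endpoints: Endpoint[] = [
  {
    method: "get",
    path: "/users/{id}",
    operation: {
      summary: "Fetch a user",
      parameters: [{ name: "id", required: true, schema: { type: "string" } }],
    },
  },
  {
    method: "post",
    path: "/legacy/export",
    operation: { operationId: "legacyExport", deprecated: true },
  },
];

const tools = exportTools(endpoints);
console.log(JSON.stringify(tools, null, 2));
```

Here the deprecated endpoint is dropped and the remaining operation, which has no `operationId`, gets the derived name `get_users_id`. Authentication handling and request bodies are left out to keep the sketch short.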

cc'ing @saba_gventsadze, our amazing engineer, who did a lot of work on this

Scrumball

@saba_gventsadze @ana_robakidze The cycle detection with graceful fallback to generic types is a solid approach. That's exactly where most schema generators fail - they either crash or create infinite loops instead of handling circular dependencies intelligently.

The multi-layer validation approach sounds comprehensive. The distinction between 'valid' and 'production-ready' specs is crucial - we've seen too many auto-generated APIs that technically validate but are unusable in practice.

Thanks for the technical breakdown. Definitely going to give the MCP export feature a test run with some of our legacy API documentation.

@ana_robakidze @alex_chu821 Thanks for looping me in! 🙌 A big focus for me while building this was making sure we don’t just “stop at validity.” Like you mentioned, specs that technically validate but don’t model the real behavior cause more headaches than they solve.

For circular references, the fallback to generics is just the safety net, but in many cases we can actually preserve the intent of the schema by restructuring it or introducing reusable components ($refs) intelligently. That way, you still get meaningful models instead of everything collapsing into `object`.

On the MCP export side, we wanted it to be useful even when working with “messy” APIs: the kind with inconsistent naming, mixed styles, or half-documented auth flows. The export pipeline normalizes those quirks and makes sure the resulting schema is something agents can consume without brittle workarounds.

@ana_robakidze congratulations on the launch! 👏🏻👏🏻👏🏻

Theneo

@theano_dimitrakis1 thanks Theano, great name

Theneo

@theano_dimitrakis1 Thank you Theano, for supporting Theneo, haha!!

Andi

@ana_robakidze This is such an awesomely useful idea!! We're working with an API in private beta atm and this is just what I need to get together everything for it. Excited to try it out!

Theneo

@jem_white Thank you so much for your support! We hope you enjoy using the product, and we look forward to hearing your feedback!

Onyx

I wrote our docs in Mintlify + Cursor + Claude Code. Our OpenAPI was a mess since it's auto-generated from our FastAPI backend. However, I think ~5 prompts to Cursor/CC was enough for publishing. Does this add to that flow? Maybe this isn't meant for docs built with Mintlify?

Reference: https://docs.onyx.app

Theneo

@wenxi Great question. Our main product is a competitor to Mintlify (we were in the same YC batch, too :D). You can have the same docs as you have in Mintlify without going through all the pain you mentioned, and you can use our docs platform and the API editor seamlessly together. We can share more details, so let's discuss whether this would make your flow better.

I'm curious: I read in the description that there is no database and nothing is saved. How does this work, then?

Theneo

@salome_demetrashvili Hey Salome, great question. We only do in-browser processing: all the features run entirely in your browser. Temporary data can live in memory or in optional local storage on your device, not on our servers. I am cc'ing our amazing engineer @saba_gventsadze, who will share even more details.

@salome_demetrashvili @ana_robakidze Hey! Thanks for the question. As one of the engineers who built the Theneo API editor, I can tell you that local data persistence is actually one of the more sophisticated parts of our codebase. We use a multi-layered approach: the main editor content is auto-saved to local storage under a dedicated key, with a delay of a few seconds to avoid performance issues. More complex state, such as AI settings, rules, and chat history, is managed through Zustand stores with middleware that automatically syncs the data with local storage.
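A minimal sketch of the delayed auto-save idea, assuming a simple debounce: every edit resets a timer, and only the latest content is written once the timer fires. This is an illustration, not the editor's code. The `DebouncedSaver` class, the `editor:content` key, and the 2000 ms delay are hypothetical, and `MemoryStore` stands in for `window.localStorage` so the sketch also runs outside a browser. For the store-backed state, Zustand ships a `persist` middleware that covers the "sync with local storage" part; whether the editor uses that exact middleware is an assumption.

```typescript
// Minimal storage interface; in a browser, window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// In-memory stand-in for localStorage so the sketch runs anywhere.
class MemoryStore implements KeyValueStore {
  private data: Record<string, string> = {};

  getItem(key: string): string | null {
    const value = this.data[key];
    return value === undefined ? null : value;
  }

  setItem(key: string, value: string): void {
    this.data[key] = value;
  }
}

class DebouncedSaver {
  private timer: ReturnType<typeof setTimeout> | undefined;
  private pending: string | undefined;

  constructor(
    private store: KeyValueStore,
    private key: string,
    private delayMs: number,
  ) {}

  // Call on every edit. Each call restarts the timer, so a burst of
  // keystrokes results in a single write of the latest content.
  schedule(content: string): void {
    this.pending = content;
    if (this.timer !== undefined) {
      clearTimeout(this.timer);
    }
    this.timer = setTimeout(() => this.flush(), this.delayMs);
  }

  // Write immediately, e.g. before the tab closes.
  flush(): void {
    if (this.timer !== undefined) {
      clearTimeout(this.timer);
      this.timer = undefined;
    }
    if (this.pending !== undefined) {
      this.store.setItem(this.key, this.pending);
      this.pending = undefined;
    }
  }
}

const store = new MemoryStore();
const saver = new DebouncedSaver(store, "editor:content", 2000);

saver.schedule("openapi: 3.0.0");
saver.schedule("openapi: 3.1.0"); // replaces the pending write
const beforeFlush = store.getItem("editor:content"); // nothing written yet
saver.flush();
const afterFlush = store.getItem("editor:content"); // latest content only
console.log(beforeFlush, afterFlush);
```

The explicit `flush()` is a design choice for this sketch: it makes the pending write testable without waiting for the timer and gives a hook for saving on page unload.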

@ana_robakidze @saba_gventsadze

Thank you for the comprehensive answers! Could you also tell me about API keys? I see this option in settings – how are they stored, and for how long?

@ana_robakidze @salome_demetrashvili We let users bring their own API keys for the editor's AI features. To store them securely, we encrypt each key with a user-provided passphrase, which you are prompted for when adding the key. On top of that, there is a second layer of encryption that uses a device-specific pepper derived from browser characteristics. So even if someone gains access to your local storage, they would need not only your passphrase but also the specific device, plus the other encryption layers, to get hold of your API key. Keys stay in your local storage indefinitely unless you clear it or reset your settings.

We've been using Theneo for 3+ years already. Absolutely love the product and the team!

Theneo

@givib as always thanks Givi and amazing team at Klipy for your support

Thrilled to finally share what our team has built! 💖

I got to shape the design and experience ✨ Can’t wait to hear what you think

Theneo

@natia_khitarishvili2 Without your magic, we would be doomed. Thanks for all of your hard work, you are simply a wizard.

Really like this approach! The fact that everything runs in the browser and nothing is stored makes it feel both fast and secure. One thing I’m curious about: how well does the AI handle edge cases in larger, messy OpenAPI specs? I imagine a lot of teams will throw pretty complex files at it.

Congrats on the launch 🚀 API docs are one of those invisible pain points that compound over time. I love how Theneo tackles the time sink, but I’m curious how you’re approaching trust & accuracy at scale. Tools like Mintlify/ReadMe still struggle with keeping examples current. Would “verified by engineer” badges or schema import (OpenAPI/Postman) be on your roadmap? That feels like a game-changer for reducing the dreaded “it worked in docs but failed in prod” tickets.

Theneo

@abhishek_dhama Abhishek, good points. This release isn't just about docs, though. It's more about API design, API changes, insights, and MCP, so it brings the whole workflow into one place.