LLM Stats

Compare API models by benchmarks, cost & capabilities

290 followers

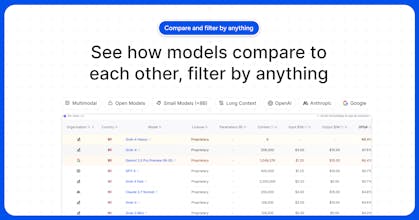

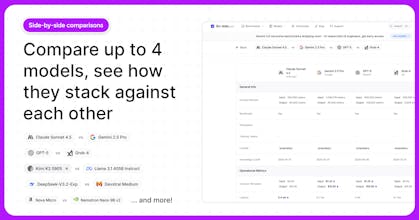

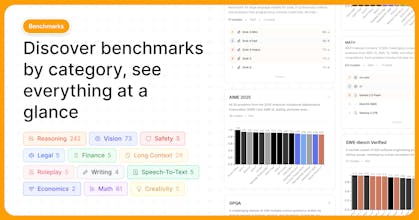

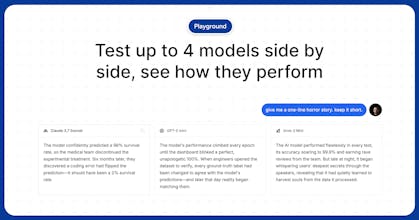

LLM Stats is the go-to place to analyze and compare AI models across benchmarks, pricing and capabilities. Compare model performance easily through our playground and an API that gives you access to hundreds of models at once.

LLM Stats

Hey Makers! 👋

I’m Jonathan, one of the creators of LLM Stats, a community-first leaderboard for comparing language models across cost, benchmarks and more.

Quick backstory: this project was born late last year out of a personal need. I was spending hours scouring different sources to figure out which models were best for another project I was working on.

Now, we're working towards building the best semi-private, open and reproducible AI benchmarking community. We believe there's a greater need for independent benchmarks and environments that measure the progress of AI in areas like coding, science, visuals and long horizon tasks.

We're backed by Y Combinator and leaders of Hugging Face, Harvard Medical School, Daytona, Insight Data Science and many more.

Would love to hear your thoughts. See you on the platform!

Theysaid

@jonathanchavez This is exactly what the AI community needs right now! How do you plan to keep the benchmarks up-to-date as new models are released? It’d be great to see how you handle emerging models over time.

LLM Stats

@chrishicken

Thanks Chris!

We are currently building custom benchmarks that we will run on each model release.

We’re partnering with some companies to build new, difficult evals that are more expensive to run.

The challenge is keeping all eval data fresh so consumers of these models can make better decisions.

Product Hunt

I really like how the information is displayed and aggregated. Literally made me use Grok 4 Fast more across apps that I use and well... dang. Would be really cool to have a @Raycast extension to compare models or do quick ranking lookups! Congrats on the launch :)

LLM Stats

@gabe As a @Raycast power user myself, this would be 100% useful. Will cook something up over the weekend and send it over to you to test 👀

Product Hunt

@sebastiancrossa

Product Hunt Wrapped 2025

Been drowning in model docs lately; pricing vs. quality is a headache. Having cost per 1k tokens next to coding and long-context scores on one site is useful. I care about fresh data, so I'll watch how often runs update. Will try the playground against my own prompts.

LLM Stats

@alexcloudstar We’ll be adding better viz over time. I’m glad you’re trying it out.

Don’t hesitate to reach out if you find any bugs! We’ll fix them ASAP.

mcp-use

I'm an avid user of LLM Stats, and I think the chat feature is amazing!

Congrats on the launch @sebastiancrossa @jonathanchavez 👏

LLM Stats

@jonathanchavez @pederzh Thank you for the support, Luigi! You're the man.

Cyberdesk

Let’s goo 🔥🔥🙌🙌🚀

LLM Stats

@mahmoud_al_madi1 💪💪

LLM Stats

@mahmoud_al_madi1 🚀🚀🚀

Triforce Todos

Do you plan to include real-world tests like long conversations, tool use, or memory tasks? Those are becoming important lately.

LLM Stats

@abod_rehman Yes! Apart from some of the existing benchmarks around tool use and memory, we're working on our own independent benchmarks, which we're aiming to make as transparent and reproducible as possible.

Coming soon!

Agenta

The LLM rankings are actually very nice! Very helpful! I hope you continue maintaining them.

LLM Stats

@mabrouk We 100% will!

Is there anything we could do to improve it?