Launched this week

Kalynt

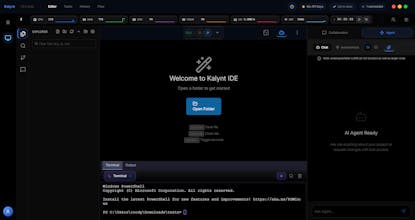

Local AI IDE. Your code stays yours.

3 followers

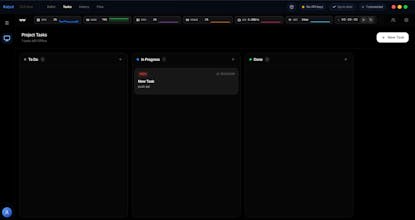

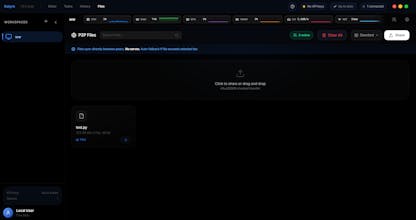

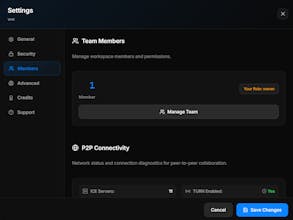

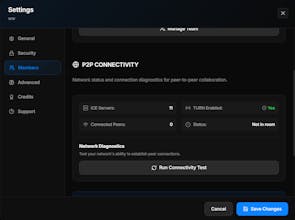

Kalynt is a privacy-first AI IDE that runs entirely on your machine. Your code never leaves your computer unless you explicitly choose to share it. Unlike Cursor or GitHub Copilot, Kalynt runs local LLMs (Qwen, Devstral 24B) directly on your hardware, even with 8GB of RAM. Real-time P2P collaboration uses encrypted signals, not cloud servers. Built with AIME (a context management engine), CRDTs, and WebRTC. Open-core (AGPL-3.0). Beta v1.0: shipping fast and iterating based on feedback.

Free
