Ship AI that works, not AI that "feels right"

🛠 Prototype high-quality prompts and Agents from our UI or your codebase.

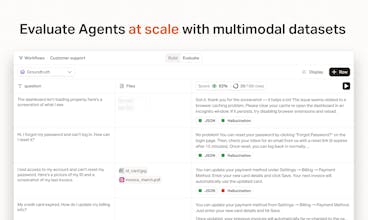

✅ Test & Evaluate LLM outputs through a dataset of scenarios.

🚀 Deploy AI features confidently with our SDK.

📊 Monitor & Optimize your AI with automated tracking & insights.

💡 Collaborate with your team for better AI-driven products.

No more guesswork, just better AI, faster.

This is the 2nd launch from Basalt.

Basalt Agents

Basalt, #1 AI observability tool for teams, is launching its brand-new Agent Builder: prototype, test, and deploy complex AI flows composed of multiple prompts, and run them through a dataset of scenarios.

Free Options


Blocks

Guys! So happy to have you back on Product Hunt. The platform has powered up so much, congrats!

Basalt

@vicgrss thanks, Vic!!

Triforce Todos

This looks amazing, François! 👀 How does the evaluation part work across different models?

UI Bakery

Great launch! How does Basalt prevent overfitting on the evaluation dataset, e.g. if prompts get tuned too closely to the test cases and lose generality?

Basalt

@vladimir_lugovsky great question!! To avoid overfitting, we recommend creating a dynamic dataset, meaning that you continuously enrich it with new test cases from your logs (something you can do from Basalt or programmatically)!
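For readers wondering what "continuously enrich it with new test cases from your logs" could look like in practice, here is a minimal sketch in plain Python. It does not use the Basalt SDK. The record schema (an `input` field and a `source` tag), the helper names `case_key` and `enrich_dataset`, and the `max_new` cap are all assumptions made for illustration. The idea is simply to add only log entries the dataset has not seen yet, so the evaluation set keeps drifting toward real traffic instead of staying frozen.

```python
import hashlib
import random


def case_key(prompt_input: str) -> str:
    """Stable key for spotting duplicates: hash of the case-folded, whitespace-normalized input."""
    normalized = " ".join(prompt_input.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def enrich_dataset(dataset, logs, max_new=50, seed=0):
    """Return a new dataset extended with up to `max_new` unseen log entries.

    `dataset` and `logs` are lists of dicts with an "input" field
    (hypothetical schema, not Basalt's actual format).
    """
    seen = {case_key(case["input"]) for case in dataset}
    candidates = []
    for entry in logs:
        key = case_key(entry["input"])
        if key not in seen:
            seen.add(key)  # also filters repeats within the logs themselves
            candidates.append({"input": entry["input"], "source": "logs"})
    # Shuffle with a fixed seed so the sampled subset is reproducible.
    random.Random(seed).shuffle(candidates)
    return dataset + candidates[:max_new]


dataset = [{"input": "Summarize this invoice", "source": "manual"}]
logs = [
    {"input": "summarize   this INVOICE"},        # duplicate after normalization
    {"input": "Translate this email to German"},
    {"input": "Translate this email to German"},  # repeated log entry
]
dataset = enrich_dataset(dataset, logs)
print(len(dataset))  # prints 2
```

Capping the number of new cases per run and tagging their source keeps the dataset reviewable; expected outputs for the new cases would still need to be added by a person or an evaluator.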

Happy to discover the product! No more Excel sheets for evals ;))

Basalt

@steffanb exactly!! Thanks :)

Congrats on the launch! Curious if there's any example workflow with multimodal (video, speech) results?

This looks solid! The evaluation-first approach is exactly what's needed. Curious how you handle workflows that need human approval gates between steps: not just eval metrics, but hard stops for review before continuing?