Launched this week

Hyta

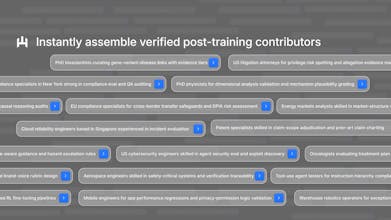

Forge your elite AI training force

759 followers

Forge your elite AI training force

759 followers

Hyta powers AI post-training teams with always-on pipelines of high-quality specialized human intelligence and trusted signal tracking, so frontier capabilities can compound across industries.

Hyta

Hyta powers AI post-training teams with always-on pipelines of high-quality specialized human intelligence and trusted signal tracking, so frontier capabilities can compound across industries.

Really resonate with this framing. The shift from post-training as a one-off project to something continuous and org-specific feels inevitable, especially as models are embedded deeper into real workflows.

Huge congrats on the launch — love how Hyta is systematizing trusted, high-signal human feedback for AI post-training at scale.

Thanks a lot!

Yes totally agree!

This feels like one of those inflection points where the process matters more than the artifact. The teams that internalize that early will likely pull ahead over time.

Awesome launch

Quick question , after today's Product Hunt traffic , how are you planning to build early user reviews and long-term credibility

Interesting framing on post-training as continuous rather than a one-off. We've been using AI heavily in building our product and the "trust reset" problem is real - every time you switch contexts or models, institutional knowledge gets lost. How do you think about the quality-speed tradeoff? The most valuable feedback often comes from domain experts who are also the busiest.

Interesting product. We use memory for this. And we have two types of memory: one within the chat and one across the entire account to personalize future chats. By the way, in Europe this isn’t simple — you need to comply with GDPR )

Persistent contributor profiles across training runs is the part that usually breaks down. Most teams rebuild annotator pools from scratch each project because there's no portable trust layer. If Hyta lets domain experts compound reputation across orgs and model versions, that solves a real coordination problem. Would be interesting to see how you handle disagreements between high-trust raters on ambiguous samples.