EmotionSense Pro

Privacy-first emotion AI for Google Meet in real time

137 followers

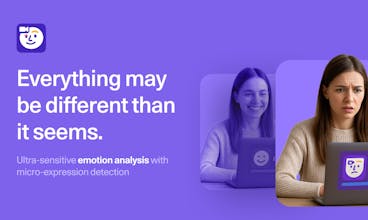

EmotionSense Pro is a Chrome extension that detects emotions in Google Meet in real time using AI, without sending data to servers. Ideal for UX researchers, recruiters, and educators seeking ethical, local emotion analytics.

EmotionSense Pro

@jaber23 Haha, that might be the boldest use case we’ve heard so far! 😄

minimalist phone: creating folders

@jaber23 :D LOL

EmotionSense Pro

@shahriardgm Great question!

EmotionSense Pro currently focuses on facial microexpressions and vocal tone, rather than text-based analysis, and is designed to read non-verbal emotional signals during live one-on-one calls.

Mixed emotions and subtle cues are indeed challenging, but we prioritise real-time shifts and micro-changes, which help identify moments of hesitation, confusion, or engagement. We’re not claiming 100% accuracy (emotion is nuanced!), but the goal is insight over judgment, helping professionals get a richer picture of what’s happening beyond words.

Is there any way to measure how accurate it is? I'm wondering whether different people show emotions differently.

EmotionSense Pro

@bhrigu_sr Great question! That’s why EmotionSense Pro doesn’t just rely on one standard model. It focuses on small facial movements and tone patterns that are rooted in human anatomy. Instead of guessing based on generalizations, it looks at what’s actually happening in real time.

That said, it’s not claiming 100% accuracy (no emotion AI tool should). The goal is to offer an extra layer of insight during interviews or meetings: not to replace human judgment, but to augment it.

We’re working on making results more transparent over time, including confidence levels and session feedback.

Thanks for bringing up such an important point!

I like the idea a lot. In what concrete cases could we use it? Best

EmotionSense Pro

@codewithguillaume Thanks! That means a lot. One of the things we’ve noticed is how often people miss subtle emotional cues in virtual calls, especially during interviews, demos, sales, or even just mentoring chats.

EmotionSense Pro helps surface those signals so you can better understand how the other person is feeling in the moment. It’s been surprisingly helpful in those everyday conversations where tone and body language are easy to overlook. Curious to see where people take it.

Looks good. Does it also take into account cultural differences, where the same expression or tone could have a different meaning?

EmotionSense Pro

@manu_goel2 That’s a really important point. EmotionSense Pro is trained on microexpressions rooted in human facial anatomy (like a Duchenne smile or brow furrowing), which are generally consistent across cultures due to shared muscle structures.

That said, interpretation can still vary based on social context, tone, or cultural norms. While our model captures the physical cues reliably, we’re actively working on refining contextual awareness, potentially allowing region-specific calibration in future updates. It’s a delicate balance between universal cues and cultural nuance, and we’re taking a research-informed approach to evolve it responsibly.

@agshin_rajabov Agree!

I would like to know whether this plugin can be used in meetings outside Google Meet. I have a training and learning scenario where users practice by recording videos. If we could obtain the user's emotional indicators from those recordings, I believe it would be very helpful for their learning. I am also curious about the pricing model for this feature.

EmotionSense Pro

@new_user__14520238f9d2eb1a0d98a2c Good question!

Currently, EmotionSense Pro is built specifically for Google Meet as a Chrome extension, leveraging the structure and permissions of Meet calls to analyze emotions in real time.

Non-Google Meet usage (like video recordings or other platforms) is on our roadmap. We’re actively working on support for Zoom and Microsoft Teams as the next integrations. 🙌