Launching today

Yavy

Turn any website into an MCP server for AI

59 followers

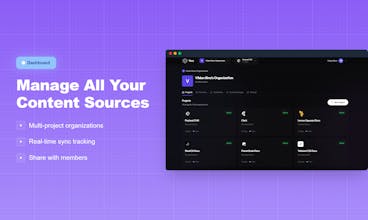

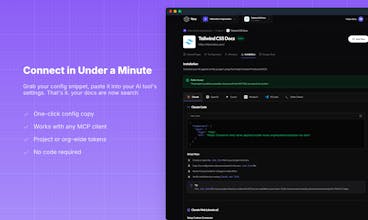

Yavy turns any public website into an MCP server. Paste a URL, and we crawl, index, and serve your content to AI tools like Claude, Cursor, and any MCP-compatible assistant. No more copy-pasting docs into chat. No more hallucinated answers. Your AI gets accurate, up-to-date information from your actual content. Perfect for developer docs, help centers, blogs, and knowledge bases. Set up in minutes - no code required. Organize multiple sources and share access with your team.

Free Options

Yavy

this is super cool, i've been dealing with mcp hell lately for my own startup and honestly the setup process is brutal. curious how you guys are handling the crawling and indexing - are you doing real-time updates when sites change or is it more of a snapshot thing? also wondering about rate limits and how you deal with sites that don't want to be crawled. we've been trying to connect our agents to docs and wikis and it's way harder than it should be, so really excited to see someone tackling this properly. congrats on the launch!

Yavy

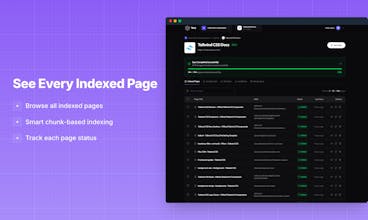

@victor_eth Really good question. On crawling: we use staleness-based snapshots, not real-time updates. Each page has a refresh frequency (daily by default). We only re-crawl what's actually stale, and we skip re-indexing if the content hash hasn't changed. On rate limits: 100ms delays between requests, a max of 5 concurrent jobs per project, and depth/URL caps, and we identify ourselves with a custom User-Agent. On robots.txt: yes, we respect it. We check for Sitemap directives first and prefer using the site's own sitemap over recursive crawling.

Would love to hear more about your use case!
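For readers who want to picture the approach described in the reply above, here is a rough Python sketch of staleness-based re-crawling, hash-based skipping of re-indexing, and reading Sitemap directives from robots.txt. It is not Yavy's actual code: the `Page` class, the function names, and the choice of SHA-256 are all assumptions made for illustration. Only the daily default refresh and the overall behavior come from the reply.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Page:
    """Hypothetical per-page crawl record (not Yavy's real schema)."""
    url: str
    last_crawled: Optional[datetime] = None
    content_hash: Optional[str] = None
    refresh_every: timedelta = timedelta(days=1)  # "daily by default"


def is_stale(page: Page, now: datetime) -> bool:
    """A page is stale if it was never crawled or its refresh window has passed."""
    if page.last_crawled is None:
        return True
    return now - page.last_crawled >= page.refresh_every


def needs_reindex(page: Page, body: bytes, now: datetime) -> bool:
    """Record a fresh crawl; return True only if the content hash changed."""
    digest = hashlib.sha256(body).hexdigest()  # SHA-256 is an assumption
    changed = digest != page.content_hash
    page.last_crawled = now
    page.content_hash = digest
    return changed


def sitemap_urls(robots_txt: str) -> List[str]:
    """Collect the URLs listed in `Sitemap:` lines of a robots.txt body."""
    urls = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls
```

In this sketch a crawler would call `is_stale` to decide whether to fetch a page at all, then `needs_reindex` on the fetched body to decide whether the index needs updating. If `sitemap_urls` returns anything, those sitemaps would be used in preference to following links recursively.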

Cloudthread

Very cool! Will it automatically collect the content of all subdirectories (e.g. starting from a parent docs page, collect the content of all docs)? Doing this manually is a huge pain.

Yavy