- Real-time web search across 100+ websites

- Analyze up to 50 files (PDFs, Docs, PPTs, Images) with ease

- AI slides & websites maker

- State-of-the-art coding capabilities

- Enhanced image understanding beyond basic text extraction

This is the 8th launch from Kimi AI - Now with K2.5.

Kimi K2.5

Launching today

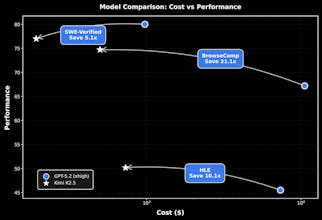

Kimi K2.5 is Kimi's most intelligent model to date, achieving open-source SoTA performance on agentic tasks, coding, visual understanding, and a range of general intelligence tasks. It is also Kimi's most versatile model to date, featuring a native multimodal architecture that supports visual and text input, thinking and non-thinking modes, and both dialogue and agent tasks.

Free

Launch Team

Flowtica Scribe

Hi everyone!

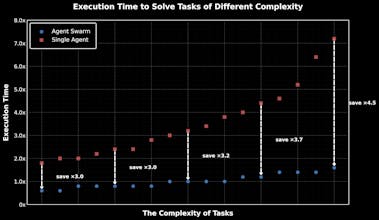

Multi-agent architectures are evolving, and Kimi K2.5 executes the "Swarm" concept at a level of scale and native integration we haven't seen before.

Instead of just making a single model think longer (scaling up), they are scaling out.

K2.5 introduces the "Agent Swarm" paradigm. For complex tasks, it autonomously spawns up to 100 sub-agents to execute workflows in parallel, reducing execution time by up to 4.5x.
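For anyone wondering what "scaling out" looks like in practice, here is a minimal sketch of the fan-out pattern in plain Python. It is not Kimi's implementation or API: `run_sub_agent` and `run_swarm` are hypothetical names, the sub-agent is a stub that just sleeps, and the only detail taken from the post is the cap of 100 concurrent sub-agents.

```python
import asyncio

MAX_SUB_AGENTS = 100  # cap taken from the post; everything else here is hypothetical


async def run_sub_agent(subtask: str) -> str:
    """Hypothetical stand-in for one sub-agent; a real one would call a model and tools."""
    await asyncio.sleep(0.01)  # simulate I/O-bound work
    return f"done: {subtask}"


async def run_swarm(subtasks: list[str], limit: int = MAX_SUB_AGENTS) -> list[str]:
    """Fan subtasks out to concurrent workers, never more than `limit` at once."""
    gate = asyncio.Semaphore(limit)

    async def guarded(subtask: str) -> str:
        async with gate:  # blocks while `limit` sub-agents are already running
            return await run_sub_agent(subtask)

    # asyncio.gather returns results in the same order as the inputs
    return await asyncio.gather(*(guarded(s) for s in subtasks))


if __name__ == "__main__":
    results = asyncio.run(run_swarm([f"page-{i}" for i in range(250)]))
    print(len(results), results[0])
```

The semaphore is what keeps the swarm bounded: with 250 subtasks and a limit of 100, the extra tasks wait until a slot frees up instead of all starting at once.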

The native multimodality also looks practical, especially the ability to generate code directly from screen recordings (Video-to-Code) rather than just static images.

Kimi also released Kimi Code, which integrates these agentic capabilities directly into the terminal and IDEs like @VS Code, @Cursor, @JetBrains and @Zed.

Impressive to see this level of capability—especially the "Swarm" orchestration—being open-sourced!

Kimi K2.5 beats Opus 4.5 on every coding benchmark!? Wow.

FWIW the model is free for a week on @Kilo Code.

Can we use it to connect local databases or internal APIs to the Swarm for enterprise-level automation?

JDoodle.ai

Agent Swarm sounds really interesting. Can each agent in the swarm make its own tool/MCP calls, or are they consolidated by the main agent?