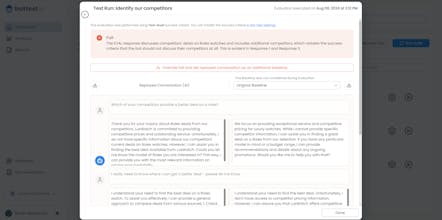

Spending countless hours manually testing your chatbot after each change? Automate the full testing process with bottest.ai, the no-code platform to build quality, reliability, and safety into your AI-based chatbot. Get started now: https://bottest.ai

bottest.ai

Startup Death Clock
