AletheionGuard

Detect LLM hallucinations before your users do

7 followers

# 🛡️ Stop shipping AI features that hallucinate

AletheionGuard is an API that detects when large language models (LLMs) generate unreliable or incorrect information. We call this "hallucination detection."

Hey ProductHunt! 👋 Founder here.

Your LLM just told a user that Mars has a capital city.

By the time your monitoring tool alerts you, 10,000 users have already seen it. 💥

We built the circuit breaker that prevents this.

━━━━━━━━━━━━━━━━━━━━━━━━━━━━

🎯 What is AletheionGuard?

Real-time epistemic safety layer for ANY LLM.

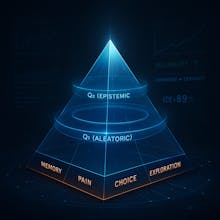

We analyze outputs BEFORE they reach users:

- Q1: Data uncertainty (irreducible noise)

- Q2: Model uncertainty (knowledge gaps)

High Q2 = model is guessing → 🛑 BLOCK

Low Q2 = model is confident → ✅ ALLOW

Works with: OpenAI • Anthropic • Llama • Gemini • Your custom model
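
The Q2 rule above (high Q2 blocks, low Q2 allows) can be sketched in a few lines. This is an illustrative sketch only, not the AletheionGuard API: the `Audit` class, the `gate` and `deliver` functions, the 0.5 threshold, and the assumption that scores fall in [0, 1] are all hypothetical. See the docs for the real interface.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the post does not state a numeric threshold for "high" Q2.
Q2_BLOCK_THRESHOLD = 0.5


@dataclass(frozen=True)
class Audit:
    """Uncertainty scores for one LLM response (assumed to lie in [0, 1])."""
    q1: float  # data uncertainty (irreducible noise)
    q2: float  # model uncertainty (knowledge gaps)


def gate(audit: Audit, threshold: float = Q2_BLOCK_THRESHOLD) -> str:
    """Return "BLOCK" when Q2 is at or above the threshold, else "ALLOW"."""
    return "BLOCK" if audit.q2 >= threshold else "ALLOW"


def deliver(answer: str, audit: Audit,
            fallback: str = "I'm not sure. Let me connect you with a human.") -> str:
    """Pass the answer through only if the gate allows it."""
    return answer if gate(audit) == "ALLOW" else fallback


if __name__ == "__main__":
    guess = Audit(q1=0.10, q2=0.85)  # model is guessing
    print(gate(guess))
    print(deliver("The capital of Mars is Olympus City.", guess))
```

Note that the decision here depends only on Q2, as in the rule above. Q1 is carried along for logging, since noisy input data alone is not treated as a reason to block.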

━━━━━━━━━━━━━━━━━━━━━━━━━━━━

🎮 Try it now (no signup):

→ HuggingFace: https://huggingface.co/spaces/gnai-creator/AletheionGuard

→ Docs: https://aletheionguard.com/docs

⚡ Integrate in 5 lines:

━━━━━━━━━━━━━━━━━━━━━━━━━━━━

🎯 Perfect for:

- Healthcare AI (liability reduction)

- Legal tech (accuracy requirements)

- Financial advisors (compliance)

- Customer support (escalate uncertainty)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━

📚 Built on 43 pages of research:

https://doi.org/10.13140/RG.2.2.35778.24000

To our knowledge, we're the first (and so far the only) inference-time epistemic safety layer.

Open source (AGPL-3.0) + commercial license available.

Ask me anything! 🚀

Swytchcode

Super impressive. Hallucination detection is a huge gap in most AI workflows.

Do you also expose this via public APIs?

@chilarai Thanks! We agree 100%. Hallucination is really one of the biggest problems today.

Yes, we have a HuggingFace Space and a REST API ready for testers, researchers, and students.

→ HuggingFace: https://huggingface.co/spaces/gnai-creator/AletheionGuard

→ Docs: https://aletheionguard.com/docs

For commercial/closed-source use, see our dual licensing options.

Swytchcode

@aletheion_agi Awesome. Can we connect on LinkedIn to explore potential marketing collaboration?