Reviews praise Claude Code for strong reasoning, long-context handling, and clean, style-aware patches that speed up refactors, tests, and frontend work. Makers credit it with faster iteration and smoother day-to-day development, and some say it powers their core systems. Others highlight reliable pair-programming, context that carries across files, and less manual work. Users echo the intuitive UX and reliable output, though a minority expected more agentic autonomy. Overall, sentiment centers on productivity, coherence on large codebases, and dependable, senior-like guidance.

Security tools have gotten good at spotting patterns. But attackers don’t think in patterns; they think in systems.

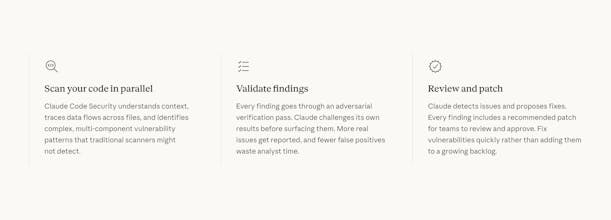

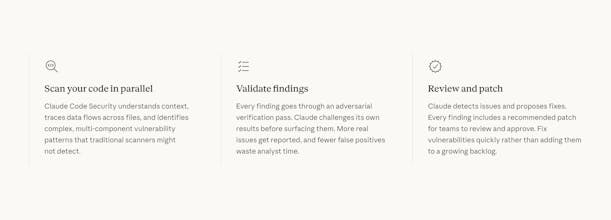

Claude Code Security reasons through your entire codebase like a human security researcher. It traces data flows, understands business logic, challenges its own findings, and then proposes targeted patches for human approval.
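To make "tracing data flows" concrete, here is a toy sketch of the idea. It says nothing about how Claude Code Security works internally; the flow graph, the node names, and the `trace_tainted_paths` helper are all made up for illustration. It follows untrusted input across several hops until it reaches a dangerous operation, which is the kind of cross-file path a single-line pattern match would not see.

```python
from collections import deque

# Hypothetical toy program: each entry means "data flows from key to values".
# All node names are invented for this example.
FLOWS = {
    "http.request.params": ["handler.user_id"],
    "handler.user_id": ["service.build_filter"],
    "service.build_filter": ["repo.query_string"],
    "repo.query_string": ["db.execute"],
    "config.page_size": ["repo.limit"],
    "repo.limit": ["db.execute"],
}
SOURCES = {"http.request.params"}  # untrusted input
SINKS = {"db.execute"}             # dangerous operation


def trace_tainted_paths(flows, sources, sinks):
    """Return every path from an untrusted source to a sink (breadth-first)."""
    paths = []
    for source in sorted(sources):
        queue = deque([[source]])
        while queue:
            path = queue.popleft()
            node = path[-1]
            if node in sinks:
                paths.append(path)
                continue
            for nxt in flows.get(node, []):
                if nxt not in path:  # skip cycles
                    queue.append(path + [nxt])
    return paths


if __name__ == "__main__":
    for path in trace_tainted_paths(FLOWS, SOURCES, SINKS):
        print(" -> ".join(path))
```

Only the request-parameter path is reported. The `config.page_size` path also ends at `db.execute`, but it does not start from untrusted input, so it is left alone.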

Not just “here’s a vulnerability.” But “here’s why it matters and here’s a fix.”

The adversarial self-verification step is especially interesting. Fewer false positives. More signal. Less wasted analyst time. Released as a limited research preview, this feels like a shift from static scanning to contextual reasoning.
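Here is one rough way to picture a "challenge your own findings" pass. It is a sketch under my own assumptions, not the product's actual design: the `Finding` class, the `challenge` and `triage` helpers, and the mitigation table are all hypothetical. Each candidate finding gets a second pass that tries to disprove it, and only the survivors go on to a human.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    title: str
    evidence: list                                 # reasons it might be real
    rebuttals: list = field(default_factory=list)  # reasons it might not be


def challenge(finding, known_mitigations):
    """Adversarial pass: collect any mitigation that undercuts the finding."""
    finding.rebuttals.extend(known_mitigations.get(finding.title, []))
    return finding


def triage(findings, known_mitigations):
    """Split findings into those that survive the challenge and those that don't."""
    confirmed, dismissed = [], []
    for finding in findings:
        challenge(finding, known_mitigations)
        if finding.rebuttals:
            dismissed.append(finding)
        else:
            confirmed.append(finding)
    return confirmed, dismissed


if __name__ == "__main__":
    # Hypothetical inputs, invented for illustration.
    candidates = [
        Finding("SQL injection in report filter", ["user input reaches query"]),
        Finding("Open redirect on login", ["redirect target taken from URL"]),
    ]
    mitigations = {
        "Open redirect on login": ["target is checked against an allowlist"],
    }
    confirmed, dismissed = triage(candidates, mitigations)
    print("For human review:", [f.title for f in confirmed])
    print("Dismissed:", [f.title for f in dismissed])
```

Nothing here applies a patch automatically. The confirmed list is just a queue for a person to review, in line with the "for human approval" framing above.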

Hot question: does this make AI code review tools obsolete?

Short answer: not immediately.

Long answer: tools focused on PR reviews and developer productivity operate earlier in the lifecycle. Claude Code Security feels deeper, more like an AI security researcher auditing entire systems.

If it continues improving at finding novel, logic-level flaws (not just surface bugs), it could compress a huge amount of specialized security review work.

Obsolete? Probably not overnight.

Pressure on them to evolve? Absolutely.

What do you think... augmentation layer or category killer?