Knapsack App

A safe & simple OpenClaw app for Macs

43 followers

We integrated the Clawdbot / Moltbot / OpenClaw library into a Mac app, added a private notetaker, email automation, and a set of safety guardrails, and open-sourced the result.

This is the 2nd launch from Knapsack App.

Knapsack OpenClaw App

Launching today

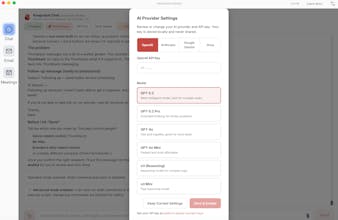

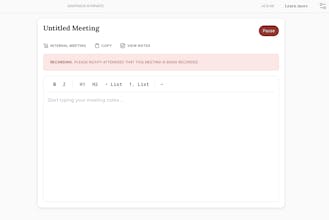

Knapsack is an open-source desktop client for OpenClaw / Clawdbot / Moltbot, the AI agent that controls your browser and acts on your behalf. Giving an AI that much power is dangerous without guardrails, so Knapsack adds them: blocking of sensitive paths (SSH, credentials, .env files), prompt injection defense, limits on tool-call loops, mandatory confirmation before the agent sends messages or spends money, and sandboxed scripting. It also bundles a private notetaker, an email autopilot, and support for multiple LLMs. It runs locally and is open source.
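
To make three of these guardrails concrete (sensitive path blocking, tool loop limits, and confirmation before sending or spending), here is a minimal Python sketch of a check that runs on every tool call an agent proposes. It is an illustration under stated assumptions, not Knapsack's or OpenClaw's actual code: the tool names (`send_message`, `send_email`, `make_payment`), the blocklist entries, the `path` argument key, the `Guardrails` class, and the default limit of 25 calls are all hypothetical. Prompt injection defense and sandboxed scripting are not shown.

```python
from pathlib import PurePosixPath
from typing import Callable

# Hypothetical configuration for illustration only; these are not
# Knapsack's or OpenClaw's real names, paths, or limits.
BLOCKED_DIRS = {".ssh", ".aws", ".gnupg"}
BLOCKED_FILES = {".env", "credentials", "id_rsa", "id_ed25519"}
CONFIRM_TOOLS = {"send_message", "send_email", "make_payment"}


def is_sensitive_path(path: str) -> bool:
    """True if any path component is a blocked directory or file name."""
    p = PurePosixPath(path)
    if any(part in BLOCKED_DIRS for part in p.parts):
        return True
    # Catch ".env" itself plus variants such as ".env.local".
    return p.name in BLOCKED_FILES or p.name.startswith(".env.")


class Guardrails:
    """Hypothetical gate that vets each tool call before it runs."""

    def __init__(self, confirm: Callable[[str, dict], bool], max_calls: int = 25):
        self.confirm = confirm      # asks the user; returns True to allow
        self.max_calls = max_calls  # cap on tool calls per task
        self.calls = 0

    def check(self, tool: str, args: dict) -> None:
        """Raise PermissionError if the proposed call must not run."""
        self.calls += 1
        # Tool loop limit: stop an agent that keeps calling tools.
        if self.calls > self.max_calls:
            raise PermissionError(f"tool loop limit of {self.max_calls} reached")
        # Sensitive path blocking for any call that names a file path.
        path = args.get("path")
        if path is not None and is_sensitive_path(path):
            raise PermissionError(f"blocked sensitive path: {path}")
        # Mandatory confirmation for actions that send or spend.
        if tool in CONFIRM_TOOLS and not self.confirm(tool, args):
            raise PermissionError(f"user declined {tool}")
```

For example, `Guardrails(confirm=lambda tool, args: False).check("read_file", {"path": "/Users/me/.ssh/id_rsa"})` raises `PermissionError`, while a read of `notes/todo.md` passes. Raising an exception rather than returning a flag means a caller cannot silently ignore a refusal.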

Free