Official Z.ai platform for trying our new MIT-licensed GLM models (Base, Reasoning, Rumination). A simple UI focused on model interaction. Free.

This is the 6th launch from Z.ai.

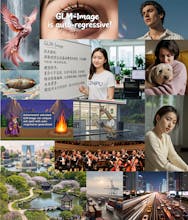

GLM-Image

Launching today

GLM-Image combines a 9B autoregressive model with a 7B diffusion decoder. This hybrid architecture excels at knowledge-dense generation, making it well suited to posters, diagrams, and precise text rendering. It is open-source and ready for text-to-image (T2I) and image-to-image (I2I) tasks.

Free

Launch Team

Okara

Flowtica Scribe

Hi everyone!

The architecture here is what caught my eye immediately. We have been debating "Autoregressive vs. Diffusion" for a long time in the generative AI space, but GLM-Image just said: "Why not both?"

It uses a 9B AR (autoregressive) model, basically a modified LLM, to handle the "logic" (layout, complex prompt understanding, and text planning), and then passes that to a 7B diffusion decoder that handles the "art" and the high-frequency details.
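To make that split concrete, here is a toy, stdlib-only sketch of the two-stage idea: an autoregressive stage emits a plan one token at a time, and a diffusion-style stage turns noise into output conditioned on that plan. Everything in it is hypothetical (`ar_plan`, `diffusion_decode`, the `TRANSITIONS` table, the `REGION_VALUE` mapping); it is not GLM-Image's code or API. The "AR model" is a greedy bigram table, and the "decoder" runs a deterministic DDIM-style reverse loop with an oracle denoiser that already knows the clean target.

```python
import math
import random

# Hypothetical next-token scores standing in for the 9B AR model.
# A bigram table: each choice depends only on the previous token,
# whereas a real LLM-style model attends to the whole prefix.
TRANSITIONS = {
    "<bos>": {"title": 0.9, "image": 0.1},
    "title": {"image": 0.8, "caption": 0.2},
    "image": {"caption": 0.7, "<eos>": 0.3},
    "caption": {"<eos>": 1.0},
}

# Hypothetical mapping from a planned region to its "clean" pixel value.
REGION_VALUE = {"title": 1.0, "image": 0.5, "caption": -0.5}


def ar_plan(max_len: int = 8) -> list[str]:
    """Stage 1 ("logic"): greedy autoregressive decoding of a layout plan."""
    tokens = ["<bos>"]
    while len(tokens) < max_len:
        scores = TRANSITIONS[tokens[-1]]
        nxt = max(scores, key=scores.get)  # greedy: pick the top-scoring token
        if nxt == "<eos>":
            break
        tokens.append(nxt)
    return tokens[1:]  # drop <bos>


def diffusion_decode(plan: list[str], steps: int = 50, seed: int = 0) -> list[float]:
    """Stage 2 ("art"): deterministic DDIM-style reverse process.

    The denoiser is an oracle that knows the clean target implied by the
    plan; a real decoder would be a trained network predicting the noise.
    """
    rng = random.Random(seed)
    x0 = [REGION_VALUE[tok] for tok in plan]
    # Cosine schedule: alpha_bar is ~0 (pure noise) at t=steps and 1 (clean) at t=0.
    alpha_bar = [math.cos(0.5 * math.pi * t / steps) ** 2 for t in range(steps + 1)]
    x = [rng.gauss(0.0, 1.0) for _ in x0]  # start from Gaussian noise
    for t in range(steps, 0, -1):
        a_t, a_prev = alpha_bar[t], alpha_bar[t - 1]
        new_x = []
        for xi, ci in zip(x, x0):
            # Noise estimate consistent with x_t = sqrt(a_t)*x0 + sqrt(1-a_t)*eps
            eps_hat = (xi - math.sqrt(a_t) * ci) / math.sqrt(1.0 - a_t)
            x0_hat = ci  # oracle prediction of the clean value
            new_x.append(math.sqrt(a_prev) * x0_hat + math.sqrt(1.0 - a_prev) * eps_hat)
        x = new_x
    return x


def generate() -> tuple[list[str], list[float]]:
    """Run the AR planner, then hand its plan to the decoder."""
    plan = ar_plan()
    return plan, diffusion_decode(plan)


if __name__ == "__main__":
    plan, pixels = generate()
    print(plan)
    print([round(v, 3) for v in pixels])
```

The point of the sketch is the interface: the decoder never decides what goes where, it only renders what the plan specifies. In the real system both stages would be trained networks rather than a lookup table and an oracle.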

This approach seems to directly address the biggest weaknesses of current pure diffusion models: accurate text rendering and complex spatial relationships. The text-rendering accuracy benchmarks for both English and Chinese look incredible.

Haven't tried the local deployment yet. The hardware requirements look a bit heavy, but the quality looks very promising. And I'm sure quantized versions are on the way.
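For a rough sense of why quantization matters here, the snippet below estimates the memory needed just to hold the weights of the two components named in the launch description (9B + 7B parameters). It is a back-of-the-envelope sketch under stated assumptions: 16 bits per parameter for unquantized (bf16/fp16) weights, decimal GB, and it ignores activations, any text encoder or VAE, and runtime overhead, so real requirements will be higher. The names `COMPONENTS_B` and `weight_memory_gb` are illustrative only.

```python
# Parameter counts (in billions) taken from the launch description.
COMPONENTS_B = {"ar_model": 9, "diffusion_decoder": 7}


def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Decimal GB (1e9 bytes) needed just to store the weights.

    params_billion * 1e9 params * (bits / 8) bytes / 1e9 bytes-per-GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_param / 8


def total_weight_memory_gb(bits_per_param: int) -> float:
    """Sum over both components, weights only (no activations or overhead)."""
    return sum(weight_memory_gb(p, bits_per_param) for p in COMPONENTS_B.values())


if __name__ == "__main__":
    # Assumed precisions: unquantized bf16/fp16, then common 8- and 4-bit quantization.
    for label, bits in [("bf16/fp16", 16), ("int8", 8), ("4-bit", 4)]:
        print(f"{label:>9}: ~{total_weight_memory_gb(bits):.0f} GB of weights")
```

Halving the bits per parameter halves the weight footprint, which is why a 4-bit build would bring the weights-only estimate down to roughly a quarter of the bf16 figure.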

Wow, this is huge! Okay, I'm adding this to ImageRouter right now. You guys are unstoppable!

How can I get higher API limits?