Orbit AI

Feature-level AI observability using real runtime data

13 followers

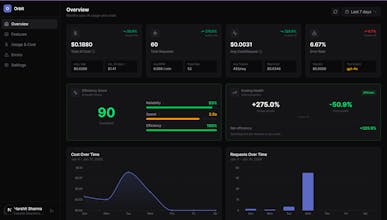

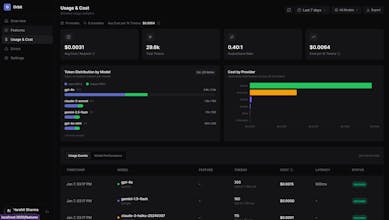

Orbit helps teams understand how AI behaves inside their product. Vendor dashboards show usage by model or API key. Orbit connects AI usage to product features using SDK-based runtime data. Track cost, latency, errors, and usage at a feature level — using real production data, not estimates.

Hello Product Hunt 👋

I’m excited to share Orbit, an AI observability tool built for teams running AI features in production.

Orbit started from a common problem we ran into while shipping AI-powered products: vendor dashboards show usage by model or API key, but they don’t show how AI actually behaves inside a product.

Once AI is embedded across multiple features, it becomes difficult to answer practical questions like:

Which product features are driving AI cost?

Where does AI latency impact user experience?

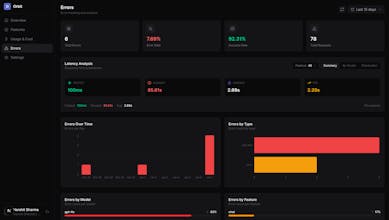

Which features are failing or degrading in production?

Why we built Orbit

Existing tooling focuses on providers and models, not on product features.

As a result, teams often lack feature-level visibility into AI cost, performance, and reliability.

Orbit was built to close that gap by capturing real runtime data from production and tying AI behavior directly to product features.

How Orbit works

SDK-based instrumentation

Capture AI metrics directly from application code — no proxies, traffic interception, or scraping.

Feature-level visibility

See cost, latency, error rates, and usage broken down by feature, environment, and model.

Real production data

All metrics are derived from actual runtime events, not estimates or sampling.
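To make the three ideas above concrete, here is a minimal Python sketch of in-code instrumentation that tags each AI call with a product feature and then rolls the recorded events up per feature. It is not Orbit's SDK: `track_ai_call`, `AIEvent`, `summarize_by_feature`, the feature names, and the model names are all hypothetical and chosen only for illustration, and the cost value is hard-coded where a real integration would presumably derive it from the provider's usage data.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class AIEvent:
    """One recorded AI call (hypothetical schema, not Orbit's)."""
    feature: str
    model: str
    environment: str
    latency_ms: float
    cost_usd: float
    error: bool


# In-memory sink for the sketch; a real SDK would ship events to a backend.
EVENTS = []


@contextmanager
def track_ai_call(feature, model, environment="production"):
    """Time the wrapped block and record exactly one event for it."""
    call = {"cost_usd": 0.0}  # the caller fills this in after the AI call
    start = time.perf_counter()
    error = False
    try:
        yield call
    except Exception:
        error = True
        raise  # never swallow the application's own errors
    finally:
        latency_ms = (time.perf_counter() - start) * 1000
        EVENTS.append(
            AIEvent(feature, model, environment, latency_ms, call["cost_usd"], error)
        )


def summarize_by_feature(events):
    """Aggregate calls, error rate, cost, and average latency per feature."""
    totals = defaultdict(
        lambda: {"calls": 0, "errors": 0, "cost_usd": 0.0, "latency_ms": 0.0}
    )
    for event in events:
        row = totals[event.feature]
        row["calls"] += 1
        row["errors"] += int(event.error)
        row["cost_usd"] += event.cost_usd
        row["latency_ms"] += event.latency_ms
    return {
        feature: {
            "calls": row["calls"],
            "error_rate": row["errors"] / row["calls"],
            "cost_usd": row["cost_usd"],
            "avg_latency_ms": row["latency_ms"] / row["calls"],
        }
        for feature, row in totals.items()
    }


# A successful call attributed to a hypothetical "search_summary" feature.
with track_ai_call("search_summary", "model-a") as call:
    call["cost_usd"] = 0.002  # placeholder value for the sketch

# A failing call attributed to a hypothetical "chat_assistant" feature.
try:
    with track_ai_call("chat_assistant", "model-b"):
        raise TimeoutError("simulated provider timeout")
except TimeoutError:
    pass

summary = summarize_by_feature(EVENTS)
```

Because every call is recorded rather than sampled, the per-feature totals in `summary` reflect each event that passed through the wrapper, which is the property the "real production data" point describes.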

Free to get started

No credit card required.

Thanks for checking out Orbit.

Feature-level slicing is exactly what most teams are missing, because “per model” doesn’t map to product decisions.

@rahul_ranjan29 Yeah, exactly. Model-level stats are useful, but they don’t tell you which product decisions are actually driving cost or risk.

Interesting use case. Grafana of AI agents, basically.

@mrinal_singh8 Thanks! It's not just a dev tool; it could be tailored for devs, PMs, and FinOps teams to give each of them greater visibility.