Sudo is a unified API for LLMs: a faster, cheaper way to route across OpenAI, Anthropic, Gemini, and more. One endpoint with lower latency, higher throughput, and lower costs than alternatives. Build smarter, scale faster, and do so with zero lock-in.

Sudo AI

👋 Hey Product Hunt!

I’m Ventali. I’ve been building in AI since before GPT-3, and one thing has always bugged me: the AI development stack is fragmented and clunky.

• Inference is expensive (and unpredictable).

• Managing context data and memories is complicated.

• Billing end users requires complex usage tracking.

• There are very few ways to monetize AI apps.

So we built Sudo:

⚡ One API to route across top models: faster routing, lower costs, zero lock-in

💽 Context management system (CMS) to turn AI apps into stateful, knowledgeable, memory-aware agents

💳 Real-time billing (usage-based, subscription, or hybrid): we will charge your end users for you

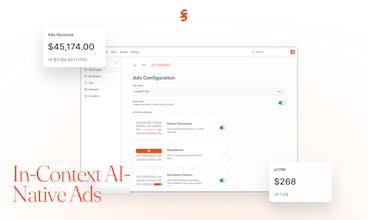

📈 Optional AI-native ads: contextual, policy-controlled, and personalized

The beta is live now with routing and dashboards. Context management, billing, and ads will be switched on during the beta window.

✨ To thank early testers, we’re giving a 10% bonus on credits purchased, plus $1 in free credits when you first sign up, so you can test the API immediately.

We’d love your feedback:

• What feels clunky?

• What’s missing for your use case?

• What would make Sudo a true drop-in for you?

🔑 Get a dev key: https://sudoapp.dev

📚 Docs: https://docs.sudoapp.dev

💬 Discord: https://discord.gg/UbPf5BgrfK

Web to MCP

@ventali Congratulations on the launch. I'm just curious: are there any significant differences between Sudo and OpenRouter?

Sudo AI

@thepmfguy Hi Gaurav, nice to meet you, and great question! Sudo and OpenRouter are both unified APIs for LLMs. The big differences are:

• Performance & cost → we benchmarked Sudo directly against OpenRouter, and Sudo delivers faster routing and lower cost across models such as DeepSeek, GPT-5-nano, Grok-4-0709, Gemini-2.0-Flash, and Claude-3.7-Sonnet (benchmarks here: https://github.com/sudoappai/sudo-benchmarks).

• Vertical features → we’re adding context management (so apps can be stateful and memory-aware) and monetization tools (such as in-context ads and built-in billing), all through a single API endpoint.

Curious: from your perspective, which matters more to you right now, performance or developer-experience tooling?

Mom Clock

Congrats on the launch, Ventali! Does Sudo AI work like OpenRouter?

Sudo AI

@justin2025 Thanks Justin! Great question. In spirit, yes: Sudo is also a unified API for multiple models. The difference is that we provide faster routing on models such as DeepSeek, GPT-5-nano, o3, Grok-4-0709, Gemini-2.0-Flash, and Claude-3.7-Sonnet, and we cost less than alternatives (we bill at cost and give early users 10% bonus credits).

On top of that, we’re building vertical features for app developers, such as app-level context management (to make agents memory-aware) and in-context monetization (optional, policy-controlled ads), all through a single API endpoint.

Curious: when you’ve used OpenRouter (or similar), what’s felt missing for your use case?

LiveDocs

I've been following this project for a while, and the vision is exciting! Congrats to the team for shipping!

Sudo AI

@arslnb Arsalan!! Thanks so much for following along and for the kind words 🙏 We’re excited to finally get Sudo into developers’ hands.

Out of curiosity, which part of the product would you be most excited to try first (faster/cheaper routing, context management, billing, or ads)? Any feedback there would really help us shape the roadmap.

Congrats on the launch! 🚀 Developer stacks for AI are getting more complex, and Sudo AI is tackling real pain points. How does Sudo’s unified API optimize routing across providers like OpenAI, Anthropic, and Gemini, and what are the efficiency gains for latency, cost, or throughput? Can you share how the Context Management System enables stateful, memory-aware agents, and how real-time billing or AI-native ad monetization options work for app builders?

Sudo AI

Hi @sneh_shah! Our Rust-based backend and optimized server infrastructure ensure that we are competitive on latency and throughput with the best LLM routers on the market. Our benchmarks are open source: https://github.com/sudoappai/sudo-benchmarks

Our context management system (CMS) will go further than other AI developer platforms do. Right now, LLM APIs don't have out-of-the-box features that let you connect knowledge bases to the agents and AI apps you build. We are going to change that: Sudo will be the first LLM router with a managed vector database service, RAG for your agents and AI apps, memories via temporal knowledge graphs (tKG), and automatic context window optimization.
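For readers less familiar with the retrieval step behind RAG, here is a minimal, illustrative sketch. It is not Sudo's implementation or API: the toy 3-dimensional embeddings, the in-memory `DOCS` list standing in for a managed vector database, and the `retrieve` and `build_prompt` helpers are all hypothetical. It ranks stored vectors by cosine similarity to a query vector and prepends the best matches to the prompt, which is the basic idea of connecting a knowledge base to an AI app.

```python
import math

# Hypothetical in-memory "vector store" of (text, embedding) pairs.
# A real vector database would hold model-generated embeddings;
# these 3-dimensional vectors are made up for illustration.
DOCS = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.0]),
    ("Our API supports streaming responses.", [0.1, 0.8, 0.2]),
    ("Support is available 24/7 via chat.", [0.2, 0.1, 0.9]),
]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k stored texts most similar to the query embedding."""
    ranked = sorted(DOCS, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, query_vec: list[float]) -> str:
    """Prepend the retrieved passages to the user's question (the augmented prompt)."""
    context = "\n".join(f"- {t}" for t in retrieve(query_vec))
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Pretend this vector is the embedding of a refund-related question.
    print(build_prompt("How long do refunds take?", [0.85, 0.15, 0.05]))
```

In practice the embeddings would come from an embedding model and the search from an approximate nearest-neighbor index rather than a full sort, but the ranking logic is the same.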

Real-time billing: billing end users is a pain point many developers run into, especially now that model prices change so frequently. When we launch monetization for AI calls, Sudo will bill your end users for you at your chosen markup rate. All you'll have to do is authenticate your users with Sudo through our SDK and pass their user ID when you call the Sudo API. We'll charge their account, and you'll get paid. You'll never have to worry about changing model prices or integrating payment platforms again.
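The markup arithmetic behind this kind of usage-based billing can be sketched as follows. This is a hypothetical illustration, not Sudo's SDK or pricing logic: the `ModelPrice` type, the `charge_for_call` helper, the per-million-token prices, and the 20% markup are all invented for the example. Amounts are kept in integer micro-dollars and rounded up, which avoids floating-point drift when many tiny per-call charges are summed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Hypothetical provider price, in micro-dollars per million tokens."""
    input_per_mtok: int
    output_per_mtok: int


def provider_cost_micros(price: ModelPrice, input_tokens: int, output_tokens: int) -> int:
    """Raw provider cost of one call in micro-dollars, rounded up."""
    total = price.input_per_mtok * input_tokens + price.output_per_mtok * output_tokens
    # Ceiling division (for non-negative values) so fractions are never undercharged.
    return -(-total // 1_000_000)


def charge_for_call(
    price: ModelPrice, input_tokens: int, output_tokens: int, markup_bps: int
) -> tuple[int, int]:
    """Return (end-user charge, developer margin) in micro-dollars.

    markup_bps is the developer's markup in basis points (2000 = 20%).
    """
    cost = provider_cost_micros(price, input_tokens, output_tokens)
    charge = cost + -(-cost * markup_bps // 10_000)
    return charge, charge - cost


if __name__ == "__main__":
    # Hypothetical prices: $0.50 per million input tokens, $1.50 per million output tokens.
    price = ModelPrice(input_per_mtok=500_000, output_per_mtok=1_500_000)
    charge, margin = charge_for_call(price, 2_000, 500, markup_bps=2_000)
    print(charge, margin)  # 2100 350 (i.e. $0.0021 charged, $0.00035 to the developer)
```

Because the price is looked up per call, a change in a model's price only changes the `ModelPrice` value, not the billing logic.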

AI-Native Ads: Just as browsers and websites monetized traffic with ads, we believe the spread of AI usage will drive costs toward zero, as cost-sensitive customers demand free options and AI provider companies burn through cash. Advertising will inevitably become a preferred way to monetize a consumer-facing AI app or shopping agent, and we're 60% of the way through building our own system for contextually relevant product placement in AI responses. We're also experimenting with banner ads and other formats, all contextually relevant to the AI prompt and conversation. Developers will be able to simply toggle this option on and get paid their publisher revenue.

What a lovely product @kevin @0xphoenice @ventali 🔥🔥🔥

Sudo AI

@kevin @0xphoenice @bhautikk Thanks so much for your kind words, Bhautik!

Congratulations on the launch!! What an accomplishment! A few friends have already recommended this product to me!! I really love how much faster routing will be.

Sudo AI

@jasmine_karkafi Thanks Jasmine!! What are you using the router for, and what are you building?

Magiclight

Love the one-endpoint-for-all-LLMs idea; super practical. Wishing you a successful launch! Curious: do you plan to expand support to open-source models too?

Sudo AI

@jason123 Yes, absolutely! We’re expanding support to open-source models while adding more providers, and we’re also considering hosting them ourselves to make access even smoother.

Out of curiosity, which open-source models would you most like to see added first?