toon-parse

Fit 40% more context into your LLM prompts for free

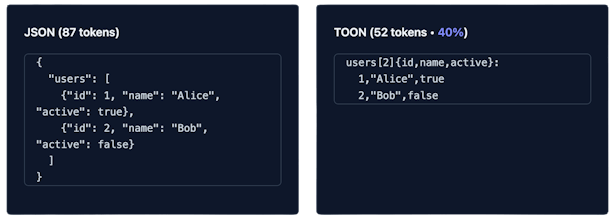

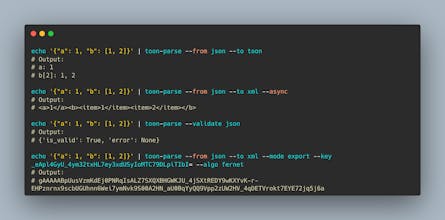

JSON is a "token tax" on AI apps. TOON cuts token usage by up to 40%, effectively expanding your LLM context window.

Key Features:

1. CLI Utility: A powerful terminal tool for instant data conversion, encryption, and validation.
2. Secure Middleware: Production-ready pipelines with Fernet (AES-128) or XOR encryption.
3. Mixed-Text Support: Automatically extracts data hidden in messy LLM responses.
4. Unified Hub: Convert between JSON, XML, YAML, CSV, and TOON.

Stop wasting tokens on syntax.

Hi Product Hunt! I’m Ankit, the creator of TOON Parse.

Recently, I came across a new data format called TOON (Token-Oriented Object Notation). It claims to reduce token usage, and when I tested it, the claims turned out to be true. But when I looked for tools to use it in my projects, the ones I found were either unstable or very basic. So I decided to build my own automation suite, and toon-parse was born.

The Problem: JSON was made for machines, not for token-limited LLMs. It wastes space, hits context windows too early, and costs too much.

The Solution: TOON (Token-Oriented Object Notation). It represents the exact same data model as JSON but with significantly fewer tokens.
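To make the idea concrete, here is a minimal sketch of why a TOON-style layout is shorter than JSON for uniform records: field names are written once in a header instead of being repeated in every row. The `encode_table` helper is hypothetical and written only for this illustration; it is not the toon-parse API, it skips quoting and escaping, and it compares character counts as a rough stand-in for tokens.

```python
import json

def encode_table(key, rows):
    """Encode a non-empty list of flat dicts in a TOON-style tabular layout.

    Illustration only: no quoting or escaping, so values must be plain
    numbers or simple strings without commas or newlines.
    """
    if not rows:
        raise ValueError("rows must not be empty")
    fields = list(rows[0].keys())
    if any(list(row.keys()) != fields for row in rows):
        raise ValueError("all rows must share the same keys in the same order")
    # Header: name, row count, and the field list, each written exactly once.
    header = key + "[" + str(len(rows)) + "]{" + ",".join(fields) + "}:"
    # Body: one indented, comma-separated line per row.
    lines = ["  " + ",".join(str(row[f]) for f in fields) for row in rows]
    return "\n".join([header] + lines)

users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]

as_json = json.dumps({"users": users})
as_toon = encode_table("users", users)

print(as_toon)
print(len(as_json), "characters as JSON vs", len(as_toon), "as TOON-style text")
```

The saving grows with the number of rows, since each extra JSON row repeats every key while each extra TOON-style row adds only the values. Actual token savings depend on the tokenizer and on the shape of the data.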

What makes TOON Parse different?

📉 Token Optimization: Up to 40% reduction out of the box.

🛠 Unified Converters: Move between JSON, XML, YAML, CSV, and TOON effortlessly.

🔐 Production Ready: Built-in secure middleware encryption/decryption (Fernet/XOR) within your conversion pipeline.

🧠 Mixed Text Support: It can 'find' data inside messy LLM conversational responses and parse it automatically.

⚡ Async First: Built for modern high-performance Python backends (FastAPI, LangChain).

💻 CLI Utility: Convert and validate data directly from your terminal.
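As a rough idea of what mixed-text extraction involves, the sketch below pulls JSON values out of a conversational reply using only the standard library. This is a generic technique shown under my own assumptions, not how toon-parse implements the feature; `extract_json_values` is a hypothetical name, and the sketch handles JSON only, not TOON.

```python
import json

def extract_json_values(text):
    """Return every JSON object or array embedded in free-form text."""
    decoder = json.JSONDecoder()
    found = []
    pos = 0
    while pos < len(text):
        # Jump to the next character that could open a JSON object or array.
        starts = [i for i in (text.find("{", pos), text.find("[", pos)) if i != -1]
        if not starts:
            break
        start = min(starts)
        try:
            # raw_decode parses one value starting at `start` and reports
            # the index where that value ends, ignoring trailing text.
            value, end = decoder.raw_decode(text, start)
        except json.JSONDecodeError:
            # Not valid JSON here; move one character on and keep scanning.
            pos = start + 1
            continue
        found.append(value)
        pos = end
    return found

reply = 'Sure! Here is the record: {"id": 7, "tags": ["a", "b"]} Let me know if you need more.'
print(extract_json_values(reply))
```

Scanning for an opening brace or bracket and letting the decoder decide where the value ends avoids fragile regular expressions, at the cost of retrying at every false start.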

It’s open-source and ready for your Python stack today. I’d love to hear your thoughts on the project, and I’m looking forward to hearing from anyone willing to contribute!

Try Playground: https://toonformatter.net

Token count reduction proof: https://platform.openai.com/tokenizer

[Using the official tokenizer to get real counts instead of relying on the rough rule of thumb that 1 token ≈ 4 characters]