Launching today

Toolspend

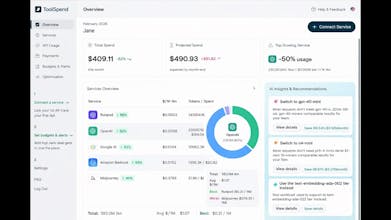

Track AI spend, usage, and cost across tools

238 followers


Stop losing money on forgotten SaaS subscriptions and "ghost" licenses. Toolspend is the ultimate command center for your stack, designed to give you 100% spend visibility without the manual upkeep. While other tools just list your apps, Toolspend deep-dives into your actual usage and spend patterns. We identify underutilized seats, detect duplicate tools across teams, and alert you before every renewal. Toolspend helps you automate the toil of procurement so you can focus on building!

Toolspend

Hey Product Hunt

AI tools are exploding inside companies.

What isn’t exploding? Visibility into what they actually cost.

Teams are subscribing to ChatGPT, Claude, Midjourney, Cursor, Perplexity, ElevenLabs… and finance only finds out when the bill hits.

The real problem?

AI usage (tokens) and actual spend are completely disconnected.

That’s why we built ToolSpend.

It connects your AI services + banking data and shows:

• What you're really spending

• Which teams are driving usage

• Where you’re overpaying

• Smarter model alternatives

AI shouldn’t be the next AWS surprise bill.

Excited to hear your feedback, especially from founders & dev teams already scaling with AI.

Lancepilot

Hey Product Hunt 👋

We’re the makers of ToolSpend - and we built this because we ran into the same problem ourselves.

Inside our own team, we were using ChatGPT, Claude, Midjourney, Cursor, Perplexity AI, and ElevenLabs across different projects.

Everyone was moving fast.

No one knew what we were actually spending.

Engineering saw token usage.

Finance saw card charges.

Those two worlds never met.

We realized AI spend is fundamentally different from traditional SaaS:

• Usage (tokens) ≠ invoices

• Teams experiment constantly

• Model pricing changes fast

• “Just $20/month” tools multiply quickly

So we built ToolSpend to connect AI services + banking data into one clear view:

Real AI spend across providers

Usage by team/project

Overlapping subscriptions

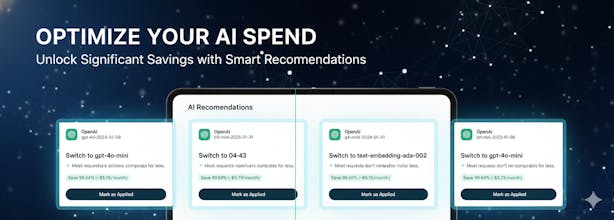

Smarter / cheaper model alternatives

Our goal: make AI spend observable before it becomes your next surprise bill.

We’re early and building this with founders & dev teams who are scaling fast with AI.

We’d love your honest feedback:

• What’s hardest about managing AI spend today?

• What metrics do you wish you had?

• What would make this a no-brainer to adopt?

Thanks for checking us out 🙌

Meet-Ting

@priyankamandal The chokehold I'm in with all our tools is bananas.

Toolspend

@priyankamandal @dbul 😂 I feel this.

That “we’ll just try one more AI tool” phase turns into 15 subscriptions real fast.

That’s exactly the chokehold we’re trying to fix with ToolSpend — visibility before the month-end surprise hits.

@visagar This is such a smart niche to go after! 👏 I (well, the whole team) use a lot of AI tools daily, and manually tracking what we’re actually spending… it gets messy fast. Love the idea!

I’m curious – how granular can the team-level visibility get? Can you attribute usage down to specific projects or cost centers, or is it mainly per tool / per team right now?

Toolspend

@tereza_hurtova Love this question and honestly… I’ll give you the real answer.

Right now, it’s strong at:

• Per tool visibility (OpenAI, Anthropic, etc.)

• Per API key / account

• Team-level rollups

• Token + cost breakdown where the provider allows it
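
For anyone wondering what a key-to-team rollup might look like in practice, here is a minimal Python sketch. It is illustrative only and not ToolSpend's actual implementation: the record fields, the `KEY_TO_TEAM` mapping, the `rollup_by_team` helper, and all the numbers are hypothetical and made up for the example.

```python
from collections import defaultdict

# Hypothetical mapping from API key labels to teams (assumed for illustration).
KEY_TO_TEAM = {
    "key-support-bot": "support",
    "key-search": "platform",
    "key-scripts": "platform",
}


def rollup_by_team(records, key_to_team):
    """Sum tokens and cost per team; keys with no mapping land in 'unassigned'."""
    totals = defaultdict(lambda: {"tokens": 0, "cost_usd": 0.0})
    for rec in records:
        team = key_to_team.get(rec["api_key"], "unassigned")
        totals[team]["tokens"] += rec["tokens"]
        totals[team]["cost_usd"] += rec["cost_usd"]
    return dict(totals)


# Made-up usage records, one per provider and API key.
records = [
    {"provider": "OpenAI", "api_key": "key-support-bot", "tokens": 120_000, "cost_usd": 1.5},
    {"provider": "OpenAI", "api_key": "key-search", "tokens": 40_000, "cost_usd": 0.25},
    {"provider": "Anthropic", "api_key": "key-scripts", "tokens": 60_000, "cost_usd": 0.5},
    {"provider": "OpenAI", "api_key": "key-shared", "tokens": 200_000, "cost_usd": 2.0},
]

team_totals = rollup_by_team(records, KEY_TO_TEAM)
for team, total in sorted(team_totals.items()):
    print(team, total["tokens"], total["cost_usd"])
```

The `unassigned` bucket is the interesting part: a shared key that nobody mapped to a team is exactly the kind of spend that is hard to attribute.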

But project-level / cost-center attribution?

That’s where we want to go — and we’re figuring that out with users.

Truthfully, we didn’t want to overbuild assumptions about how teams structure AI spend. Some companies use separate API keys per project. Others share keys across everything. Some think in “cost centers,” others think in “clients,” others just want “who ran this model and why did it spike?”

So instead of guessing the perfect structure upfront, we’re starting with clear usage visibility and letting real usage patterns guide the next layer.

What we’re already seeing:

Even one provider (like OpenAI) behaves like 5–10 different cost centers depending on models, environments, and use cases. That’s the real chaos we’re trying to bring clarity to.

So short honest answer:

Granular at tool + key + team level today.

Project-level attribution is something we’re actively shaping based on feedback like yours.

If you had your ideal setup — would you want spend broken down by client, by internal product, or by something else entirely?

@visagar Appreciate the transparent answer! That approach makes a lot of sense to me. We’re building our product too, and I relate to that tension between the long-term vision and what’s realistic today. There’s always the “ideal structure” in your head… and then there’s the messy reality you discover through users. 😀

Starting with clear visibility and letting real usage patterns shape the next layer feels like the right call.

If I imagine an ideal setup, I’d probably want flexibility – the ability to view spend by client and by internal product/project.

In our case, cost centers shift depending on whether we’re experimenting, building core features, or running infra. So being able to re-group dynamically would be powerful.

And you’re right – even one provider can behave like multiple cost centers. That’s exactly where the chaos starts.

Excited to see how you evolve the attribution layer! 🙂

Lancepilot

Excited to hunt ToolSpend today 😎

Teams are rapidly adopting tools like ChatGPT, Claude, Midjourney, Cursor, and more, but visibility into actual AI spend is lagging behind.

AI usage (tokens) and real cash out the door rarely live in the same place. That’s the gap ToolSpend is solving by connecting AI services with financial data to show what you’re truly spending and where you can optimize.

If you're scaling with AI, this is a problem worth paying attention to.

Congrats to the team on the launch 👏

Who you gonna call? Ghost-license busters! :D 2026 is officially the year of too many AI subscriptions. Love the model alternative feature. Any plans for a one-click cancel button inside the dashboard?

Toolspend

@kostfast Thanks, Kostia — glad you like the model alternative feature.

A one-click cancel action is something we’re considering. Because cancellation flows differ by provider (and aren’t always supported via API), we’re prioritizing a safe “disconnect + stop spend” workflow first, then adding one-click cancel where possible. Which providers would be most useful for you?

@papuna_giorgadze1 OpenAI, Anthropic, and Midjourney for sure. We switch AI tools so fast these days that a one-click move for the whole stack is exactly what we need. Good luck with the build!

minimalist phone: creating folders

I think it is kinda useful for people who have like a million subscription plans. :)

Toolspend

@busmark_w_nika You’re right that, at first glance, it feels like this would only help people juggling a ton of subscriptions.

But what we’re seeing is something slightly different.

It’s about how even one AI provider now behaves like 10 different cost centers.

Take OpenAI as an example. A single team might be using:

• GPT-4o for their customer-facing chatbot

• GPT-4o mini for internal automation scripts

• GPT-4 Turbo for long-context document processing

• Embeddings API for semantic search

• Whisper for call transcription

• Image generation endpoints for marketing assets

• Assistants API with tool calls for internal workflows

On the invoice, that all shows up as one line item: “OpenAI.”

But operationally, each of those is a separate cost driver.

For example:

– A developer switches a background job from GPT-4o mini to GPT-4o “temporarily”

– An embeddings process runs more frequently than expected

– A support bot accidentally defaults to a higher-cost model

– A script forgets to cache responses

Suddenly the invoice jumps — and no one knows exactly why.

ToolSpend breaks usage down by model, endpoint, and token consumption so you can see what’s driving spend and whether a cheaper alternative could achieve similar results.
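
To make that concrete, here is a rough Python sketch of a per-model, per-endpoint breakdown. Treat it as an illustration under stated assumptions rather than how ToolSpend works internally: the event fields, the `cost_by_model_and_endpoint` and `top_cost_driver` helpers, the model names, and the prices in `PRICE_PER_MILLION` are all hypothetical and do not reflect real provider pricing.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens; NOT real provider pricing.
PRICE_PER_MILLION = {"large-model": 10.0, "small-model": 0.5}


def cost_by_model_and_endpoint(events, prices):
    """Aggregate tokens and estimated cost per (model, endpoint) pair."""
    totals = defaultdict(lambda: {"tokens": 0, "cost_usd": 0.0})
    for ev in events:
        key = (ev["model"], ev["endpoint"])
        totals[key]["tokens"] += ev["tokens"]
        totals[key]["cost_usd"] += ev["tokens"] / 1_000_000 * prices[ev["model"]]
    return dict(totals)


def top_cost_driver(totals):
    """Return the (model, endpoint) pair with the highest estimated cost."""
    return max(totals, key=lambda k: totals[k]["cost_usd"])


# Made-up usage events for a single provider.
events = [
    {"model": "small-model", "endpoint": "chat", "tokens": 4_000_000},
    # A background job "temporarily" switched to the larger model.
    {"model": "large-model", "endpoint": "chat", "tokens": 2_000_000},
    {"model": "small-model", "endpoint": "embeddings", "tokens": 1_000_000},
]

totals = cost_by_model_and_endpoint(events, PRICE_PER_MILLION)
driver = top_cost_driver(totals)
print(driver, totals[driver]["cost_usd"])
```

In this made-up data the larger model handles half as many chat tokens as the smaller one but accounts for most of the estimated cost, which is the kind of pattern a single “OpenAI” line item on an invoice hides.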

So it’s less about having a million subscriptions — and more about visibility inside the ones you already rely on.

Lancepilot

Toolspend

@iftekharahmad Thanks! 🙌 Honestly, it started as a way to understand our own AI spend better. Now we’re opening it up and learning from how teams actually use it.