Stimm

A modular, real-time AI voice assistant platform

4 followers

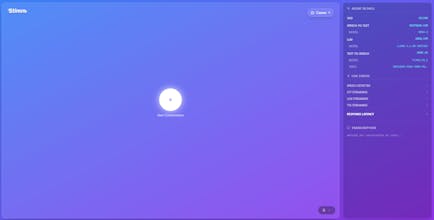

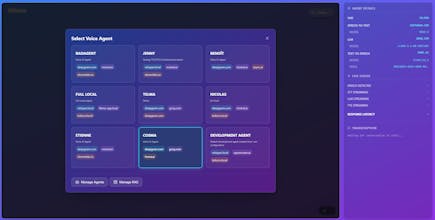

The Open Source Voice Agent Platform. Orchestrate ultra-low-latency AI pipelines for real-time conversations over WebRTC. A modular, real-time AI voice assistant platform built with Python (FastAPI) and Next.js. This project provides a flexible infrastructure for creating, managing, and interacting with voice agents using various LLM, TTS, and STT providers. - stimm-ai/stimm

Free
