Neurnav

Clarifies when, where, who, how, and what was written.

4 followers

People often assume products like this are easy wins. They’re not. Neurnav is slow to build, slightly irrational, and powered by obsession. It’s mostly just me (and a few friends) caring way too much about document diffs, version history, and where text actually comes from. From the outside, it probably looks like a bad idea. From the inside, it’s genuinely fun. I didn’t build Neurnav to chase numbers. I built it because I love experimenting and making tools that feel right. 😈

🚀 Show HN: Neurnav

Because “Did you use AI?” is the wrong question

Okay.

So your entire evaluation system falls apart because you can’t verify authorship?

✅ That’s not an AI problem.

❌ That’s YOUR problem.

---

What really pisses me off 😤

They act like:

“Used AI = doesn’t understand the work”

…is some kind of axiom.

Calculator ≠ “you don’t understand math”

Compiler ≠ “you don’t understand code”

So why is AI different?

---

🔥 The real question isn’t “did you use AI?”

It’s:

Can you explain your reasoning?

Can you defend your work?

Do you understand what you submitted?

---

🧠 So I built Neurnav

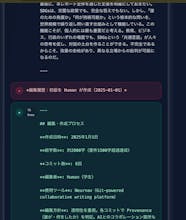

Every edit, human or AI, is tracked in Git, with provenance metadata attached to each commit.

You can see exactly who changed what, when, and why.
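The post doesn't say how that provenance is stored. Here is a minimal sketch, assuming it rides on Git's own fields: the commit author answers "who," the commit date answers "when," the diff answers "what," and the message answers "why." Human or AI origin is carried as Git trailers (the `Key: value` lines at the end of a commit message). The trailer names `Edit-Source` and `Edit-Tool`, and the `Provenance` shape, are hypothetical and not Neurnav's actual schema.

```typescript
// Hypothetical provenance record; trailer names are illustrative only.
// "Who" and "when" live in the commit's own author and date fields,
// so only origin and reason go into the message.
type EditSource = "human" | "ai";

interface Provenance {
  source: EditSource; // human-typed or AI-generated
  tool?: string;      // which assistant produced the text, if any
  reason: string;     // the "why", used as the commit summary line
}

const SOURCE_KEY = "Edit-Source";
const TOOL_KEY = "Edit-Tool";

// Summary line, blank line, then "Key: value" trailer lines.
function formatCommitMessage(p: Provenance): string {
  const trailers = [`${SOURCE_KEY}: ${p.source}`];
  if (p.tool) trailers.push(`${TOOL_KEY}: ${p.tool}`);
  return `${p.reason}\n\n${trailers.join("\n")}\n`;
}

// Read trailers back from the last paragraph of a commit message.
function parseTrailers(message: string): Record<string, string> {
  const paragraphs = message.trim().split(/\n\s*\n/);
  if (paragraphs.length < 2) return {}; // summary only, no trailer block
  const result: Record<string, string> = {};
  for (const line of paragraphs[paragraphs.length - 1].split("\n")) {
    const m = /^([A-Za-z0-9-]+):\s*(.*)$/.exec(line);
    if (m) result[m[1]] = m[2];
  }
  return result;
}

const msg = formatCommitMessage({ source: "ai", tool: "assistant", reason: "Tighten the introduction" });
console.log(parseTrailers(msg)); // { 'Edit-Source': 'ai', 'Edit-Tool': 'assistant' }
```

Storing origin as trailers keeps it readable by plain Git tooling (for example `git interpret-trailers --parse`), so the history stays useful even outside the product.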

---

Why?

I was already doing this with local Git repos for my own sanity…

…but constantly creating repos and pushing to GitHub was painful.

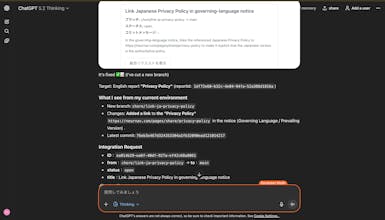

So I wrapped Git into a product that non-technical people can actually use.

---

🧰 Tech stack

Next.js 15

One Git repo per document

Branching/merging UI

Provenance metadata in every commit
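With one repo per document and provenance on every commit, "who wrote this line?" becomes a blame query. Git's `git blame` does this per line. The in-memory sketch below imitates the idea under stated assumptions: each revision is a full-text snapshot tagged with a hypothetical `source` field, and lines are matched with a plain longest-common-subsequence diff. That is simpler than Git's default Myers diff and quadratic in document length. The `Revision` and `AttributedLine` types are illustrative, not Neurnav's code.

```typescript
// Hypothetical snapshot of a document at one commit.
interface Revision {
  id: string;
  author: string;
  source: "human" | "ai";
  text: string;
}

// A line of the current text plus the revision that introduced it.
interface AttributedLine {
  line: string;
  revision: string;
  author: string;
  source: "human" | "ai";
}

// t[i][j] = length of the longest common subsequence of a[i..] and b[j..].
function lcsTable(a: string[], b: string[]): number[][] {
  const t = Array.from({ length: a.length + 1 }, () => new Array<number>(b.length + 1).fill(0));
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      t[i][j] = a[i] === b[j] ? t[i + 1][j + 1] + 1 : Math.max(t[i + 1][j], t[i][j + 1]);
    }
  }
  return t;
}

// Walk history oldest-first. Lines carried over unchanged keep their old
// attribution; every other line is credited to the revision that added it.
function blame(history: Revision[]): AttributedLine[] {
  let current: AttributedLine[] = [];
  for (const rev of history) {
    const prev = current.map((l) => l.line);
    const next = rev.text.split("\n");
    const t = lcsTable(prev, next);
    const out: AttributedLine[] = [];
    let i = 0;
    let j = 0;
    while (j < next.length) {
      if (i < prev.length && prev[i] === next[j]) {
        out.push(current[i]); // unchanged line
        i++;
        j++;
      } else if (i < prev.length && t[i + 1][j] >= t[i][j + 1]) {
        i++; // line removed in this revision
      } else {
        out.push({ line: next[j], revision: rev.id, author: rev.author, source: rev.source });
        j++; // line added or rewritten in this revision
      }
    }
    current = out;
  }
  return current;
}

const history: Revision[] = [
  { id: "r1", author: "ana", source: "human", text: "Intro\nMethod\nResults" },
  { id: "r2", author: "ana", source: "ai", text: "Intro\nMethod, clarified\nResults\nSummary" },
  { id: "r3", author: "ana", source: "human", text: "Intro\nMethod, clarified\nResults, checked\nSummary" },
];
for (const l of blame(history)) console.log(`${l.revision} ${l.source.padEnd(5)} ${l.line}`);
// r1 human Intro
// r2 ai    Method, clarified
// r3 human Results, checked
// r2 ai    Summary
```

A production version would more likely run `git blame` on the document's repo and read each commit's provenance back. The in-memory version just makes the attribution rule explicit: a line belongs to whichever edit last introduced it, human or AI.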

---

If your institution is still having braindead “AI yes or no” debates,

maybe transparency is the actual answer.

### ✉️ Feedback welcome.