Grok-os

Open-source release of xAI's LLM

216 followers

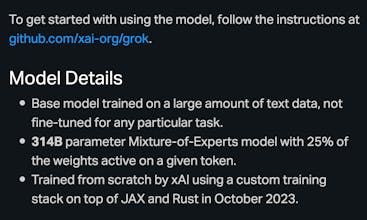

This release contains the base model weights and network architecture of Grok-1, xAI's large language model. Grok-1 is a 314-billion-parameter Mixture-of-Experts (MoE) model trained from scratch by xAI.
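The page does not describe how Grok-1 routes tokens between its experts. As a rough, generic illustration of what "Mixture-of-Experts" means, here is a minimal plain-Python sketch, assuming a top-2 softmax router over toy experts. The names `moe_forward` and `make_scaler`, the expert count, and the `top_k` value are all hypothetical and are not taken from xAI's release. The key idea shown is that each token is scored by a router, only the highest-scoring experts run, and their outputs are mixed by the renormalised gate weights.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int = 2,  # assumption for illustration only
) -> Vector:
    # Router: one logit per expert (dot product of the token with a gate vector).
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the top_k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalise the gate over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * y_j for o, y_j in zip(out, y)]
    return out


def make_scaler(s: float) -> Callable[[Vector], Vector]:
    # Hypothetical toy "expert": multiplies its input by a constant.
    return lambda v: [s * e for e in v]


# Usage: four toy experts scaling by 1, 2, 3, 4; router logits are 0, 1, 2, 3.
experts = [make_scaler(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
result = moe_forward([1.0, 0.0], gate_weights, experts, top_k=2)
print(result)
```

In this toy run only experts 3 and 2 are evaluated, which is the property that lets an MoE model hold many parameters while computing with only a fraction of them per token.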

Raycast

Product Hunt

Crewlix

file.coffee

Crustdata

Retexts

ShipFast-ASP.NET