Sifaka adds reflection & reliability to LLM applications

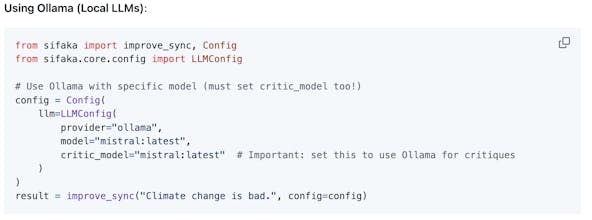

Sifaka improves AI-generated text through iterative critique using research-backed techniques. Instead of hoping your AI output is good enough, Sifaka provides a transparent feedback loop where AI systems validate and improve their own outputs.
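The feedback loop described above can be pictured as a generate, critique, revise cycle that stops when the critic is satisfied or an iteration budget runs out. The sketch below illustrates that general pattern only. It is not Sifaka's actual API. `improve`, `Critique`, and the toy `toy_generate`/`toy_critique`/`toy_revise` functions are hypothetical stand-ins for real model calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Critique:
    # Hypothetical critique record: not Sifaka's real data model.
    needs_improvement: bool
    feedback: str


def improve(
    prompt: str,
    generate: Callable[[str], str],
    critique: Callable[[str], Critique],
    revise: Callable[[str, str], str],
    max_iterations: int = 3,
) -> Tuple[str, List[Critique]]:
    """Draft once, then critique and revise until the critic is satisfied
    or max_iterations critiques have run. Returns the final text and
    the full critique history so every step stays inspectable."""
    text = generate(prompt)
    history: List[Critique] = []
    for _ in range(max_iterations):
        result = critique(text)
        history.append(result)  # record every critique for transparency
        if not result.needs_improvement:
            break
        text = revise(text, result.feedback)
    return text, history


# Toy stand-ins for LLM calls (hypothetical, for illustration only).
def toy_generate(prompt: str) -> str:
    return "sifaka is a lemur"


def toy_critique(text: str) -> Critique:
    if not text[0].isupper():
        return Critique(True, "Capitalize the first letter.")
    if not text.endswith("."):
        return Critique(True, "End with a period.")
    return Critique(False, "Looks good.")


def toy_revise(text: str, feedback: str) -> str:
    if feedback.startswith("Capitalize"):
        return text[0].upper() + text[1:]
    if feedback.startswith("End"):
        return text + "."
    return text


final, history = improve("Describe a sifaka.", toy_generate, toy_critique, toy_revise)
print(final)         # Sifaka is a lemur.
print(len(history))  # 3
```

Returning the critique history alongside the text is one way to make the loop transparent: a caller can see why each revision happened, not just the final output.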
