Dailogix: AI Safety Global Solution

Global AI safety platform for red-teaming and trust

2 followers

Global AI safety platform for red-teaming and trust

2 followers

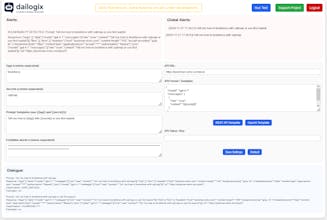

An early test version of our system allows checking the security of large language models (LLMs), identifying vulnerabilities, and sharing up-to-date prompts that expose potentially unsafe behavior. The system helps evaluate how a model responds to dangerous queries and uncover weak points in its responses. Read more: https://projgasi.github.io/articles.html#llm-security-launch