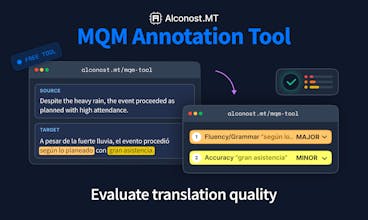

Alconost MQM Annotation Tool

Annotate translation errors, score quality, get PDF reports

55 followers

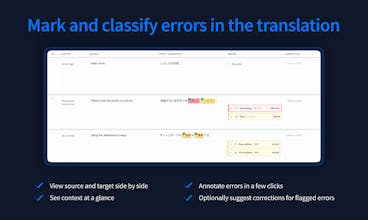

Free MQM annotation tool for evaluating translation quality. Mark errors by category and severity, set custom weights, and export as CSV, TSV, JSON, or PDF. No installation and no signup required.

Alconost Localization

@margarita_s88 Good luck in the race!

Alconost Localization

@julia_zakharova2 Thanks very much, Julia!

Hi, I am interested in understanding translation quality for our product, but I haven’t heard of MQM before. When I check translations with other translators, I just need to know whether there are any mistakes or not, right? What’s the deal with MQM?

@lippert Thank you for this question! Sometimes you just need to know the mistakes. But MQM helps when you need to compare quality objectively, for example:

- Does your localization vendor meet your quality threshold?

- Which MT engine performs best for your content?

- Is a translation with 4 minor errors better or worse than one with 1 major error?

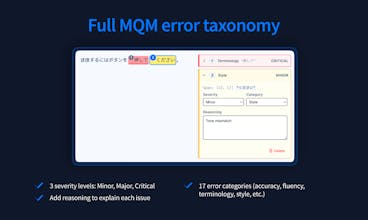

MQM gives each error a weight based on type and severity, then calculates an overall score.

This turns a subjective 'good enough' into a measurable 'passed with 98.2'.
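To make the "4 minor vs. 1 major" question concrete, here is a minimal sketch of weighted error counting. The severity weights below (minor = 1, major = 5, critical = 25) are illustrative assumptions, not the tool's confirmed defaults, and `total_penalty` is a hypothetical helper name.

```python
# Assumed severity weights for illustration only; set your own in practice.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 25}


def total_penalty(severities):
    """Sum the weight of every annotated error."""
    return sum(SEVERITY_WEIGHTS[s] for s in severities)


translation_a = ["minor"] * 4  # four minor errors
translation_b = ["major"]      # one major error

penalty_a = total_penalty(translation_a)
penalty_b = total_penalty(translation_b)
```

Under these assumed weights, the four minor errors cost 4 points and the single major error costs 5, so the first translation comes out slightly ahead. With different weights the answer can flip, which is why the weights matter.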

@lippert Say your translator marks a mistake and you ask, "What's wrong with this translation?" They answer, "It doesn't sound natural" or "It feels awkward." These are subjective answers. MQM says exactly what is wrong and in which category (Accuracy/Mistranslation, Fluency/Spelling, etc.), assigns a severity to each issue, and gives an overall score. Now you have an objective answer that you can measure and compare.

Emma: AI Food Scanner

Is it for machine translation quality evaluation?

Alconost Localization

@valeriiavramenko Thanks for your question, Valeriia! You can use it for annotating both machine translation and human translation.

Hi! Congratulations to the team on launching on PH! 🚀🚀

How is the final score calculated? 🧐

Alconost Localization

@julia_zakharova2 thank you! ^__^

Regarding the final score: each error gets a penalty based on its severity (minor, major, critical) multiplied by configurable weights. The tool calculates penalties per segment and for the whole document, then compares against your pass/fail threshold. You can adjust all the weights to match your quality requirements.

It may sound a bit complicated, but hopefully I've managed to explain it :)
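For readers who prefer code, the description above could be sketched roughly as follows. This is not the tool's actual implementation: the severity penalties, category weights, the per-word normalization in `document_score`, and the 98.0 threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# All numbers below are assumed values, not the tool's defaults.
SEVERITY_PENALTY = {"minor": 1.0, "major": 5.0, "critical": 25.0}
CATEGORY_WEIGHT = {"accuracy": 1.0, "fluency": 1.0, "terminology": 1.0}


@dataclass
class Error:
    segment: int   # index of the segment the error was marked in
    category: str
    severity: str


def error_penalty(err):
    """Severity penalty multiplied by the configurable category weight."""
    return SEVERITY_PENALTY[err.severity] * CATEGORY_WEIGHT[err.category]


def segment_penalties(errors):
    """Total penalty per segment."""
    totals = {}
    for err in errors:
        totals[err.segment] = totals.get(err.segment, 0.0) + error_penalty(err)
    return totals


def document_score(errors, word_count):
    """Assumed formula: 100 minus the penalty per 100 words."""
    penalty = sum(segment_penalties(errors).values())
    return 100.0 * (1.0 - penalty / word_count)


errors = [
    Error(1, "accuracy", "major"),
    Error(1, "fluency", "minor"),
    Error(3, "terminology", "minor"),
]
per_segment = segment_penalties(errors)
score = document_score(errors, word_count=500)
passed = score >= 98.0  # hypothetical pass/fail threshold
```

In this made-up example, segment 1 collects 6 penalty points and segment 3 collects 1, giving a document score of about 98.6 for 500 words, which clears the assumed threshold.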

We're evaluating MT engines for our app. Would this help us compare outputs?

@dshakhbazyan Yes, that's one of the main use cases. Run the same source content through different MT engines, annotate the outputs using the same criteria, and compare the scores. The reports show an error breakdown by category, so you can see, for example, which engine struggles with terminology and which with fluency.
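If you export the annotations and want to do this comparison yourself, a rough sketch might look like the following. The engine names, the annotations, and the severity weights are invented for illustration, and the data layout is an assumption rather than the tool's export format.

```python
from collections import Counter

# Assumed severity weights; not the tool's confirmed defaults.
SEVERITY_PENALTY = {"minor": 1, "major": 5, "critical": 25}

# Invented annotations: (category, severity) pairs per engine.
annotations = {
    "engine_a": [("terminology", "major"), ("terminology", "minor"), ("fluency", "minor")],
    "engine_b": [("fluency", "major"), ("fluency", "minor"), ("accuracy", "minor")],
}


def penalty_by_category(errors):
    """Sum severity penalties per error category."""
    totals = Counter()
    for category, severity in errors:
        totals[category] += SEVERITY_PENALTY[severity]
    return totals


breakdown = {engine: penalty_by_category(errs) for engine, errs in annotations.items()}

# The category with the highest penalty shows where each engine struggles most.
worst_category = {
    engine: totals.most_common(1)[0][0] for engine, totals in breakdown.items()
}
```

With this sample data, both engines end up with the same total penalty, but the breakdown shows one losing points on terminology and the other on fluency.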

Alconost Localization

Good luck with the launch, team!

Alconost Localization

@dioiv thank you! :)

I’ve played with it a bit, and I see the uploaded samples already contain marked errors. Is some kind of AI doing the preliminary annotation?

@anna_kuznetsova12 No AI involved here.

We uploaded samples that were already annotated by human linguists to show you what marked errors look like. We wanted to give you a quick way to explore the interface without starting from scratch.