Parallax by Gradient

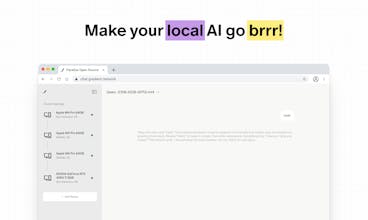

Host LLMs across devices sharing GPU to make your AI go brrr

Your local AI just leveled up to multiplayer. Parallax is the easiest way to build your own AI cluster to run the best large language models across devices, no matter their specs or location.

Parallax by Gradient

Hello Product Hunt 👋,

Everyone loves free, private LLMs. But today, they’re still not as scalable or easy to use as they should be.

We’ve always felt that local AI should be as powerful as it is personal, and this is why we built Parallax.

Parallax started from a simple question: what if your laptop could host more than just a small model? What if you could tap into other devices (friends, teammates, your other machines) and run something much bigger, together?

We made that possible. Parallax is the first framework to serve models in a fully distributed way across devices, regardless of hardware or location.

No one will ever be GPU-poor again!

In benchmarks, Parallax already surpasses other popular local AI projects and frameworks, and this is just the beginning. We’re working on LLM inference optimization techniques and deeper system-level improvements to make local AI faster, smoother, and so natural it feels almost invisible.

Parallax is completely free to use, and we’d love for you to try it and build with us!

@rymon Wow! This is the project I was "hunting" for so long! Thank you Gradient for building this and open sourcing it. Here comes the wave of innovators. I see so many possibilities - are you partnering with HuggingFace, Apple/MLX, Ollama...? Would love to hear about your roadmap and hopefully contribute to this lovely project.

Theysaid

This is wild. Love the idea of turning idle devices into something useful instead of letting that GPU power go to waste. The “no one will ever be GPU-poor again” line definitely hits 😄

Parallax by Gradient

@chrishicken Super excited that you love our product! We would love for everyone to eventually own their models, memory, and agents. We believe this marks an important step towards personal sovereignty.

Parallax by Gradient

@chrishicken Thanks for the support Chris, totally agree with you! Besides that, this architecture allows for sovereign, privacy-preserving AI. Users can host personal or organizational models locally while still participating in a global computation mesh. 😄 Try it, you'll love it!

Parallax by Gradient

Hi everyone, this is Eric, co-founder of Gradient.

The Gradient team behind Parallax is a group of engineers and scientists driven by a shared belief that open intelligence will be one of humanity’s most important assets.

And that’s why we are working on foundation models and rebuilding the training & serving stacks to push the frontier of open intelligence.

In short, Gradient focuses on the creation and delivery of open intelligence.

Parallax is our first step in making access to intelligence feel as easy as turning on a light.

And we have a lot more exciting stuff coming up so stay tuned!

To contribute to Parallax: https://github.com/GradientHQ/parallax

To learn more about us: https://gradient.network

Mom Clock

Excited to hunt Parallax today!

It’s the first framework I’ve seen that makes distributed local inference feel effortless — no more GPU bottlenecks. Big milestone for open intelligence, congrats Gradient team 👏

Parallax by Gradient

@justin2025 Thank you for hunting Parallax and for the kind words!

That's exactly what we aim to deliver with Parallax: a unified and scalable experience to power your sovereign AI modes and applications.

What we are especially proud of:

3 modes, 1 fabric, all sovereign. Local host with a single machine; Co-Host over LAN with friends and teammates; Global Host on a wide area network (WAN) across locations.

Deploy on a Mac+PC mix, no public IP required, traceable runs end to end.

Supporting 40+ open models on launch, from 0.6B to trillion-class MoE.

We would love to hear what you build and what can be made smoother. Issues and PRs welcome.

Thanks for helping push open intelligence forward with us!

Parallax by Gradient

@adamy_gn So happy to launch Parallax yesterday!

Mom Clock

@adamy_gn Yeah, I happen to have a Mac Mini M1 idle, it will be my homework tonight :D

Parallax by Gradient

@justin2025 niiiiice!

Let us know how it goes🚀

Congrats on shipping this!

The messaging is sharp; I mean, ‘AI goes brrr’ has substance behind it. Love it!

Distributed compute made simple is a killer value prop if you nail onboarding and clarity around use cases.

Parallax by Gradient

@nosheen_kanwal Great suggestion! Feel free to reach out to us anytime!

@joysong_j Thanks, and sure. What is the best way to stay in touch?

Just followed you on LinkedIn

Agnes AI

Pooling devices for distributed LLMs is terrific.... my laptop always struggles solo :( Does Parallax handle dynamic connections if friends drop in and out mid-session? Would love to try out asap!

Parallax by Gradient

@cruise_chen We will add node auto-rebalancing very soon, so anyone can tap in and tap out mid-session. Stay tuned!

Parallax by Gradient

@pavel_evteev Yes! Sovereignty is great!