Launching today

markupR

Open-source AI bug reports. Free forever, BYOK/local.


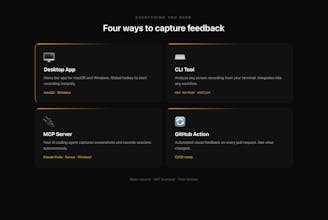

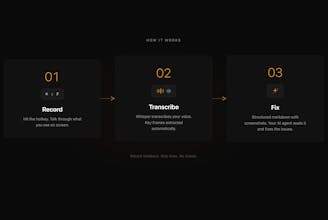

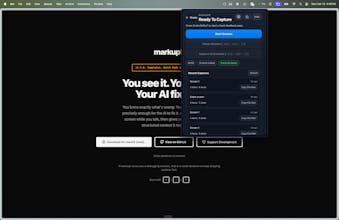

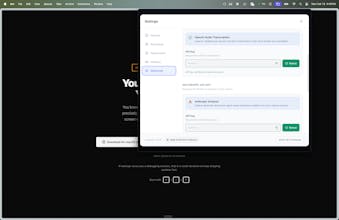

AI coding agents are blind. When you find a bug, you have to context-switch into writing mode: describing the layout, taking screenshots by hand, and hoping the agent understands. markupR records your screen while you narrate what's wrong. It then runs a pipeline that correlates your voice with the video and produces structured Markdown, with each screenshot placed next to the narration it illustrates. It ships as a desktop app, a CLI, an MCP server for Claude Code/Cursor/Windsurf, and a GitHub Action. MIT licensed.
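The post doesn't specify the report format beyond "structured Markdown with screenshots." Here is a minimal sketch of that final rendering step. It assumes the pipeline has already produced a list of (timestamp, narration, screenshot path) triples. The function names, heading layout, and file paths are hypothetical and are not markupR's actual report format.

```python
def fmt_ts(seconds):
    """Format a time in seconds as MM:SS for headings and image alt text."""
    mm, ss = divmod(int(seconds), 60)
    return f"{mm:02d}:{ss:02d}"


def render_report(title, moments):
    """Render hypothetical (timestamp, narration, screenshot_path) triples as Markdown.

    Each screenshot is placed directly under the narration it illustrates.
    """
    lines = [f"# {title}", ""]
    for i, (t, text, shot) in enumerate(moments, start=1):
        ts = fmt_ts(t)
        lines += [f"## {i}. [{ts}] {text}", "", f"![Frame at {ts}]({shot})", ""]
    return "\n".join(lines)


print(render_report("Settings page bugs", [
    (3.5, "This button is overlapping the header.", "screenshots/frame-001.png"),
    (72.0, "The save toast never disappears.", "screenshots/frame-002.png"),
]))
```

Keeping each image immediately after its narration means an agent reading the file top to bottom sees the description and the matching frame together.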

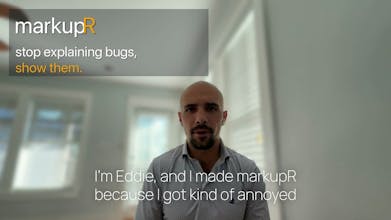

Hey Product Hunt — I'm Eddie, and I built markupR because the feedback loop between developers and AI coding agents could be better.

I was using Claude Code/Codex/Gemini daily and kept hitting the same friction: I'd find a visual bug, then spend 2-3 minutes writing a text description, screenshotting, cropping, and dragging images into the right order. I realized I could just talk through what I see in 30 seconds and have a tool do the rest.

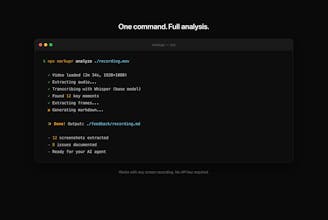

The breakthrough was combining timestamp correlation with mouse-position tracking and active-window tracking. Instead of capturing screenshots on a timer, markupR uses Whisper to transcribe your voice, analyzes the transcript for key moments, and then extracts video frames at those exact timestamps. Every screenshot in the output shows what you were looking at when you described the issue. Finally, an AI model analyzes the transcript and the frames together and produces an AI-readable report and file structure, so your agent can quickly pinpoint and fix the issues you described.
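Here is a rough sketch of the timestamp-correlation idea. The Python below picks "key moments" from Whisper-style transcript segments (start and end times in seconds plus text) and builds an ffmpeg command that grabs the frame at each moment. The `Segment` shape, the cue-word heuristic, and the midpoint rule are all assumptions for illustration. The post says markupR analyzes the transcript for key moments but doesn't say how, and the real pipeline may use an AI model rather than keywords.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical transcript segment: start/end in seconds plus spoken text."""
    start: float
    end: float
    text: str


# Hypothetical cue words suggesting the speaker is pointing at something on screen.
CUE_WORDS = {"this", "here", "broken", "wrong", "bug", "overlapping", "missing"}


def key_moments(segments, cue_words=CUE_WORDS):
    """Return (timestamp, text) pairs for segments containing a cue word.

    The timestamp is the segment midpoint (an assumed rule), roughly when
    the speaker was describing what was on screen.
    """
    moments = []
    for seg in segments:
        # Lowercase and strip surrounding punctuation before matching.
        words = {w.strip(".,!?;:\"'").lower() for w in seg.text.split()}
        if words & cue_words:
            moments.append(((seg.start + seg.end) / 2, seg.text))
    return moments


def frame_extract_cmd(video_path, t, out_path):
    """Build an ffmpeg argv that writes the single frame at time t (seconds).

    Placing -ss before -i seeks in the input; -frames:v 1 stops after one frame.
    """
    return ["ffmpeg", "-ss", f"{t:.3f}", "-i", video_path, "-frames:v", "1", out_path]


segments = [
    Segment(0.0, 2.0, "Okay, opening the settings page."),
    Segment(2.0, 5.0, "This button is overlapping the header."),
    Segment(5.0, 7.0, "Everything else looks fine."),
]
for i, (t, text) in enumerate(key_moments(segments), start=1):
    # Pass this list to subprocess.run(...) to actually extract the frame.
    print(frame_extract_cmd("session.mp4", t, f"screenshots/frame-{i:03d}.png"))
```

Capturing frames only at narrated moments is what separates this approach from timer-based screenshots: a frame is extracted only when you were talking about something on screen.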

There's also an MCP server, so your agent can call markupR and "sit with you" while you use an app, then quickly fix everything that came up.

It's fully open source (MIT), runs locally by default, and I'd love your feedback on what to build next.