Launching today

Sled

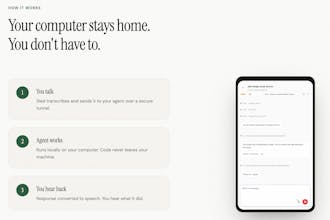

Run your coding agent from your phone, with voice

261 followers

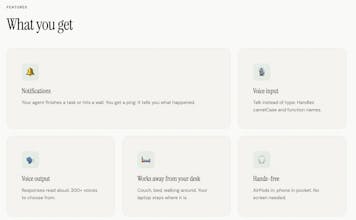

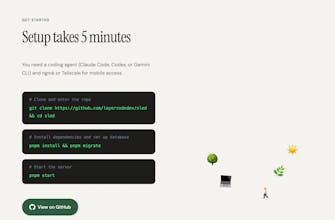

Sled lets you run your coding agent from your phone using voice. Coding agents need frequent input, but when you step away from your desk they just sit idle. Sled solves this by giving you a voice interface that connects securely to your local agent over Tailscale. Your code never leaves your machine. You talk, the agent works locally, and you hear the result read back. Works with Claude Code, OpenAI Codex, and Gemini CLI. Fully open source.

Survey Loop

@jack_bridger Hi Jack, congrats on the launch. This is brilliant in theory, but how have you solved the silly practical problems: driving or transit, looking up architecture or features, and other deskbound stuff?

Survey Loop

@zolani_matebese Thanks! Good questions! I would say this is a supplement to desk work, not a complete replacement. And of course I don't recommend using it while driving, but in theory it should work anywhere it's safe to speak aloud.

PicWish

@jack_bridger Congrats!

What happens if the speech-to-text hallucinates a command? Does it have a dry-run mode or a confirmation step for high-risk shell commands?

Layercode

@jack_bridger @mohsinproduct Voice messages don't auto-send, so you can review the transcribed text before sending it.

What happens if the agent needs code review or visual context?

Survey Loop

@lak7 For visual context, you can connect a browser skill and trust the agent to make a good choice. Sled is more for communicating high-level tasks, on the assumption that you already trust the agent to do good work.

Swytchcode

Really awesome team! Super excited to try it out.

Survey Loop

@chilarai Thanks mate, hope you're well!

Swytchcode

@jack_bridger I'm great. Really happy to see you topping the charts.

Solving the need to babysit local agents every 10–60 minutes is such a smart wedge. Is this agnostic to the agent framework I'm using, or is it optimized for specific ones like OpenDevin?

Survey Loop

@samet_sezer Thanks! It works with any agent framework that supports ACP.

Congrats on the launch. What about reviewing the state of the code?

Survey Loop

@jeetendra_kumar2 Right now it's focused on telling the agent what to do and trusting it (though you can stop its activity completely), and less on reviews for this launch. But based on all the requests, we should build in a great review flow!

Agnes AI

Sled is absolutely a cure for most vibe builders: human in the loop is necessary! Great launch, team!

bunny.net

Well done, congrats on the launch!