Snyk, the leader in secure AI software development, empowers organizations to build fast and stay secure by unleashing developer productivity and reducing business risk. The company’s AI Security Platform seamlessly integrates into developer and security workflows to accelerate secure software delivery in the AI Era. Snyk delivers trusted, actionable insights and automated remediation, enabling modern organizations to innovate without limits and secure AI-driven software for over 4,500 customers.

This is the 7th launch from Snyk.

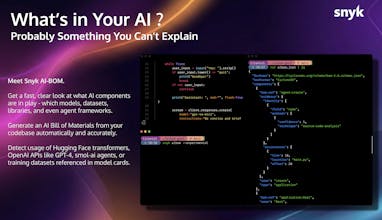

Snyk AI-BOM

The Snyk AI-BOM CLI maps the critical AI components powering your application, including AI models, datasets, and external services. It extends the traditional SBOM to create a clear inventory of everything your AI code relies on. Use Snyk AI-BOM to detect and map dependencies created via the MCP open standard, providing security and engineering leaders with the governance insights they need. Audit AI usage, track LLM providers, and ensure compliance with one command.

Free

Why we built the Snyk AI-BOM CLI

AI components like models and external services are the new dependencies, and they are moving into production faster than we can track them. Engineering and security leaders kept asking us: "Which models are we actually using?" and "Are we compliant?" The answer was almost always, "We think it's these, but we have no central inventory." When an AI model is deprecated, a service changes its API, or developers adopt out-of-policy models, you need to know instantly which of your apps are affected. Waiting for a security audit to find out felt far too slow and way too risky.

What we're solving

Eliminating the AI Blind Spot: Snyk provides a comprehensive AI Bill of Materials (AIBOM) that goes beyond just pip dependencies to map the entire AI supply chain, including LLMs, datasets, MCP servers, and external API calls.

Governing the "Hidden" Connections: We specifically built in the ability to detect and map connections made via the MCP open standard, creating a clear dependency graph for the services that your AI application relies on but that often go unrecorded.

Answering Critical Questions Instantly: Instead of manual tracking or hunting through code, you can use the CLI to audit usage across your organization for specific frameworks, LLM providers, or potentially vulnerable AI components.

How we got here

The traditional Software Bill of Materials (SBOM) is crucial, but it was not designed for the complexities of modern AI applications. We realized that governance had to start with visibility. We prototyped a simple scanner to identify models and datasets, and quickly saw the need to map the services and tools they connect to. The core of this is the Snyk AI-BOM API, and we built it into the Snyk CLI to make that power accessible for any developer or security practitioner to run scans locally or integrate them easily into their CI/CD process. The goal is to make AI component tracking as routine and simple as tracking your open-source libraries.

What to try today:

Run the CLI in one of your Python projects that uses an LLM or an AI framework (e.g., PyTorch, LangChain).

See the resulting AIBOM and check the dependency chain. Look specifically for how it maps the external LLM provider or any MCP connections you might have.

Use the ai-bom-scan functionality (https://github.com/snyk-labs/ai-...) to find repositories in your organization that mention a specific model like deepseek or an API provider like anthropic.
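Once you have a JSON export, the second and third steps above can be roughly scripted. Here is a minimal Python sketch, assuming the JSON follows a CycloneDX-style layout with a top-level `components` array whose entries carry `type` and `name` fields. That layout is our assumption for illustration, and the sample document and function names are hypothetical, so adjust the field names to match the output you actually get.

```python
import json
from collections import defaultdict


def summarize_components(bom: dict) -> dict[str, list[str]]:
    """Group component names by their `type` field (assumed CycloneDX-style)."""
    grouped = defaultdict(list)
    for component in bom.get("components", []):
        kind = component.get("type", "unknown")
        grouped[kind].append(component.get("name", "<unnamed>"))
    return {kind: sorted(names) for kind, names in grouped.items()}


def find_components(bom: dict, keyword: str) -> list[str]:
    """Return names of components whose name contains `keyword`, ignoring case."""
    needle = keyword.lower()
    return sorted(
        component.get("name", "")
        for component in bom.get("components", [])
        if needle in component.get("name", "").lower()
    )


# Hypothetical sample written by hand for illustration; not real Snyk output.
SAMPLE_BOM = json.loads("""
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.6",
  "components": [
    {"type": "machine-learning-model", "name": "deepseek-chat"},
    {"type": "library", "name": "langchain"},
    {"type": "library", "name": "anthropic"},
    {"type": "data", "name": "support-tickets"}
  ]
}
""")

if __name__ == "__main__":
    # Print one line per component type, then search for a provider by name.
    for kind, names in summarize_components(SAMPLE_BOM).items():
        print(f"{kind}: {', '.join(names)}")
    print(find_components(SAMPLE_BOM, "anthropic"))
```

To use it on a real export, replace `SAMPLE_BOM` with `json.load(open(path))` for your own file and check that the field names match.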

We'd love your feedback on the clarity of the AIBOM output, how easily it fits into your existing security/governance workflow, and which AI frameworks you'd like to see better support for next.

Thanks for checking out the Snyk AI-BOM CLI. We're excited to help you get control over your AI supply chain!

I am very interested to find out what the next steps will be. AI dependency mapping is not straightforward, and this might be the first tool to handle it in a production-like environment.

Snyk

@ana_popescu2 thanks for the interest Ana, totally relate to the problem of rogue AI and just plain old shadow AI being scattered all around in devs and infrastructure ;-)

At Snyk, we're building this first of all via the Snyk CLI, which exposes an AI-BOM construct that provides detection findings in JSON or HTML format. We appreciate that DevOps and Security Ops teams would initially favor these formats for their own scripts and mechanics, but we're also looking ahead by building discoverability into Evo: evo.ai.snyk.io

I recall asking someone just a year ago if they thought AI-BOMs would actually become a thing... Since then, I have been increasingly hearing from members of our community asking how they can determine what's running behind the scenes when they use third-party models. Great feature!

Snyk

@gerald_crescione1 oh yes, this fits very naturally into operations and security engineering orgs. You were ahead of everyone, Gerald. Nice! 👏

@liran_tal SREs are also very interested in that, actually.

Snyk

@gerald_crescione1 makes sense!

Can I integrate @Snyk with existing IDEs and GitHub Workflows?

Snyk

@amir_tadrisi 100% yes Amir, here's how to get started with the IDE plugins: https://snyk.io/platform/ide-plugins/

If you're vibe coding then go straight to https://snyk.io/ai-vibe-check/ and get the Snyk Studio extension installed for Cursor or Claude Code or any of the other AI coding agents.

@liran_tal Awesome thank you Liran

Snyk

@amir_tadrisi anytime :-)

Product Hunt

Snyk

@de 🙏💪