Pongo

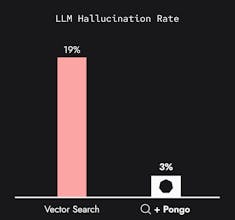

Reduce LLM Hallucinations by 80%

37 followers

Pongo greatly improves the accuracy of RAG pipelines using its semantic filter technology. This improved accuracy results in 80% fewer incorrect or partially correct answers from LLMs. Pongo integrates into existing RAG pipelines with a single line of code.