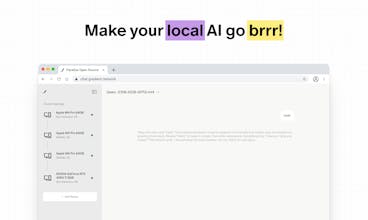

Parallax by Gradient

Host LLMs across devices sharing GPU to make your AI go brrr

839 followers

Your local AI just leveled up to multiplayer. Parallax is the easiest way to build your own AI cluster to run the best large language models across devices, no matter their specs or location.

YouMind

Really exciting idea: turning idle devices into a distributed inference cluster feels practical and privacy-friendly.

Quick question: how do you handle latency and bandwidth variability across WAN peers to keep inference smooth for real-time apps? Would love clarity on any built-in QoS or fallback strategies.

Nice launch!

Parallax by Gradient

@janette_szeto Thanks for your support!

Kira.art

Love the vision. Curious if Parallax could work across both Mac and Linux devices in the same cluster.

Parallax by Gradient

@ccddz Yes! Parallax supports building a local cluster regardless of each device's hardware configuration. Heterogeneity is a core feature for making local AI fully scalable, and we will also support more hardware types in the future!
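For readers wondering how one model can be shared across a Mac and a Linux box with very different amounts of memory: the thread doesn't describe Parallax's actual scheduler, so the following is only a minimal sketch of one common approach, pipeline-style layer splitting, where each device holds a contiguous block of transformer layers sized in proportion to its free memory. `Device`, `split_layers`, and the device names are hypothetical and not part of Parallax.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str              # hypothetical identifier; assumed unique per device
    free_memory_gb: float  # memory available for model weights


def split_layers(num_layers: int, devices: list[Device]) -> dict[str, range]:
    """Assign contiguous layer blocks to devices in proportion to free memory.

    Illustrative sketch only, not Parallax's real placement logic.
    """
    if num_layers <= 0 or not devices:
        raise ValueError("need at least one layer and one device")
    total = sum(d.free_memory_gb for d in devices)
    if total <= 0:
        raise ValueError("devices report no free memory")

    # Ideal fractional share of layers per device, rounded down.
    shares = [num_layers * d.free_memory_gb / total for d in devices]
    counts = [int(s) for s in shares]

    # Give the leftover layers to devices with the largest fractional remainders.
    leftover = num_layers - sum(counts)
    order = sorted(range(len(devices)), key=lambda i: shares[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1

    # Convert counts into contiguous [start, end) ranges: one pipeline stage per device.
    plan: dict[str, range] = {}
    start = 0
    for device, count in zip(devices, counts):
        plan[device.name] = range(start, start + count)
        start += count
    return plan
```

For example, a 32-layer model over a 64 GB Mac, a 24 GB Linux GPU box, and a 16 GB laptop yields layers 0-19, 20-26, and 27-31 respectively. Activations would then flow from one stage to the next over the network, which is why latency between peers matters in this kind of setup.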

Kira.art

Can this run behind NAT or in environments without public IPs? Wondering how peer discovery works there.

Parallax by Gradient

Yes! Our Lattica stack in Parallax does automatic NAT hole punching. Some use cases, such as exposing your locally hosted API endpoint to the public internet, may still require a public IP for now. We will also work on supporting more networking scenarios!

Looks pretty great! I wonder if it's possible to add more models, as it seems like there are only two available right now.

Parallax by Gradient

@aemondsensei We have many available models if you host your own AI cluster! The two you see are in a frontend chatbot interface that we built to showcase how Parallax works. You should definitely try hosting your own cluster with Parallax!

GraphBit

Really love what you’re building here; Parallax tackles distributed inference beautifully. We’re working on GraphBit, which focuses on the orchestration layer: making AI agents run reliably and concurrently once those models are deployed. It feels like what you’re building (how models run) and what we’re solving (how intelligence executes) could complement each other perfectly. Would love to explore a possible collaboration! My email: musa.molla@graphbit.ai

Parallax by Gradient

@musa_molla Thanks for your kind words! Maybe we’ll have a chance to collaborate in the future.

This is such a smart direction; a distributed local AI cloud could really change how people think about compute access.

Have you thought about adding a way for users to share or rent out idle GPU power within a trusted network?

Parallax by Gradient

@farhan_nazir55 As more and more users start using Parallax, this is totally possible! Let's build together!