meter

Monitor changes on any website's data without infra overhead

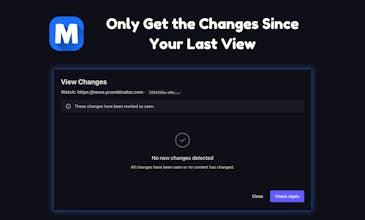

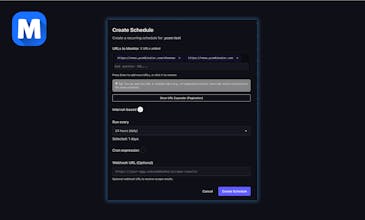

Scrape once. Know when it changes.

I built Meter because I was tired of re-scraping pages that hadn't changed, and paying for it every time. Describe what you want in plain English, and we handle anti-bot measures, proxies, retries, and all the infrastructure you'd rather not build. When real content changes (not ads, timestamps, or layout noise), you get a webhook. Teams use it to monitor job boards and track competitor pricing, among other things. One team cut embedding costs by 95% by only re-processing what's new.
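To make the "real content, not noise" idea concrete, here is a minimal sketch of one way such a check could work. It is not Meter's actual implementation: the record shape, the `NOISE_FIELDS` set, and the function names are all assumptions made up for illustration.

```python
import hashlib
import json

# Hypothetical noise fields (assumption); a real system would pick these differently.
NOISE_FIELDS = {"scraped_at", "ad_slot"}


def content_fingerprint(record: dict) -> str:
    """Hash only the meaningful fields, with whitespace normalized."""
    meaningful = {
        key: " ".join(str(value).split())
        for key, value in record.items()
        if key not in NOISE_FIELDS
    }
    # sort_keys makes the serialization independent of dict ordering.
    payload = json.dumps(meaningful, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def has_changed(previous: dict, current: dict) -> bool:
    """True only when a non-noise field differs between two scrapes."""
    return content_fingerprint(previous) != content_fingerprint(current)
```

With this approach, a new timestamp or a stray trailing space leaves the fingerprint untouched, while a changed price or title produces a different one, and that difference is the point at which a webhook would be worth sending.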

I've written thousands of scrapers for different use cases, and I always wanted a tool that could do most of the heavy lifting for me. Current tooling is too expensive at scale, and I wanted something pattern-based. Most scraping use cases apply the same pattern across many pages within a site, and Meter takes advantage of that.

As an example, I used to extract forum posts from different sites. Each page used the same selectors, but tracking what was new and what wasn't was the hard part. With Meter, I simplified both pattern generation and the hosting and change detection of the scrapers.
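The "what is new and what isn't" problem from the forum example can be sketched roughly as below. This is an illustration under stated assumptions, not how Meter does it: posts are assumed to be dicts with `author` and `body` keys, and `post_key` and `find_new_posts` are hypothetical names.

```python
import hashlib


def post_key(post: dict) -> str:
    """Derive a stable identity for a post from its author and body (assumed fields)."""
    raw = f"{post['author']}\n{post['body']}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def find_new_posts(seen_keys: set, scraped_posts: list) -> list:
    """Return posts not seen on earlier runs, and record them in seen_keys."""
    new_posts = []
    for post in scraped_posts:
        key = post_key(post)
        if key not in seen_keys:
            seen_keys.add(key)
            new_posts.append(post)
    return new_posts
```

In practice the `seen_keys` set would have to be persisted between runs (a database table or key-value store), and keeping that state around for every site is the kind of hosting overhead described above.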

We have a free tier and a free trial for paid plans. Happy to do pilots for specific use cases. We have lots of users migrating from Firecrawl and replacing their fragmented scraping infrastructure.

We have some cool stuff on the roadmap and I'd love to connect with anyone interested.

Thanks for reading!