Launching today

Lyria 3 by Google DeepMind

Turn any photo or thought into a custom song inside Gemini

81 followers

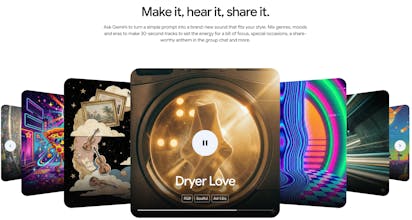

The Gemini app now features Lyria 3, Google DeepMind's most advanced music generation model. Now in beta, it lets anyone turn photos, vibes, or ideas into 30-second songs with vocals and cover art, straight from the Gemini app or website.

I’m excited to hunt something that genuinely expands what “creative tools” mean in 2026.

Meet Gemini with Lyria 3: Google DeepMind’s most advanced generative music model, now built directly into the Gemini app.

This isn’t just another “type a prompt, get a beat” tool. It’s a full-stack AI music collaborator.

How does Lyria 3 work?

Lyria 3 is a high-fidelity music generation model that lets anyone create 30-second custom tracks using:

Text prompts

Photos

Videos

Describe a vibe like: “A comical R&B slow jam about a sock finding their match” …and Gemini generates a polished, structured, catchy track, complete with lyrics and vocals.

Upload a hiking photo of your dog? It composes a song inspired by it.

This is music as expression, not production complexity.

Core features and capabilities

1️⃣ Text → Full Track

Genre, mood, tempo control

Lyrics auto-generated (or bring your own)

Realistic, nuanced vocals

Instrumental-only options

2️⃣ Image/Video → Soundtrack

Turn visuals into high-fidelity music

Mood-aware lyric writing

Perfect for social content

3️⃣ Creative Control

Direct vocal style

Specify acoustic preferences

Adjust complexity and arrangement

4️⃣ Professional-Grade Output

Clean, structured tracks

Export-ready audio

Custom AI-generated cover art

5️⃣ Built-In Safety & Watermarking

All tracks embedded with SynthID

AI content verification inside Gemini

Why is Lyria 3 different?

There are plenty of AI music tools out there. Here’s where this feels distinct:

Musicality, not just loop generation

No lyrics required

Multi-language support

Visual-to-Music pipeline

Responsible AI Layer

Use cases I am excited about:

Personalized birthday songs in seconds

Instant background music for Shorts/Reels

Community creators generating meme music

Emotional gifts (parents, partners, inside jokes)

Indie creators prototyping soundtrack ideas

Marketers testing vibe-based audio branding

This lowers the barrier from idea to emotional artifact.

My personal take: What’s powerful here isn’t that AI can “make music.” It’s that this removes friction between feeling something and expressing it musically.

Over to you!

Would you use this for content? For fun? Or as a serious creative tool? Let me know in the comments. :)

Has anyone gotten good results with this that they'd like to share? The generations feel pretty good, but still a bit slop-y.