Lovelaice

Multi-model AI testing, evaluation, optimization made simple

19 followers

Multi-model AI testing, evaluation, optimization made simple

19 followers

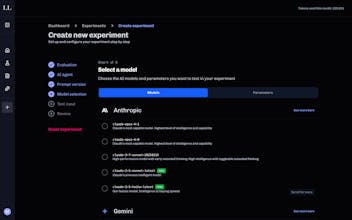

Test prompts across any AI model, evaluate results, and optimize performance in one platform. Compare OpenAI, Claude, Gemini and more side-by-side. Get clear rankings and prompt suggestions. Deploy the best version via API- no tech background required.

👋 Hi everyone, we’re Cata (serial entrepreneur, ex-CTO, founder, software developer with 14+ years of experience, 10 of them in startups) and Mada (product manager with 10+ years of experience). Over the last year we’ve supported more than 10 different teams trying to integrate AI into their products.

The Problem We Keep Seeing

Across 10+ AI projects, we've watched the same pattern repeat:

- CEOs get excited about AI → Quick initial test shows promise → Reality hits with edge cases and inconsistent results → Project gets abandoned or ships with suboptimal performance

Why? Because we're treating AI like traditional software development, when it's fundamentally different. Traditional code gives you the same output every time. AI is probabilistic - the same prompt can return completely different answers.

The "Copy-Paste Hell" Reality

Most teams today experiment in ChatGPT, then hand prompts to engineers expecting identical results. But ChatGPT has hidden system prompts that disappear when you integrate via API. Result? Weeks of back-and-forth between product and engineering teams, 35 different prompt versions in Notion docs, and zero systematic way to know what actually works.

Meanwhile, domain experts - the people who actually understand the business context - are completely locked out of the process.

Why Experimentation Isn't Optional in AI Product Building

New models drop every two weeks. Your prompt that worked last month might be outperformed by a newer, cheaper model today. Without systematic evaluation, you're flying blind. We've seen teams stick with their first implementation for months, missing 40% performance improvements simply because they had no efficient way to test alternatives.

Enter Lovelaice

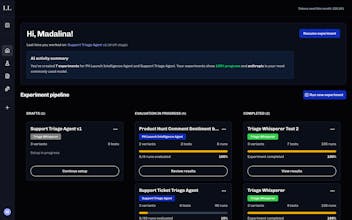

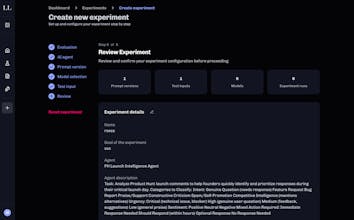

We built the platform we wished existed - where product managers, domain experts, and engineers can collaborate on AI development without the technical bottlenecks. Test any prompt across any model, evaluate results systematically, and deploy with confidence.

Free Beta Access

We're opening our beta to the Product Hunt community. Bring your API keys, and we'll handle the rest. Let's turn your AI experiments from chaotic guesswork into systematic optimization.

If you are overwhelmed by existing AI evaluation tools, but want to systematically measure your AI product's performance and make data-backed decisions, you are going to love Lovelaice.

Ready to unlock your AI's real potential? 🚀

Yoohoo. so exciting!!! Let's go! @Lovelaice

Super nice tool @catalina_turlea1 ! A few questions on production deployments:

- When models update weekly, can teams re‑run saved test suites and see statistically meaningful deltas (e.g., confidence intervals, effect sizes) rather than just rank changes?

- On deployment: if prompts are versioned and pushed via API, how do you manage rollout safety (staged rollouts, canary tests, rollback triggers) and audit trails for compliance?

- Finally, have you seen cases where a “worse” model by general benchmarks wins on real KPIs after your process? Would love to hear examples.

@artyom_chelbayev1 thank you so much for the amazing questions!

Yes, teams can see all their experiments metrics in one view and see nut just the overall accuracy but also the average and max latency and costs. We are also planning with the first user feedback to improve those metrics to views and dashboards that are bring more value for teams - what this mean might be different depending on the use case

Atm, we not have rollout strategy - but definitely a very valid point

Ah yes, this was also for us a very cool find. For solving problems like extracting phone numbers o addresses from written communication(like emails or chat messages) with a bit of prompt engineering most models did a pretty good job. The interesting part is that some models were twice as fast and twice as cheap as the "most inteligent" model. This could be a real game changes for companies running these kind of requests at large scale.

Thanks for the support!

Cata and the Lovelaice team