DeepEP

Powering DeepSeek-V3's MoE Performance

8 followers

DeepEP, from DeepSeek, is the open-source communication library powering the DeepSeek-V3 MoE model. Optimized for Hopper GPUs, NVLink, and RDMA.

Flowtica Scribe

Hi everyone!

Sharing DeepEP, a new open-source communication library from DeepSeek AI. This is the technology that powers their impressive DeepSeek-V3 model! It's a highly technical project, but a crucial one for anyone working with Mixture-of-Experts (MoE) models at scale.

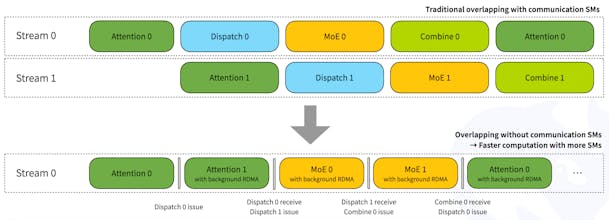

DeepEP is specifically designed to optimize the all-to-all communication that's critical for MoE training and inference. It's built for NVIDIA Hopper GPUs and takes advantage of NVLink and RDMA. It's even tailored to the "group-limited gating algorithm" used in DeepSeek-V3.
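For anyone new to MoE, here is a rough, single-process sketch of what that all-to-all traffic looks like. It is not DeepEP's API: every function name below is hypothetical, tokens are plain floats, the "ranks" are just Python lists, and the group scoring rule (best expert per group) is an assumption made for illustration. The two phases are often called dispatch (send each token to the ranks hosting its chosen experts) and combine (send expert outputs back and sum them); gate weights are left out to keep it short.

```python
import random

def group_limited_topk(scores, num_groups, topk_groups, topk):
    """Pick top-k experts for one token, restricted to the best-scoring groups."""
    per_group = len(scores) // num_groups
    # Assumption: score each group by its single best expert.
    group_best = [max(scores[g * per_group:(g + 1) * per_group])
                  for g in range(num_groups)]
    kept = sorted(range(num_groups), key=lambda g: group_best[g],
                  reverse=True)[:topk_groups]
    candidates = [e for g in kept
                  for e in range(g * per_group, (g + 1) * per_group)]
    return sorted(candidates, key=lambda e: scores[e], reverse=True)[:topk]

def dispatch(tokens_per_rank, routes_per_rank, experts_per_rank):
    """Simulated all-to-all: each rank sends tokens to the ranks hosting their experts."""
    inbox = [[] for _ in tokens_per_rank]
    for src, (tokens, routes) in enumerate(zip(tokens_per_rank, routes_per_rank)):
        for idx, (tok, experts) in enumerate(zip(tokens, routes)):
            for e in experts:
                # Remember where the token came from so the result can be returned.
                inbox[e // experts_per_rank].append((src, idx, e, tok))
    return inbox

def combine(inbox, tokens_per_rank, expert_fn):
    """Simulated reverse all-to-all: run experts, return results, sum per token."""
    out = [[0.0] * len(toks) for toks in tokens_per_rank]
    for received in inbox:
        for src, idx, e, tok in received:
            out[src][idx] += expert_fn(e, tok)  # unweighted sum (gate weights omitted)
    return out

# Toy setup: 4 ranks, 2 experts per rank, each token routed to 2 experts
# drawn from at most 2 groups (one group per rank here).
random.seed(0)
NUM_RANKS, EXPERTS_PER_RANK, TOPK, TOPK_GROUPS = 4, 2, 2, 2
num_experts = NUM_RANKS * EXPERTS_PER_RANK
tokens = [[float(r * 10 + i) for i in range(3)] for r in range(NUM_RANKS)]
routes = [[group_limited_topk([random.random() for _ in range(num_experts)],
                              num_groups=NUM_RANKS, topk_groups=TOPK_GROUPS,
                              topk=TOPK)
           for _ in toks] for toks in tokens]
inbox = dispatch(tokens, routes, EXPERTS_PER_RANK)
outputs = combine(inbox, tokens, expert_fn=lambda e, x: (e + 1) * x)
```

Limiting each token to a few groups caps how many ranks (and so how many links) a single token has to cross, which is the kind of traffic pattern a library like this can then optimize for.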

Essentially, DeepEP is how DeepSeek gets such great performance from their MoE models. They're open-sourcing the core communication technology that makes it all work.

DeepEP is the second product in DeepSeek's Open Source Week.