AiSanity

Monitors your AI for hallucinations. QA layer for AI apps

1 follower

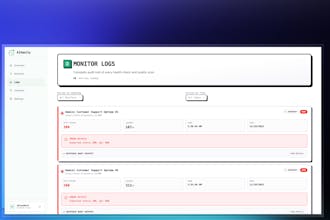

Stop relying solely on ‘200 OK’: a successful HTTP status says nothing about whether the model's output is correct. AiSanity monitors your AI for hallucinations, model drift, and broken JSON schemas. It’s the essential QA layer for AI applications. With a minimal feature set and excellent support, it’s easy to use and well suited to indie hackers, vibe coders, and solopreneurs.
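The listing doesn't describe how AiSanity performs its checks. As a rough illustration of the ‘200 OK’ point, here is a minimal, hypothetical Python sketch of a check that goes past the status code. It confirms that the body is valid JSON and that the expected fields have the expected types. The function `check_ai_response` and the `EXPECTED_FIELDS` schema are assumptions made up for this example and are not part of AiSanity.

```python
import json
from typing import Any

# Hypothetical expected shape of the model's JSON output: field name -> Python type.
# This schema is an illustrative assumption, not an AiSanity format.
EXPECTED_FIELDS: dict[str, type] = {"answer": str, "confidence": float}


def check_ai_response(status_code: int, body: str,
                      expected: dict[str, type] = EXPECTED_FIELDS) -> list[str]:
    """Return a list of problems; an empty list means the response passed.

    A 200 status only says the request succeeded, so the body is checked
    separately for valid JSON and for the expected fields and types.
    """
    problems: list[str] = []
    if status_code != 200:
        problems.append(f"HTTP status {status_code}")
        return problems

    # A truncated or chatty model reply can still arrive with a 200 status.
    try:
        data: Any = json.loads(body)
    except json.JSONDecodeError as exc:
        problems.append(f"invalid JSON: {exc.msg}")
        return problems

    if not isinstance(data, dict):
        problems.append("top-level JSON value is not an object")
        return problems

    # Check each expected field for presence and type, in schema order.
    for field, field_type in expected.items():
        if field not in data:
            problems.append(f"missing field '{field}'")
        elif not isinstance(data[field], field_type):
            problems.append(
                f"field '{field}' should be {field_type.__name__}, "
                f"got {type(data[field]).__name__}"
            )
    return problems


if __name__ == "__main__":
    print(check_ai_response(200, '{"answer": "Paris", "confidence": 0.93}'))  # []
    print(check_ai_response(200, '{"answer": "Paris"'))  # truncated body: invalid JSON
    print(check_ai_response(200, '{"answer": 42}'))
```

All three sample calls return HTTP 200, but only the first one passes. Detecting hallucinations or model drift requires comparing outputs against references or past behavior over time, and a single structural check like this one cannot do that.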

Payment Required
