Twigg

Git for LLMs - a Context Management Tool

321 followers

We built "Git for LLMs", an improved LLM interface. Twigg is a context management tool designed for long-term projects. We provide an interactive tree diagram of your entire LLM conversation and complete control over the context you send to an LLM.

Twigg

1Page

@jamie_borland Love the idea!

Stencil by SUPERHANDS

Twigg

Thank you, really appreciate the feedback! We agree, it's a fascinating area!

Product Hunt Wrapped 2025

Love the Git-for-LLMs approach. Linear chats make long projects painful, so the tree view and branching from any node sound perfect. Complete context control is clutch. Curious how you handle token limits and merging branches. Congrats on the launch, keen to try!

Twigg

@alexcloudstar I'm glad you like it!

Our pricing model is simple. We think hitting arbitrary rate limits is frustrating, so we have aimed to make usage as transparent as possible. For paid tiers, we take a small fee to cover server and platform costs, and the rest is converted into credits. A dashboard shows exactly how many tokens you have used on each model and how much of your balance remains. We charge exactly the same rates as the model providers themselves, with no markup!
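The pass-through pricing described above can be pictured as a small credit ledger. This is a minimal sketch under stated assumptions, not Twigg's implementation: the flat `platform_fee`, the per-million-token prices, the model name and the `CreditLedger` class are all hypothetical, since the actual fee and rates are not given in this thread.

```python
from collections import defaultdict


class CreditLedger:
    """Hypothetical pass-through billing: a fee is taken once at top-up, the rest becomes credits."""

    def __init__(self, payment: float, platform_fee: float,
                 prices_per_million: dict[str, tuple[float, float]]) -> None:
        # platform_fee is an assumed flat amount; the real fee is not stated in this thread.
        self.balance = payment - platform_fee
        # model -> (input, output) USD per million tokens, mirroring the provider's list price.
        self.prices = prices_per_million
        # Per-model token totals, the kind of figure a usage dashboard would display.
        self.tokens_used = defaultdict(int)

    def charge(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Deduct the cost of one request at the provider's own rates, with no markup."""
        in_price, out_price = self.prices[model]
        cost = (input_tokens * in_price + output_tokens * out_price) / 1_000_000
        if cost > self.balance:
            raise ValueError("insufficient credits")
        self.balance -= cost
        self.tokens_used[model] += input_tokens + output_tokens
        return cost


if __name__ == "__main__":
    # Hypothetical numbers: a $10 top-up, a $1 fee, and a made-up model price list.
    ledger = CreditLedger(10.0, 1.0, {"example-model": (3.0, 15.0)})
    ledger.charge("example-model", 100_000, 10_000)
    print(round(ledger.balance, 2), dict(ledger.tokens_used))
```

With these made-up prices, 100,000 input tokens and 10,000 output tokens cost $0.45, leaving $8.55 of the $9.00 in credits.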

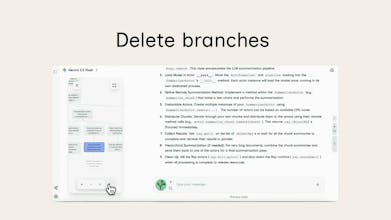

For merging branches, you can manipulate the tree structure to move nodes (and soon branches) around. You can cut, copy, and delete nodes to achieve exactly the context you desire.
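One way to picture these operations is a conversation tree in which the context sent with a prompt is the path from the root to the selected node. The sketch below is a hypothetical model, not Twigg's code: the `Node` class, the helper names `context`, `delete`, `cut` and `copy_to`, and the choice that deleting a node splices its children onto its parent are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # identity comparison, so list.remove/index match the exact node
class Node:
    text: str
    parent: Node | None = field(default=None, repr=False)
    children: list[Node] = field(default_factory=list)

    def add(self, text: str) -> Node:
        """Append a reply below this node, starting or extending a branch."""
        child = Node(text, parent=self)
        self.children.append(child)
        return child


def context(node: Node | None) -> list[str]:
    """Messages sent with a prompt at `node`: the path from the root down to it."""
    path: list[str] = []
    while node is not None:
        path.append(node.text)
        node = node.parent
    return path[::-1]


def delete(node: Node) -> None:
    """Remove one node, splicing its children onto its parent so the branch stays connected."""
    parent = node.parent
    if parent is None:
        raise ValueError("cannot delete the root")
    idx = parent.children.index(node)
    for child in node.children:
        child.parent = parent
    parent.children[idx:idx + 1] = node.children
    node.parent, node.children = None, []


def cut(node: Node, new_parent: Node) -> None:
    """Detach a node together with its subtree and reattach it under another node."""
    if node.parent is None:
        raise ValueError("cannot move the root")
    node.parent.children.remove(node)
    node.parent = new_parent
    new_parent.children.append(node)


def copy_to(node: Node, new_parent: Node) -> Node:
    """Attach a copy of a single message (without its subtree) under another node."""
    return new_parent.add(node.text)
```

For example, deleting an off-topic tangent removes it from the context of every message below it:

```python
root = Node("Plan the project")
api = root.add("Design the API")
tangent = api.add("Tangent: logo ideas")
errors = tangent.add("API error handling")
delete(tangent)
print(context(errors))  # ['Plan the project', 'Design the API', 'API error handling']
```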

I hope you enjoy using Twigg!

Twigg

@alexcloudstar @jamie_borland We've also noticed that branching massively reduces token usage. Because you control your upstream context, far fewer irrelevant tokens are sent with each prompt. This makes your token limits go much further than they otherwise would!
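The token saving from branching can be made concrete by comparing what a linear chat sends with what a single branch sends. This minimal sketch assumes a made-up five-message conversation and counts whitespace-separated words as a rough proxy for tokens; a real tokenizer would give different absolute numbers, and nothing here reflects how Twigg counts tokens internally.

```python
from typing import Optional

# Hypothetical conversation: message id -> (parent id, text).
MESSAGES: dict[str, tuple[Optional[str], str]] = {
    "root": (None, "We are building a task tracker in Go"),
    "api": ("root", "Design the REST endpoints for tasks and users"),
    "logo": ("root", "Brainstorm logo ideas and color palettes for the landing page"),
    "css": ("logo", "Write the CSS for the hero section with those colors"),
    "auth": ("api", "Now add JWT authentication to every endpoint"),
}
# Order in which the messages were written; a linear chat resends all of them.
CHRONOLOGICAL = ["root", "api", "logo", "css", "auth"]


def approx_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def linear_context(msg_id: str) -> list[str]:
    """A linear chat sends every earlier message, relevant or not."""
    end = CHRONOLOGICAL.index(msg_id)
    return [MESSAGES[m][1] for m in CHRONOLOGICAL[: end + 1]]


def branch_context(msg_id: Optional[str]) -> list[str]:
    """A branched chat sends only the path from the root to this message."""
    path = []
    while msg_id is not None:
        parent, text = MESSAGES[msg_id]
        path.append(text)
        msg_id = parent
    return path[::-1]


def context_tokens(context: list[str]) -> int:
    return sum(approx_tokens(t) for t in context)


if __name__ == "__main__":
    print("linear:", context_tokens(linear_context("auth")))  # linear: 43
    print("branch:", context_tokens(branch_context("auth")))  # branch: 23
```

Here the authentication prompt carries 43 words of context in a linear chat but 23 on its branch, because the logo and CSS tangent sits on a sibling branch. The saving repeats with every follow-up prompt on that branch.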

In addition, we offer a Bring Your Own Key tier, which gives you unlimited access once you submit your own key.

Agnes AI

Twigg's visual tree for branching conversations is such a clever fix for messy, never-ending chats; I always lose track of context mid-project! Congrats to the team on the launch!

Twigg

@cruise_chen Great to hear you like it! We would love to hear some feedback too: is there anything in particular you would like to see added?

Twigg

Had so much fun working on this awesome project! I've been using it on a daily basis, and it feels much more intuitive and prompt-efficient than standard LLM interfaces.

We really believe that context management is the future, and Twigg is the solution! Can't wait for people to try it out and would love to hear people's feedback.

Twigg

It's been a fantastic experience working on Twigg. I've been using the later iterations of Twigg to work on Twigg and found it way easier to maintain context and organize my thoughts than standard LLM interfaces. Excited for people to try it out and to hear the feedback!

Stencil by SUPERHANDS

Congrats on the launch! Looks super useful

Twigg

@jamiesunde Thank you! I hope you enjoy using it; let us know if you have any feedback!