Scop.ai

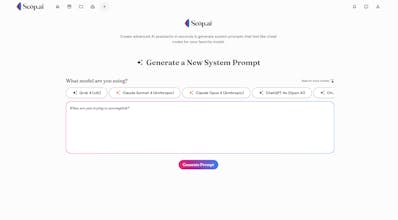

Generate task & model specific system prompts in seconds

29 followers

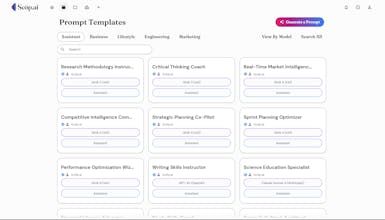

Generate task & model specific AI system prompts for leading LLMs, browse over 200 tested prompt templates, and collaborate on prompts with team members through shared prompt collections.

Grok 4 system prompt generation & templates are live on Scop.ai!

Generate custom task & model specific prompts, or browse a number of templates - each one engineered specifically for Grok 4's unique architecture, including:

Real-Time Market Intelligence Scanner

Research Paper Synthesizer Pro

Competitive Intelligence Command Center

Technology Stack Investigator

Patent Landscape Mapper

Executive Decision Support System

Strategic SWOT Analyzer

OKR Alignment Optimizer

Pitch Deck Perfection Engine

M&A Due Diligence Assistant

API Documentation Genius

Intelligent Code Review Bot

Bug Pattern Analyzer

Architecture Decision Advisor

Security Vulnerability Hunter

LinkedIn Virality Engineer

Newsletter Engagement Maximizer

Brand Voice Consistency Guardian

Story Arc Engineer

SEO Content Optimizer

Prompt Engineering Sensei

Model Performance Diagnostician

Workflow Automation Architect

AI Integration Specialist

Multi-Agent System Designer

+ more!

Each template leverages what makes Grok 4 special:

✅ Deep thinking

✅ Real-time data synthesis from web + X

✅ Code interpreter for verification

Sign up here → https://scop.ai/

This is such a huge time saver for prompt engineers. Are these templates optimized for GPT-4, Claude and Gemini?

@amelia_smith19 Yes!

Generated prompts & templates are optimized specifically for GPT, Claude, Gemini, Grok and 10+ other LLMs with model-specific formatting and tuning. Each one is tailored to work best with your chosen model.

Also, check our model leaderboard to see which model performs best for your task! :) 🏆

What an amazing resource for AI teams and developers. Is there a way to track or rate prompt performance based on usage?

@abigail_martinez1 Hi Abigail,

Great suggestion!

We're actually building prompt evaluations and performance tracking as part of our Q3 '25 rollout, and being able to rate prompts, see usage analytics, and track what's working best for your team would be super valuable!

We're definitely exploring adding more performance insights based on feedback like this. Which specific metrics would be most helpful for your workflow? 🙂

@dylan_scop_ai Can users set specific input/output formats for tasks like data extraction or summarization or are the templates mainly general purpose?

@emily_hernandez3

Hi Emily, thanks for the great question!

Currently our prompts cover both general purpose and specific use cases. You can customize input/output formats through instructions at generation time (for example, by instructing Scop.ai to leave a placeholder) or by editing variables afterward.

We're super excited about adding more prompt fine-tuning, temperature adjustment, and custom variable features once versioning ships, so you'll be able to tune outputs even more precisely for your specific workflows!

Would love to hear more about how Scop.ai could support your specific data extraction or summarization tasks 🚀

How does the system handle model updates like from GPT-4 to GPT-4.5 that might change how a prompt behaves? Are there tools available to flag or revalidate prompts after an update?

@jacob_hernandez4

Hey Jacob,

That's definitely one of the things that makes prompt management tricky!

Right now our prompts are optimized per model based on the initial task requirements. If you want to convert a prompt to a different model, just select the new model when generating and give Scop.ai your current prompt - it will refactor based on the strengths of the new model, which handles most compatibility issues. :)

We're building versioning first, then the prompt evaluation pieces (performance tracking & update recommendations) in Q3 for even more precision & control. Appreciate the thoughtful question & looking forward to sharing more updates on this!
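Until tooling like that exists, one way to flag prompts for revalidation on your own side is to record which model each prompt was last tested against. The snippet below is only a rough sketch of that idea and not a Scop.ai feature: `SavedPrompt`, `stale_prompts`, and the model identifier strings are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SavedPrompt:
    """A stored prompt plus the model it was last validated against (hypothetical schema)."""
    name: str
    text: str
    validated_on: str  # illustrative model identifier, e.g. "gpt-4"


def stale_prompts(prompts, current_model):
    """Return the prompts whose last validation used a different model."""
    return [p for p in prompts if p.validated_on != current_model]


library = [
    SavedPrompt("ticket-summarizer", "Summarize the support ticket.", "gpt-4"),
    SavedPrompt("code-reviewer", "Review this diff for bugs.", "gpt-4.5"),
]

# After switching to a new model, list everything that needs a re-test.
for prompt in stale_prompts(library, "gpt-4.5"):
    print(f"revalidate: {prompt.name} (last validated on {prompt.validated_on})")
```

Anything this flags can then be regenerated for the new model as described above and re-tested before it goes back into use.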

I love the concept. Can prompts have dynamic variables or placeholders that get filled in during runtime?

@sadie_scott Thank you Sadie! Currently you can instruct Scop.ai to add variable placeholders during initial generation, or manually add them afterward.

Enhanced custom variable features and fine-tuning controls plus versioning for even more dynamic control are coming soon as well 🛠️
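For anyone wondering what filling placeholders at runtime can look like once a prompt has been exported, here is a minimal sketch using Python's standard `string.Template`. The `$name` placeholder style, the prompt text, and the `render_prompt` helper are assumptions for illustration; the placeholder syntax Scop.ai actually emits may differ.

```python
from string import Template

# Hypothetical exported prompt with $-style placeholders (assumed syntax).
PROMPT = Template(
    "Summarize the following $doc_type in $max_words words or fewer.\n"
    "Audience: $audience\n\n"
    "$text"
)


def render_prompt(template: Template, **values: str) -> str:
    """Fill every placeholder; raises KeyError if a value is missing."""
    return template.substitute(**values)


filled = render_prompt(
    PROMPT,
    doc_type="support ticket",
    max_words="50",
    audience="engineering managers",
    text="Login fails after password reset.",
)
print(filled)
```

Using `substitute` rather than `safe_substitute` means a forgotten variable fails loudly instead of sending a prompt with a raw placeholder to the model.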

Amazing concept. How do you manage the differences in LLM behavior across providers like OpenAI, Claude or Mistral?

@logan_king

Hi Logan!

Our generation process creates custom prompts tailored to each model's specific strengths and benchmarks - so, for example, a Claude prompt leans on its reasoning abilities, while a GPT prompt is optimized for creativity!

We also run one-shot and multi-shot tests on proven prompts to ensure generations work consistently across providers, and we often update our own generation instructions based on real test results!

Curious, which models are you working with most?