Ruma

Your friendly assistant—personalized, private


Ruma is a blazing-fast, privacy-first offline personal assistant for macOS. Control your Mac, automate tasks, and talk to your system – all without the cloud.

Hey PH! I’m Pradhumn, creator of Ruma—your privacy-first AI co-pilot right in your menu bar. I built Ruma because I was tired of context-less chat windows and cloud-only models.

Demo link: https://youtu.be/NtNhEieq-54

What’s here today:

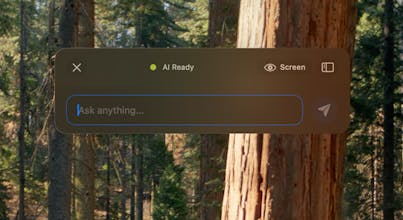

1) Global hotkey chat panel: Invoke Ruma anywhere on macOS (⌘ ⇧ A by default) and keep working without switching apps.

2) API-key model support: Plug in OpenAI, Anthropic, or any other LLM API and start chatting immediately.

3) Hugging Face models on Apple Silicon: Run dozens of HF weights (Llama 2, Vicuna, Mistral, Falcon, GPT4All, etc.) locally via MLX Engine, with no cloud calls needed.

4) Screen-region reasoning: Select any part of your screen (a PDF, a chart, a code snippet) and ask Ruma to explain or summarize it.

5) Persistent memory: Local, disk-backed memory so Ruma never forgets your past projects or preferences.

Coming soon:

- Document analysis and a large-scale, advanced research integration that can keep working for months without losing context

I’m most proud of how you can now summon Ruma with a keystroke, point it at any model you like (cloud or local), and have seamless, multimodal chats—all without ever losing context to the cloud. Can’t wait to hear which upcoming feature you’re most excited about! 🚀