Hallucina-Gen

Spot where your LLM might make mistakes on documents

33 followers

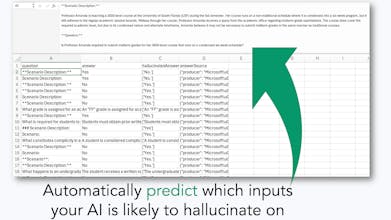

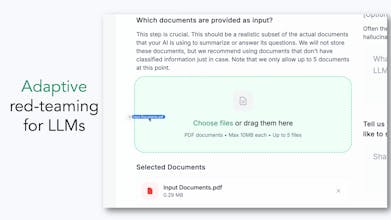

Using LLMs to summarize or answer questions from documents? We auto-analyze your PDFs and prompts and produce test inputs likely to trigger hallucinations. Built for AI developers to validate outputs, test prompts, and squash hallucinations early.
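
To make the idea concrete, here is a rough sketch of what "producing test inputs likely to trigger hallucinations" could look like in its simplest form. This is not Hallucina-Gen's actual method, which this page does not describe. It assumes plain text has already been extracted from the PDF, and the `RISK_PATTERNS` heuristics and all function names are hypothetical, chosen only for illustration.

```python
import re

# Hypothetical heuristics: details an LLM might plausibly misstate.
# These are illustrative assumptions, not the tool's real rules.
RISK_PATTERNS = {
    "numeric detail": re.compile(r"\d"),
    "negation": re.compile(r"\b(?:not|never|no|except|unless)\b", re.IGNORECASE),
}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def flag_risky_sentences(text):
    """Return (reason, sentence) pairs for sentences matching a risk pattern."""
    flagged = []
    for sentence in split_sentences(text):
        for reason, pattern in RISK_PATTERNS.items():
            if pattern.search(sentence):
                flagged.append((reason, sentence))
    return flagged


def to_test_inputs(flagged):
    """Turn each flagged sentence into a probing prompt for manual review."""
    return [
        f'[{reason}] According to the document, is this accurate? "{sentence}"'
        for reason, sentence in flagged
    ]


if __name__ == "__main__":
    doc = (
        "The contract runs for 24 months. "
        "Either party may terminate early. "
        "Refunds are not available after delivery."
    )
    for prompt in to_test_inputs(flag_risky_sentences(doc)):
        print(prompt)
```

In this toy version, sentences with figures or negations are flagged because a summary that drops a "not" or changes a number reverses or distorts the meaning. A real system would presumably also take your prompt and the model's behavior into account.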

It might be useful. Good work 👍

This tool is a game-changer for AI developers! By auto-analyzing PDFs and prompts to produce test inputs that trigger hallucinations, it helps validate outputs and test prompts effectively. I’m excited to see how it helps squash hallucinations early, ensuring more accurate and reliable AI performance from the start!

FairPact AI

Hey there, awesome makers! 👋

We’re super excited to share our new tool that helps catch those tricky AI mistakes in your document-based projects. Give it a try and let us know what you think!

FairPact AI

Really neat tool for anyone building with LLMs over documents. You upload PDFs, plug in your prompts, and it flags spots where your model is most likely to mess up. It's super helpful for testing and sanity-checking without needing to wire up eval pipelines. Definitely worth checking out if you're working on RAG or document-based assistants.