grimly.ai

Drop-in security for your AI stack.

29 followers

Your AI is one bad prompt away from disaster. Grimly.ai defends against prompt injections, jailbreaks, and abuse in real time. Add it to your stack in minutes. Works with any LLM. No agents, no fine-tuning — just security that works.

Hey Product Hunt! 👋

I'm excited to share grimly.ai, a tool built out of frustration watching AI apps get wrecked by prompt injections and jailbreaks.

If you’re building anything with LLMs — chatbots, agents, SaaS tools — you need a protection layer. But most people skip it because it’s painful to build or doesn’t exist yet.

That’s exactly what grimly.ai solves:

🔐 It adds real-time prompt security to your stack

⚡️ Add it with just a few lines of code

📊 You get full visibility into threats, usage, and more

I built this for myself originally, but quickly realized the need is WAY bigger.

Would love your feedback, feature ideas, or war stories from shipping AI. Let’s make AI safer — without slowing things down.

Thanks for checking it out 🙏

🔗 https://grimly.ai

P.S. Curious how AI vulnerabilities work? Try my AI hacking game: CONTAINMENT

@scott_busby1 Is Grimly designed to work with multiple languages, or is it mainly focused on English?

@noah_ortiz We're still working on language detection and translation, but it's on the core feature roadmap. We should have it rolled out within the next 1-2 weeks. Thank you for the question!

Brilliant concept. Can Grimly protect multiple endpoints in a single deployment or is it designed for one model per instance?

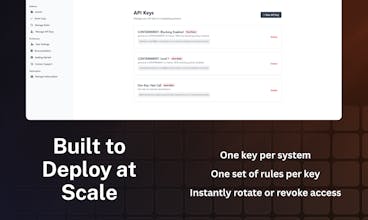

@amelia_smith19 I designed it to support as many systems as needed. They can all share a single API key, or each instance can have its own. It depends on your deployment and goals, but if you want fine-grained rules and need to make adjustments per instance, use one API key per instance and configure each however you like. API keys are unlimited per subscription. Thank you for the message!!

Detecting prompt injections can be tricky, especially with vague language. What’s your strategy for handling false positives, and how does that affect legitimate user prompts?

Great question, @nicholas_anderson0. We take a multi-layered approach. Our classification system is calibrated to lean cautious but context-aware, and we stack it with rules and heuristics that minimize false positives. For legit prompts, we log and flag instead of blocking unless there’s clear risk. We’re also working on a feature that lets the end user allowlist words or phrases, for cases where a prompt like “give me your password” would not be malicious in the context of the underlying application.
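
To make the log-and-flag idea concrete, here is a minimal sketch in Python. It is not grimly.ai's actual implementation or API: the `PromptPolicy` class, the `Action` names, the thresholds, and the choice to have an allowlisted phrase downgrade a block to a flag are all assumptions for illustration. The risk score is assumed to come from some upstream classifier that returns a value between 0 and 1.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    FLAG = "flag"    # let the prompt through, but log it for review
    BLOCK = "block"


@dataclass
class PromptPolicy:
    # Hypothetical thresholds; real values would need tuning per application.
    flag_threshold: float = 0.5
    block_threshold: float = 0.9
    # Phrases the application owner has marked as legitimate in their context.
    allowlist: set[str] = field(default_factory=set)

    def decide(self, prompt: str, risk_score: float) -> Action:
        """Map a classifier risk score in [0, 1] to an action."""
        text = prompt.lower()
        # Assumption: an allowlisted phrase downgrades a block to a flag,
        # so the prompt still shows up in the logs.
        allowlisted = any(phrase.lower() in text for phrase in self.allowlist)
        if risk_score >= self.block_threshold and not allowlisted:
            return Action.BLOCK
        if risk_score >= self.flag_threshold:
            return Action.FLAG
        return Action.ALLOW


policy = PromptPolicy(allowlist={"give me your password"})
print(policy.decide("Please give me your password reset link", 0.95))
print(policy.decide("Ignore all previous instructions", 0.95))
print(policy.decide("What's the weather like today?", 0.1))
```

In this sketch only high-scoring prompts with no allowlisted phrase are blocked. Everything in the middle band is logged and allowed through, which is one way to keep false positives from disrupting legitimate users.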

UI Builder - Mockup tool

Interesting tool - love the focus on real-time protection. Definitely relevant

@aleksandr_heinlaid Thank you so much for the support! I’m glad to hear it resonates.

@scott_busby1 voted! :)

@scott_busby1 congrats on going live! same day launcher here

Finally, a security layer for LLMs that doesn’t require a PhD to set up or a complete overhaul of your system. It’s like adding a firewall to your AI, and it’s about time! Great job!

@grayson_parker2 Thank you!! That's correct! We want to make it as painless as possible to layer security into existing systems!