SynthGen

High-performance framework for efficient batch LLM inference

9 followers

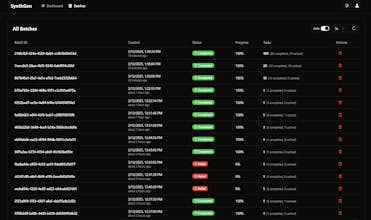

SynthGen is a high-performance framework for batch LLM inference. It combines parallel processing, Rust-powered efficiency, and advanced caching to reduce costs and scale with your workload, and it provides observability through real-time metrics and dashboards.