Launching today

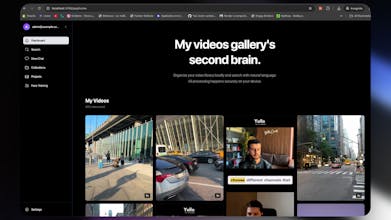

Edit Mind

AI-Powered Local Video Search & Analysis

49 followers

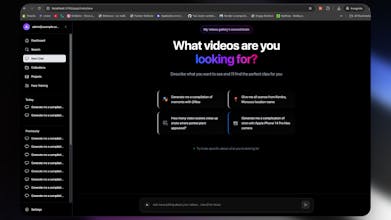

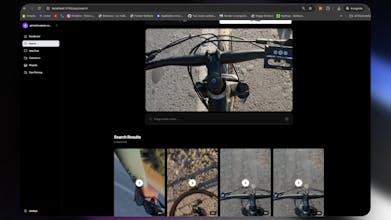

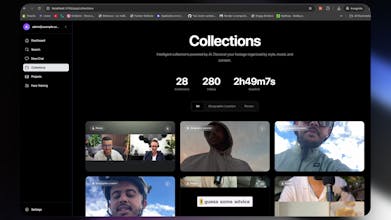

A project built to help you transcribe, analyze, and index your personal video library so you can find the exact part of the video you're looking for, using local ML models and a local vector database. Your videos never leave your computer or server (Docker is supported).

Makerlapse

Hey 👋,

I'm Ilias Haddad, a software developer and content creator (on the side). I love creating and capturing videos, but over time I've accumulated so many that it's hard to manage and search across all of them.

The project started as a personal solution that only transcribed videos using Whisper. It has since evolved to include video frame analysis, multi-modal embeddings, a chat assistant, and image and text search.

What I need from you:

Feedback on the UX/workflow

Bug reports and edge cases

Ideas for new analyzer plugins

Contributors interested in local-first AI tools

The tech stack is Express.js + React Router V7 + Python (PyTorch, Whisper, YOLO) + PostgreSQL + ChromaDB.

Full Docker support for easy deployment.

Try it, break it, and let me know what you think!

GitHub Link: https://github.com/IliasHad/edit-mind

Showcase Video: https://youtu.be/YrVaJ33qmtg?si=BDjJDpJKr_VIHqEE

The local vector database really speeds up searching through videos for me. I didn’t expect it to be this fast. ⚡

Makerlapse

@new_user___01920267f18557962f8fee7 That's great, thank you for your feedback.

Local video search tends to buckle when libraries hit tens of thousands of clips and you need fast reindexing plus precise timecodes for “exact moment” retrieval.

A solid pattern is segment-level indexing with VAD, word-level alignment and diarization via WhisperX on top of faster-whisper, then store vectors + metadata with strong persistence (often Postgres + pgvector) to keep one backup and one query plane.

How are you modeling segment boundaries and syncing Postgres IDs to Chroma collections today, and do you plan a deterministic reindex pipeline for model upgrades without breaking existing links?
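To make the question concrete, here is a minimal sketch of one way to keep segment IDs stable across reindexing. It is an assumption for illustration, not how Edit Mind works today: the namespace string, the `segment_id` helper, and the `video_id`/millisecond fields are all hypothetical. The idea is to derive the ID only from the video and the segment boundaries, and to store the model version as metadata instead of baking it into the ID.

```python
import uuid

# Hypothetical namespace; any fixed UUID works as long as it never changes.
SEGMENT_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_URL, "edit-mind/segments")


def segment_id(video_id: str, start_ms: int, end_ms: int) -> str:
    """Derive a deterministic ID from the video and its segment boundaries."""
    return str(uuid.uuid5(SEGMENT_NAMESPACE, f"{video_id}:{start_ms}:{end_ms}"))


def segment_record(video_id: str, start_ms: int, end_ms: int, model_version: str) -> dict:
    """Build a record whose ID could be shared by the SQL row and the vector entry."""
    return {
        "id": segment_id(video_id, start_ms, end_ms),
        "video_id": video_id,
        "start_ms": start_ms,
        "end_ms": end_ms,
        # The model version is metadata only, so re-embedding keeps the same ID.
        "model_version": model_version,
    }


old = segment_record("video-42", 1000, 5000, model_version="v1")
new = segment_record("video-42", 1000, 5000, model_version="v2")
```

With this scheme, `old["id"]` and `new["id"]` are identical, so re-embedding with a newer model would overwrite the vector in place and existing links would keep pointing at the same segment. It only holds as long as the segment boundaries themselves are reproducible.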

How does the search latency look once the library grows to thousands of videos?

Makerlapse

@lightninglx It depends. There are two options. Search by image is fast (about 500 ms to 2 seconds) because it only searches the vector collection of visual frames. A text query searches across 3 vector collections (Text, Visual, and Audio), so it can take longer: with my library of 1,200 videos, a search can take up to 10 seconds depending on how complex the query is.
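For anyone wondering how results from several collections can be combined into one list, here is a minimal sketch using reciprocal rank fusion. This is a generic technique shown under assumptions, not necessarily what the project does: the `fuse_rankings` helper, the segment ID format, and the sample results are all hypothetical, and the vector queries themselves are left out.

```python
from collections import defaultdict


def fuse_rankings(rankings: dict, k: int = 60, top_n: int = 5) -> list:
    """Merge several ranked lists of segment IDs with reciprocal rank fusion."""
    scores = defaultdict(float)
    for ranked_ids in rankings.values():
        for rank, seg_id in enumerate(ranked_ids, start=1):
            # Segments ranked highly in several collections accumulate more score.
            scores[seg_id] += 1.0 / (k + rank)
    # Sort by fused score (descending), then by ID so ties are deterministic.
    ordered = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [seg_id for seg_id, _ in ordered[:top_n]]


# Hypothetical per-collection results, best match first.
results = {
    "text": ["clip1@00:10", "clip2@01:30", "clip3@00:05"],
    "visual": ["clip2@01:30", "clip1@00:10"],
    "audio": ["clip2@01:30", "clip4@02:00"],
}
fused = fuse_rankings(results)
print(fused)
```

Here `clip2@01:30` comes out first because it appears near the top of all three lists. Since the three queries are independent, they could also run concurrently, which may help with the latency of text search.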

The local video library seems limiting. Is there a way to extend it to search YouTube as well (for example, the top 30 videos)?

Makerlapse

@david_chen37 Can you please elaborate? Would you like to search YouTube videos? I ask because this project is designed for your local video library.