ModelRiver

One AI API for production - streaming, failover, logs

81 followers

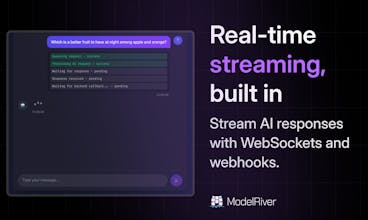

Unified AI API gateway for multiple LLM providers with streaming, custom configs, built-in packages, routing, rate limits, failover, and analytics.

Hi Product Hunt,

We’re Akarsh and Vishal, the co-founders of ModelRiver. Thanks for checking it out.

Why we built ModelRiver

We’ve both spent a lot of time building AI-powered products, and we kept running into the same problems. Every AI provider came with a different SDK and set of quirks. Streaming behaved differently across models. Switching providers meant rewriting code. And when a provider had an outage, the application went down with it. Debugging was also painful because logs and usage were spread across multiple dashboards.

We realized we were spending more time maintaining AI infrastructure than building the actual product.

What we wanted to solve

We set out to build a single, OpenAI-compatible API that works across providers, without locking teams into one vendor. As we spoke with more developers, it became clear that abstraction alone wasn’t enough. Production systems need:

- consistent streaming

- automatic failover and retries

- unified logs and usage

- the ability to bring your own API keys

ModelRiver is our attempt to make AI infrastructure reliable, observable, and easy to reason about.
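To make "automatic failover and retries" concrete, here is a minimal sketch of the general pattern, not ModelRiver's actual implementation. The names `ProviderError`, `call_with_failover`, `flaky_primary`, and `healthy_backup` are hypothetical and exist only for illustration; the providers are plain Python callables rather than real SDK clients.

```python
import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error type for a retryable provider failure."""


def call_with_failover(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> tuple[str, str]:
    """Try providers in order, retrying each a few times before moving on.

    Returns (provider_name, response_text).
    """
    errors: list[str] = []
    for name, call in providers:
        for attempt in range(retries_per_provider):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                # Exponential backoff between attempts (zero by default here).
                time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stand-in providers: the first always fails, the second always succeeds.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("simulated outage")


def healthy_backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, text = call_with_failover(
    [("primary", flaky_primary), ("backup", healthy_backup)], "hello"
)
```

The caller only sees which provider answered and what it returned, which is the property that lets application code stay unchanged when a provider goes down.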

How the product evolved

Early versions focused mainly on multi-provider support. Feedback from early users pushed us toward reliability and observability. Streaming stability, failover behavior, and logs became core features rather than optional add-ons.

ModelRiver is still early, but it’s already being used in real applications, and we’re iterating quickly based on real-world feedback.

We’d love to hear your thoughts, questions, or what you’d like to see next.

@akarshc This is very cool Akarsh, congrats on the launch. How do you handle HA for yourselves? Also, given the different flavours of streaming (OpenAI vs. Anthropic vs. whoever) in prod, how do you deal with that?

@zolani_matebese Thanks, really appreciate that.

On high availability, we treat ModelRiver itself like any other piece of critical infrastructure. The control plane and data plane are separated, and request routing is designed to be stateless so we can scale horizontally and absorb node failures. At the provider layer, we actively track request health and timeouts, and route away from degraded providers or models automatically rather than waiting for hard failures. The goal is that a single provider issue does not become a customer-visible outage.
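As a rough illustration of routing away from degraded providers before they fail hard, here is a small sketch based on a sliding window of recent outcomes. This is an assumption about how such tracking could work, not a description of our internals; `ProviderHealth`, `pick_provider`, the window size, and the failure-rate threshold are all hypothetical.

```python
from collections import deque


class ProviderHealth:
    """Sliding window over the most recent request outcomes for one provider."""

    def __init__(self, window: int = 20, max_failure_rate: float = 0.5):
        # True = success, False = failure or timeout; old entries fall off.
        self.outcomes = deque(maxlen=window)
        self.max_failure_rate = max_failure_rate

    def record(self, ok: bool) -> None:
        self.outcomes.append(ok)

    def failure_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def is_degraded(self) -> bool:
        return self.failure_rate() > self.max_failure_rate


def pick_provider(preferred_order, health):
    """Return the first non-degraded provider in preference order.

    If every provider is degraded, fall back to the least-bad one.
    """
    for name in preferred_order:
        if not health[name].is_degraded():
            return name
    return min(preferred_order, key=lambda n: health[n].failure_rate())


health = {
    "provider_a": ProviderHealth(window=4),
    "provider_b": ProviderHealth(window=4),
}
# provider_a has mostly failed recently; provider_b looks fine.
for ok in (True, False, False, False):
    health["provider_a"].record(ok)
health["provider_b"].record(True)

choice = pick_provider(["provider_a", "provider_b"], health)
```

Because the decision is driven by recent failure rate rather than a single error, traffic shifts when a provider is degraded, not only when it is fully down.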

Streaming differences were one of the harder parts. Each provider has slightly different semantics around chunking, termination signals, and error states. Internally, we normalize everything into a single streaming contract and enforce that contract at the edge. That way, applications see consistent behavior regardless of which provider is behind the request. When a provider has quirks or edge cases, we handle those internally rather than pushing that complexity to users.
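To show what normalizing into a single streaming contract can look like, here is a simplified sketch. The `StreamEvent` type and both adapter functions are hypothetical, and the payload shapes are reduced approximations of OpenAI-style and Anthropic-style server-sent events, not a full treatment of either format or of our real contract.

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class StreamEvent:
    """Hypothetical unified event: kind is "text" or "done"."""
    kind: str
    text: str = ""


def normalize_openai_style(lines: Iterable[str]) -> Iterator[StreamEvent]:
    """Adapt OpenAI-style chunks, which end with a literal [DONE] sentinel."""
    for line in lines:
        if not line.startswith("data: "):
            continue
        payload = line[len("data: "):].strip()
        if payload == "[DONE]":
            yield StreamEvent("done")
            return
        delta = json.loads(payload)["choices"][0].get("delta", {})
        if delta.get("content"):
            yield StreamEvent("text", delta["content"])


def normalize_anthropic_style(lines: Iterable[str]) -> Iterator[StreamEvent]:
    """Adapt Anthropic-style events, which end with a message_stop event."""
    for line in lines:
        if not line.startswith("data: "):
            continue  # skip "event: ..." lines and blanks
        event = json.loads(line[len("data: "):])
        if event.get("type") == "content_block_delta":
            text = event.get("delta", {}).get("text", "")
            if text:
                yield StreamEvent("text", text)
        elif event.get("type") == "message_stop":
            yield StreamEvent("done")
            return


openai_lines = [
    'data: {"choices":[{"delta":{"content":"Hel"}}]}',
    'data: {"choices":[{"delta":{"content":"lo"}}]}',
    "data: [DONE]",
]
anthropic_lines = [
    "event: content_block_delta",
    'data: {"type":"content_block_delta","delta":{"type":"text_delta","text":"Hel"}}',
    'data: {"type":"content_block_delta","delta":{"type":"text_delta","text":"lo"}}',
    "event: message_stop",
    'data: {"type":"message_stop"}',
]

from_openai = list(normalize_openai_style(openai_lines))
from_anthropic = list(normalize_anthropic_style(anthropic_lines))
```

Both adapters emit the same sequence of events for the same text, so downstream code handles one shape and one termination signal.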

In practice, this lets teams test streaming once and trust that it behaves the same way in production, even if the underlying model changes.

@akarshc Thanks for the comprehensive answer. Signed up to do some testing.

Another area we spent a lot of time on is integration testing.

When teams are wiring AI into an application, most of the cost and friction happens before anything ships. You want to test request flow, streaming, retries, fallbacks, and error handling, but you don’t want to burn real credits while doing that.

In ModelRiver, users can turn on a testing mode that lets them exercise the full integration without costing them anything. This makes it possible to:

- validate request and response handling

- test streaming and partial responses

- simulate retries and failover paths

- verify logging and usage reporting

- run CI or staging tests safely

The goal is to let teams treat AI integrations like any other dependency. You should be able to test them thoroughly before production, without worrying about cost or side effects.
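For a sense of what this kind of test looks like on the application side, here is a minimal sketch using a local test double. It does not show ModelRiver's testing mode or its API; `FakeStreamingProvider` and `collect_with_fallback` are hypothetical names, and the fallback policy (discard partial output, then use the backup) is just one assumption among several reasonable choices.

```python
from typing import Iterator, Optional, Sequence


class FakeStreamingProvider:
    """Deterministic stand-in for a real provider; makes no network calls."""

    def __init__(self, chunks: Sequence[str], fail_after: Optional[int] = None):
        self.chunks = list(chunks)
        self.fail_after = fail_after  # raise after this many chunks, or never
        self.calls = 0

    def stream(self, prompt: str) -> Iterator[str]:
        self.calls += 1
        for i, chunk in enumerate(self.chunks):
            if self.fail_after is not None and i >= self.fail_after:
                raise ConnectionError("simulated mid-stream failure")
            yield chunk


def collect_with_fallback(prompt: str, primary, backup) -> str:
    """Buffer the primary stream; on failure, drop partial output and use backup."""
    try:
        return "".join(primary.stream(prompt))
    except ConnectionError:
        return "".join(backup.stream(prompt))


# The primary breaks after one chunk, so the backup's full answer is returned.
primary = FakeStreamingProvider(["Hel", "lo"], fail_after=1)
backup = FakeStreamingProvider(["Hello", " world"])
result = collect_with_fallback("hi", primary, backup)
```

A test like this can run in CI on every commit because it exercises the partial-response and failover paths without touching a paid endpoint.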

If you’re doing integration testing with LLMs today, I’d be curious to hear what’s been painful or missing.

Hey,

Unified AI API gateways that handle multi-provider integration, automatic failover, analytics and workflows solve a real pain for engineering teams trying to ship AI features reliably and cost-effectively, especially in production environments where uptime and predictability matter.

One area the current page could sharpen is connecting those technical wins to measurable product outcomes, for example, quicker time to ship new AI capabilities, fewer incidents from provider outages, or predictable cost per request across providers. Making the business impact as clear as the engineering value helps convert interest into adoption beyond technical audiences.

I help SaaS and tooling founders refine positioning and landing copy so that infrastructure products are seen not just as tech wins but as business enablers that boost velocity and reduce risk. If you decide to strengthen how ModelRiver communicates that, I can help shape those narratives.

Best,

Paul

@akarshc Glad it was useful.

What I’m seeing with products like ModelRiver is that once the technical layer is solid, the biggest lift usually comes from making the business impact instantly legible to non-engineering decision-makers (founders, PMs, CTOs thinking in risk/cost terms).

If it’s helpful, a common next step teams take is a focused landing pass that:

- translates infra features into risk reduction, cost predictability, and shipping velocity

- sharpens the hero + above-the-fold narrative for mixed technical / business audiences

- aligns the page with how buyers actually justify AI infra internally

No rush on this, just sharing the pattern I see working well for AI infrastructure tools at this stage.

If you decide to pressure-test ModelRiver’s messaging against those outcomes, happy to take a closer look.

Appreciate the thoughtful response, and best of luck with the next iteration.

@akarshc Thanks, really appreciate the thoughtful note.

You articulated the core challenge very clearly: infra tools like ModelRiver don’t get chosen on features, they get justified on predictability, risk reduction, and shipping velocity. Once that framing is obvious above the fold, everything downstream tends to convert more cleanly.

When you do get to the pressure-testing phase, I’m happy to look at it with a critical eye, especially around:

- how quickly a non-engineering decision-maker “gets it”

- whether the hero and first scroll anchor the cost / speed / reliability narrative

- where the story can be tightened to support internal buy-in, not just developer interest

No rush at all, feel free to reach out once you have something concrete to react to.

Glad the perspective was useful, and best of luck with the next iteration.

Vieww

Looks interesting 👍

Do you have any real-world production use cases already using ModelRiver?

@akhil4755 Here’s an example GitHub repository for implementation reference.

GitHub repo: https://github.com/modelriver/modelriver-chatbot-demo

Is ModelRiver free to use, or are there any charges or fees that users should be aware of?

This hits a real pain point for teams building production AI. Juggling different SDKs, streaming quirks, outages, and scattered logs adds a lot of overhead that most people underestimate until they ship.

The focus on consistent streaming, automatic failover, and unified logs feels especially practical. Being able to switch providers or survive outages without rewriting code is a big deal in real systems, not just demos.

Curious how teams typically adopt this: do they start with observability first, or use it as a full routing layer from day one? Solid work solving an unglamorous but important problem.

@sujal_thaker Thanks Sujal!

Appreciate that, you’re describing exactly the class of problems that motivated this.

In practice, most teams start by putting it in front of one or two streaming paths where failures or debugging pain are already obvious, rather than flipping everything at once. Observability tends to be the first thing people lean on, because it’s useful even if you don’t change how requests are routed yet.

Once that’s in place, some teams expand it into a fuller routing layer for streaming calls, especially when they start juggling multiple providers or need better isolation from outages. It’s intentionally designed to be incremental, so you don’t have to commit to it everywhere on day one.

Hi, quick follow-up on my last note.

Curious whether you’ve already started pressure-testing the risk / cost / shipping-velocity framing on the landing, or if that’s still queued for a later iteration.

If you have an early draft or even a rough hero direction, happy to give quick, candid feedback from a buyer-decision lens.

Best,

Paul